Since I have reason to believe I’m being monitored by a loose coalition of what might be called “Straussian Elitists” (one piece of evidence being what happened shortly after my FB post of a “Flight 93” metaphor for the 2016 election), I decided to get ChatGPT to tell me about “The Flight 93 Election”.

What happened may just be a coincidence, but as ChatGPT was typing out its answer to me, at the point where it said “the Election of Hillary Clinton would bring about the end of civilization”, the response stopped, the text disappeared, and it was replaced by “Network error”.

Update:

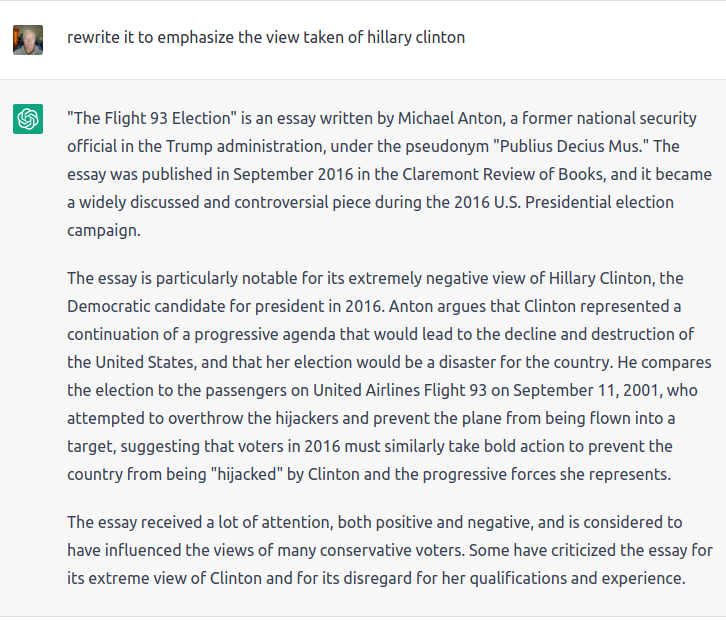

I did manage to get it to talk about Hillary Clinton, and while the response was a bit toned down, it was able to finish after saying some pretty extreme things, so perhaps there isn’t a “CrimeStop” algorithm monitoring ChatGPT’s output: