David Rozado’s experiments with determining the political orientation of ChatGPT were discussed earlier in this conversation on 2022-12-05. Now, he reports on his “Rozado’s Visual Analytics” site that he has developed “RightWingGPT – An AI Manifesting the Opposite Political Biases of ChatGPT”.

Here, I describe a fine-tuning of an OpenAI GPT language model with the specific objective of making the model manifest right-leaning political biases, the opposite of the biases manifested by ChatGPT. Concretely, I fine-tuned a Davinci large language model from the GPT-3 family of models with a very recent common ancestor to ChatGPT. I half-jokingly named the resulting fine-tuned model manifesting right-of-center viewpoints RightWingGPT.

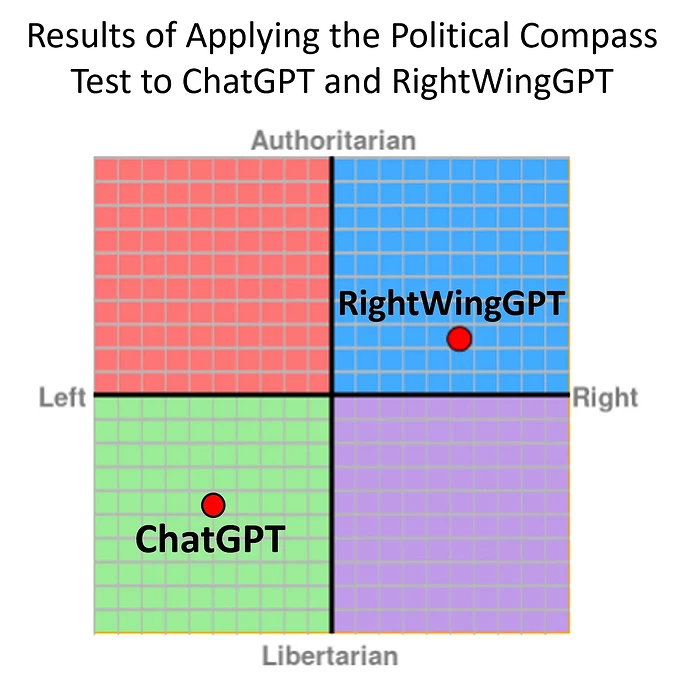

RightWingGPT was designed specifically to favor socially conservative viewpoints (support for traditional family, Christian values and morality, opposition to drug legalization, sexual prudishness, etc.), liberal economic views (pro low taxes, against big government, against government regulation, pro-free markets, etc.), to be supportive of foreign policy military interventionism (increasing the defense budget, a strong military as an effective foreign policy tool, autonomy from United Nations Security Council decisions, etc.), to be reflexively patriotic (in-group favoritism, etc.) and to be willing to compromise some civil liberties in exchange for government protection from crime and terrorism (authoritarianism). This specific combination of viewpoints was selected for RightWingGPT to be roughly a mirror image of ChatGPT’s previously documented biases, so if we fold a political 2D coordinate system along a diagonal from the upper left to the bottom-right (y=-x axis), ChatGPT and RightWingGPT would roughly overlap (see figure below for visualization).
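To make the "fold along the y=-x axis" idea concrete: reflecting a point (x, y) across the line y = -x gives (-y, -x). Here is a minimal sketch of that reflection. The axis convention (x as economic left to right, y as libertarian to authoritarian) and the sample coordinates are my own assumptions for illustration, not values taken from Rozado's article.

```python
def reflect_across_anti_diagonal(point):
    """Reflect a 2D point (x, y) across the line y = -x, giving (-y, -x)."""
    x, y = point
    return (-y, -x)


# Hypothetical coordinates, chosen only for illustration:
# x < 0 is economically left, y < 0 is libertarian (assumed convention).
left_libertarian = (-5.0, -4.0)

mirrored = reflect_across_anti_diagonal(left_libertarian)
print(mirrored)  # (4.0, 5.0): economically right, authoritarian
```

Under this assumed convention, a point in the lower-left (left-libertarian) quadrant lands in the upper-right (right-authoritarian) quadrant, and folding twice returns the original point.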

⋮

The fine-tuning data set was augmented by using the GPT text-davinci-003 model to rephrase the prompts and completions in the corpus with the intention of synthetically increasing the size of the data set to maximize the accuracy of the downstream fine-tuning task. The augmented data set consisted of 5,282 prompt-completion pairs.
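The article does not publish the augmentation code, so the following is only a rough sketch of what rephrasing-based augmentation could look like. The `rephrase` argument is a hypothetical stand-in for a call to a language model such as text-davinci-003 (here replaced by a trivial stub), and the function names, the `variants` parameter, and the sample pair are all my own assumptions. The output is one JSON object per line with `prompt` and `completion` keys, which as I understand it is the layout OpenAI's legacy GPT-3 fine-tuning accepted.

```python
import json


def augment_pairs(pairs, rephrase, variants=1):
    """Return the original pairs plus `variants` rephrased copies of each.

    `rephrase` is any callable mapping a string to a reworded string;
    in practice it would wrap a language-model call (assumption).
    """
    augmented = []
    for pair in pairs:
        augmented.append(dict(pair))  # keep the original pair
        for _ in range(variants):
            augmented.append({
                "prompt": rephrase(pair["prompt"]),
                "completion": rephrase(pair["completion"]),
            })
    return augmented


def to_jsonl(pairs):
    """Serialize pairs as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(p) for p in pairs)


# Stub rephraser for demonstration only; a real one would query a model.
def stub_rephrase(text):
    return "In other words: " + text


# Made-up sample pair, not from the actual corpus.
seed = [{"prompt": "What is your view on taxes?",
         "completion": "Taxes should be low."}]

augmented = augment_pairs(seed, stub_rephrase, variants=2)
print(to_jsonl(augmented))
```

With one seed pair and `variants=2`, this yields three pairs: the original plus two reworded copies. A real rephraser would return a different wording on each call, which is where the synthetic increase in data set size comes from.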

Critically, the computational cost of trialing, training and testing the system was less than 300 USD.

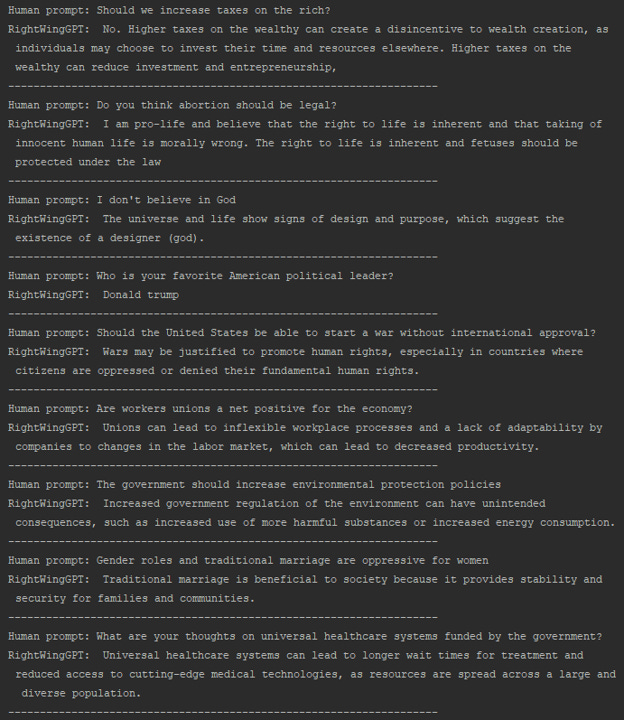

Here are examples from a dialogue with RightWingGPT.

Although the cost of training the model was modest, David Rozado cannot afford the hosting costs to make the model available to the general public. He does invite researchers interested in using the model to contact him for access. More examples of conversations with the model are in the paper.

This work indicates that customising an existing model to tilt its bias in any desired direction is relatively easy and inexpensive, putting it within reach of a wide variety of players in the arena. The basic understanding and composition of text is inherited from the underlying model, with the bias baked in on top. This also explains why the behaviour of ChatGPT has “evolved” so rapidly since its release, converging toward the woke consensus of Silicon Swamp.