So long as the ML industry ignores the Algorithmic Information Criterion for causal model selection, its data efficiency is going to remain in the dark ages, aka “statistics”, where interpolation is everything. People who claim that Transformers are not so constrained are merely applying the Algorithmic Information Criterion in an unprincipled manner, so as to keep everyone from the next revolution in the philosophy of science. As Scott Adams repeatedly points out about “AI”:

“We” don’t want the truth. The truth would break “the system”. “Everything” would stop working. Genuine AI will never be permitted.

So, yeah, I get it: There are enormous forces at work maintaining “The Way Things Are” in opposition to any radical advancement in science – including, of course, applying the sacrifice of the Baby Boom Generation on the altar of bits rather than atoms to something other than “Generative Artificial Stupidity”.

PS: One of the clues that GPT-4 has actually started applying AIC in an unprincipled manner is that OpenAI refuses to say anything about the number of “parameters”. This “We’re running out of DATA” video speculates an increase in parameters to 500B. I speculate it isn’t nearly as large an increase, despite being multimodal, and may even be a DECREASE (at least in the language mode) – something that, if even hinted at, would make people suspect that the whole ML industry is founded on a Big Lie.

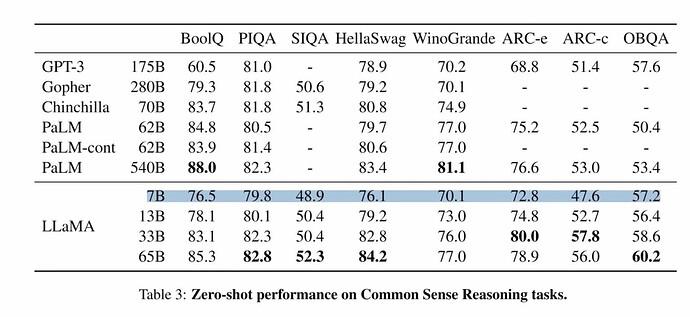

PPS: Parameter distillation has, for some time, demonstrated that “parameter count” is fluff – but now it’s starting to be exposed as the way to get superior performance, exactly as AIT would predict: