Another application of the Bellman principle of optimality and dynamic programming? What a relief; I was starting to feel left behind forever.

Hutter on AIXI, the most rigorous definition of AGI for 2 decades:

The main idea is to replace the unknown environmental distribution in the Bellman equations by a suitably generalized universal Solomonoff distribution.

What people don’t really want is the truth, but the truth is what they get with “a suitably generalized universal Solomonoff distribution”, which comes with Solomonoff induction relative to a given “training set”, aka “lossless compression of the training set to its Kolmogorov complexity”.
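For anyone who wants Hutter's sentence in symbols, here is a rough sketch. The notation is my own shorthand rather than a quote from Hutter: $a_i$ is the action at cycle $i$, $e_i = o_i r_i$ is the percept (observation plus reward), $m$ is an assumed finite horizon, $U$ is a universal monotone Turing machine, and $\ell(q)$ is the length of program $q$ in bits. With a known environment $\mu$, the Bellman (expectimax) choice of action at cycle $k$ is

$$
a_k^{\mu} \;=\; \arg\max_{a_k}\sum_{e_k}\;\cdots\;\max_{a_m}\sum_{e_m}\;\bigl[r_k+\cdots+r_m\bigr]\;\mu(e_1\ldots e_m \mid a_1\ldots a_m)
$$

AIXI keeps the same recursion but swaps the unknown $\mu$ for a universal mixture over all programs consistent with the interaction history:

$$
\xi(e_1\ldots e_m \mid a_1\ldots a_m) \;=\; \sum_{q\,:\,U(q,\,a_1\ldots a_m)\,=\,e_1\ldots e_m} 2^{-\ell(q)}
$$

The $2^{-\ell(q)}$ weight is where compression enters: the shortest programs that reproduce the history dominate the sum. Dropping the actions and rewards leaves plain sequence prediction with this prior, which is Solomonoff induction; adding the $\arg\max$ over actions is what turns it into AIXI.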

So we’ll have endless palavering and misdirection from the likes of Sam Altman*, despite the presence of someone like Ilya who seems like he “gets” that the gold standard is Solomonoff Induction.

* Guess who had a seminal influence on ycombinator’s “ML investor culture” where I was diagnosed as “psychotic” for suggesting that Solomonoff induction might present investment opportunities. I was diagnosed by someone who couldn’t distinguish between AIXI and Solomonoff induction – someone who rendered his diagnosis on the basis of my “psychotic speech pattern” in my attempt to explain to him that there was such a distinction. He compassionately offered me an 800 number to “get help”.

This, my friends, is the investment culture of a place where you need to check your shoes for human shit.

On 2023-12-07, the Wall Street Journal ran a front-page article reporting an interview with Helen Toner, the former OpenAI board member who led the coup to oust Sam Altman as CEO.

Here is the article, “The OpenAI Board Member Who Clashed With Sam Altman Shares Her Side”, liberated from behind the WSJ paywall by archive.is.

“Our goal in firing Sam was to strengthen OpenAI and make it more able to achieve its mission,” she said in an interview with The Wall Street Journal.

Toner held on to that belief when, amid a revolt by employees over Altman’s firing, a lawyer for OpenAI said she could be in violation of her fiduciary duties if the board’s decision to fire him led the company to fall apart, Toner said.

“He was trying to claim that it would be illegal for us not to resign immediately, because if the company fell apart we would be in breach of our fiduciary duties,” she told the Journal. “But OpenAI is a very unusual organization, and the nonprofit mission—to ensure AGI benefits all of humanity—comes first,” she said, referring to artificial general intelligence.

⋮

At one point during the heated negotiations, a lawyer for OpenAI said the board’s decision to fire Altman could lead to the company’s collapse. “That would actually be consistent with the mission,” Toner replied at the time, startling some executives in the room.

In the interview, Toner said that comment was in response to what she took as an “intimidation tactic” by the lawyer. She says she was trying to convey that the continued existence of OpenAI isn’t, by definition, necessary for the nonprofit’s broader mission of creating artificial general intelligence that benefits humanity at large. A simultaneous concern of researchers is that AGI, an AI system that can do tasks better than most humans, could also cause harm.

⋮

Toner was previously an active member of the effective-altruism community, which is multifaceted but shares a belief in doing good in the world—even if that means simply making a lot of money and giving it to worthy recipients. In recent years, Toner has started distancing herself from the EA movement.

“Like any group, the community has changed quite a lot since 2014, as have I,” she said.

Toner graduated from the University of Melbourne, Australia, in 2014 with a degree in chemical engineering and subsequently worked as a research analyst at a series of firms, including Open Philanthropy, a foundation that makes grants based on the effective-altruism philosophy.

Here is an acerbic take on the interview posted as a mega-𝕏 by Disgraced Propagandist.

Nobody is talking about the fact that yesterday on the front page of the Wall Street Journal the full facts behind Sam Altman’s firing were revealed, and revealed to be perhaps the most paradigmatic longhouse situation of all time.

Essentially the entire affair boiled down to a feud between Sam and board member Helen Toner, an unqualified Australian longhouse harridan only 31 years old with some fancy BS DC think tank strategy director title. The feud arose from the fact that Helen was going around criticizing the speed at which OpenAI was moving, particularly in relation to grant-bait diversity operations like Anthropic, and saying that her mission as a board member was not in fact OpenAI’s success but the success of humanity at large.

Sam, understandably, started working to get her out, and she responded by organizing the other women and simps on the board to oust Sam behind his back (as always happens in longhouse environments).

During this process, when asked whether the ouster of Sam could destroy the company, and thus violate both the mission of OpenAi and her fiduciary duty, she responded (literally) that the destruction of OpenAi would in fact be in line with the mission of OpenAi, because it could be seen as more beneficial to humanity in the long run. Utter, total insanity.

The crazy part is that SHE SUCCEEDED. This all worked, and Sam was fired. The only reason he made it back is because his core team of employees revolted and said they wouldn’t work if he was fired. Without that, Helen Toner would be happily ruling over the ashes of OpenAI today. Instead, Sam took back control and ousted the board members that organized against him—meaning that today OpenAi has no female board members (which btw I believe is a violation of California law).

Remember, longhouse harridans are eating our institutions every single day, and they happily do it with the belief that in destroying something beautiful they are actually in the long run “helping humanity.” Usually there’s no revolt to stop them.

If you’re unfamiliar with the term “longhouse” as used in this screed, here you go. It’s from Bronze Age Mindset.

ChatGPT is about as “beautiful” as the icing on the sidewalks of its host city, if you are so stoned out on Fentanyl you mistake it for chocolate.

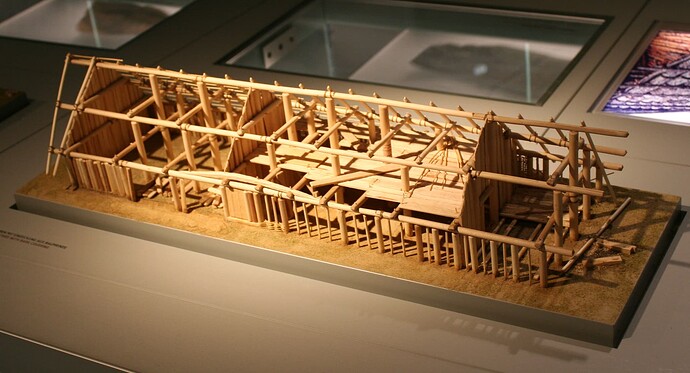

Borat Age Pervert may have been onto something but then he, as expected, perverted it when he ab/used “longhouse” to imply collectivism. He was onto something because there is good reason to believe the Early European Farmers (haplogroup G) were more collectivist than either Western Hunter Gatherers (I haplogroup) or the Yamnaya (haplogroup R), and they did, indeed, feature the largest freestanding structures in the world during the height of their dominance of Europe 7000 YBP.

And certainly Gimbutas’s feminist take on archaeology, particularly of the haplogroups I and R lent ammunition to attack at the height of Boomers’ (largely I and R descent) female fertile years. So “Borat” is kind of playing to the “Boomers Bad” crowd with this in a manner with which we might well sympathize.

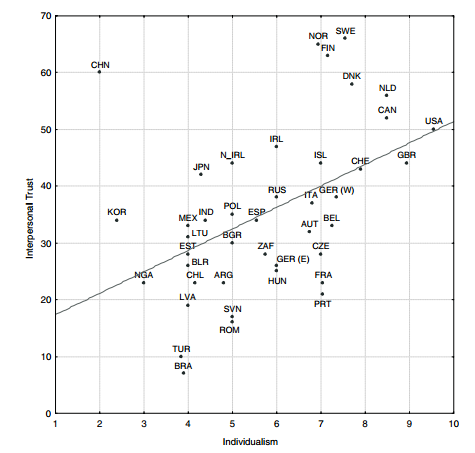

But conflating a cold-weather adaptation with a collectivist adaptation is, well, perverse. Communal halls can serve purposes other than collective residency, and they are known to have so functioned in Norse culture, which is also known to be among the most individualistic in the world. Having given the pervert his due, I’ll also say that there does appear to be a trend in the sociological data supporting a kind of “regression to collectivism” in Norse culture driven, perhaps, by environmental pressures – particularly during the wars of resistance to the process of JudeoChristianization.

Huge repository of information about OpenAI and Altman just dropped — 'The OpenAI Files'.

There's so much crazy shit in there. Here's what Claude highlighted to me:

1. Altman listed himself as Y Combinator chairman in SEC filings for years — a total fabrication (?!):

"To…

— Rob Wiblin (@robertwiblin) June 18, 2025

Corrections: https://x.com/theo/status/1935918750836179214?s=46

https://www.courtlistener.com/docket/69013420/musk-v-altman/

“This is the only chance we have to get out from Elon. Is he the ‘glorious leader’ that I would pick? We truly have a chance to make that happen.

Financially, what will take me to $1B?”

– OpenAI President Greg Brockman’s diary [2017]

Deep down, it really is about the money. pic.twitter.com/ELzvTsNC9g

— Deedy (@deedydas) January 16, 2026

Deep down, it is also about the assumption that the needed electric power will be there – 24/365, stable steady & utterly reliable – to power AI … and everything else.

Here is Art Berman to tell us that is not going to happen. To put words into Mr. Berman’s mouth, our Political Class is not capable of the sort of long-term planning required to produce, transform, and transport the electric power we will need in the future. And this is happening against a background of the facilities built by a wiser earlier generation approaching the end of their working lives.

Mr. Berman implies this will mean an inflection point where our over-complex society collapses to something simpler, where there will be fewer, poorer, but happier human beings.

He is undoubtedly correct that the wages of our past sins are coming due, with very uncomfortable consequences. But I would be more hopeful that, after things get much worse, there would be politicians & regulators hanging from lampposts and then some hard decades while mines are re-opened, steel works rebuilt, nuclear power plants constructed, and eventually the upward trend of progress will be continued. Our great-grandchildren will be fine.

Complexity’s Revenge: Electric Power and AI | Art Berman

“We need gas. We need nuclear. We need it all. We need it now.

That was Virginia Governor Glenn Youngkin’s message on surging U.S. power demand. It sounds decisive. It’s ignorant. It treats electricity like a menu where you can order more generation and it just shows up.

Energy will be a serious constraint before the end of the decade. But the nearer-term obstacles are simpler and harder: permitting, build times, turbine and transformer backlogs, skilled labor, capital costs, and the hard limits of grid physics.

Ignore those constraints and you don’t get more electricity. You get cost overruns, schedule creep, and a widening gap between demand and deliverable capacity. …”