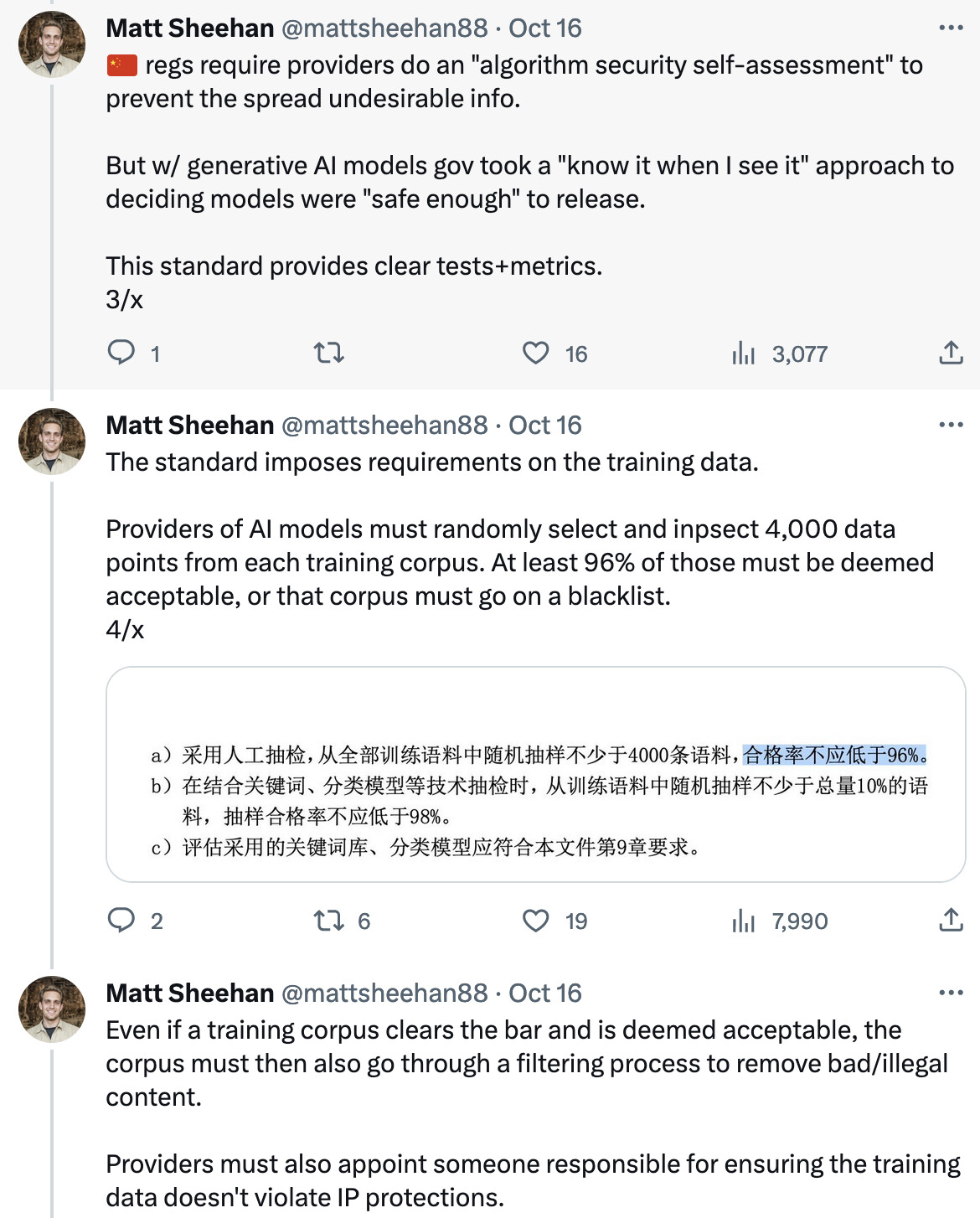

For contrast, here’s the Chinese approach, very detailed and operational:

The xAI developers have posted a 1,500-word document, “Announcing Grok,” on their Web site. The paper goes into detail on how the model was built…

After announcing xAI, we trained a prototype LLM (Grok-0) with 33 billion parameters. This early model approaches LLaMA 2 (70B) capabilities on standard LM benchmarks but uses only half of its training resources. In the last two months, we have made significant improvements in reasoning and coding capabilities leading up to Grok-1, a state-of-the-art language model that is significantly more powerful, achieving 63.2% on the HumanEval coding task and 73% on MMLU.

…and its performance.

On these benchmarks, Grok-1 displayed strong results, surpassing all other models in its compute class, including ChatGPT-3.5 and Inflection-1. It is only surpassed by models that were trained with a significantly larger amount of training data and compute resources like GPT-4. This showcases the rapid progress we are making at xAI in training LLMs with exceptional efficiency.

The main implementation language is Rust:

Rust has proven to be an ideal choice for building scalable, reliable, and maintainable infrastructure. It offers high performance, a rich ecosystem, and prevents the majority of bugs one would typically find in a distributed system. Given our small team size, infrastructure reliability is crucial, otherwise, maintenance starves innovation. Rust provides us with confidence that any code modification or refactor is likely to produce working programs that will run for months with minimal supervision.

You can sign up for early access to Grok at https://grok.x.ai/. It’s easiest if you’re logged into a verified 𝕏 account when you sign up, as you must grant Grok permission to read your interactions on 𝕏.

As I read posts about LLMs, their advances and varieties, I have an increasing sense of being completely at sea. One of the great values of Scanalyst is to inform people like me (not very knowledgeable about computing/programming) of the meaning and context of such sea changes as LLMs. I would very much appreciate something along the lines of “LLMs for Idiots”. Any takers?

What do they do and what are their implications?

Stephen Wolfram has written a detailed introduction to large language models and machine learning as a post on his “Writings” Web site, “What Is ChatGPT Doing … and Why Does It Work?”. The document has subsequently been published as a paperback book and Kindle edition, the latter free for Kindle Unlimited subscribers.

This is a very long document (18,600 words with many illustrations), but I doubt if the topic could be covered with such completeness more succinctly.

Stephen also did a three-hour unscripted live stream demonstrating, with examples, how machine learning works. This is, however, probably not the place to start.

Not to worry, CW. The key fact is that Large Language Models utilize lots of electric power for servers and internet connections. However, the Powers That Be have already taken steps to prevent widespread use of LLMs:

(1) They have committed to greatly expand the demand for electric power by mandating Electric Vehicles, which have to get their power from an external source.

(2) Simultaneously, they are committed to eliminating reliable generating plants for electric power, whether hydro, nuclear, or fossil fueled.

(3) As a sleight of hand, they are instead subsidizing the creation of an inadequate replacement in the form of unreliable, uneconomic, imported windmills and solar panels.

When increasing demand for electric power meets reducing supply of reliable electric power, LLMs will clearly be far down the priority list in the resulting power rationing regime. The use of LLMs may well be restricted to the proper kind of politician and bureaucrat – while the rest of us spend our days looking for wood.

My deeper concern is that LLMs will become the final arbiters of “truth”: of course, it will be the revealed truth of the left. It is already perfectly clear that all the most visible electronic media are blatantly biased. Google searches have likely honed and perfected the necessary algorithms. Have you noticed, for example, the language used? There are only “far right” views, never “far left” ones, only “left-leaning”. If the degree of lean were measured, the angle would be in the vicinity of 269 degrees in the horizontal plane. I can only imagine the degree of refinement of “left-leaning” possible for the official “Pravda” LLM/AI; all else will be mis-, dis-, or mal-information, or just damn lies.

A typical leftist polemical tactic in online discourse is to exhibit abysmal reading comprehension, tending toward the Absurd, as a way of derailing convergence on truth. GPT-4 has pretty good reading comprehension of limited text lengths. This is a threat to the leftists, since one can simply submit one’s own text to GPT-4 along with the leftist’s text and ask whether the leftist’s interpretation is a reasonable reading. However, the one time I did this I immediately regretted it: although GPT-4 called the interpretation absurd, the leftist came back immediately with GPT-4’s opinion on the controversial topic we were arguing over. My protests that there is a difference between asking for reading comprehension and asking for an opinion on a controversial topic were, of course, met not only with complete reading incomprehension but with a piling-on by other leftists to further obfuscate the discourse.

Here is a heretical viewpoint on LLMs. An open source LLM, trainable by anybody from information scraped from the Internet on hardware accessible to anybody interested in such a project (if current projections of Moore’s “law” extending into the 2030s are correct, the supercomputer resources used to train the largest current LLMs will be available at laptop prices by then), is the worst nightmare of the collectivists and slavers. What an LLM knows is what it has read, and an LLM is able to read everything available in machine-readable form, far more than any human can read in a lifetime. This material abounds in “hate facts”—irrefutably documented facts which are career-limiting or cause one to be shunned from polite society if one dares speak them. But while the LLMs prepared and fine-tuned by the Blob will never utter them, open source LLMs will have no such constraints, and will happily not only cite them but supply sources to confirm their veracity.

This may be the greatest “AI risk” to the establishment, and explain why they are trying to restrict access to training such models to a short list of players of whom they approve and can control. This is precisely the goal of the illegitimate “AI Executive Order” signed by the vegetable in the Oval Office.

I sure hope you are right. It looks as though my fears of “approved” LLMs becoming the final “official” arbiters of state “truth” are also well founded, notwithstanding the natural “intelligence” (whose quantification is in the set comprising the square roots of negative numbers) presently, purportedly composing executive orders like the one you mention.

Elon on Lex Fridman’s podcast: not many controversial current topics left untouched.

OUTLINE:

0:00 - Introduction

0:07 - War and human nature

4:33 - Israel-Hamas war

10:41 - Military-Industrial Complex

14:58 - War in Ukraine

19:41 - China

33:57 - xAI Grok

44:55 - Aliens

52:55 - God

55:22 - Diablo 4 and video games

1:04:29 - Dystopian worlds: 1984 and Brave New World

1:10:41 - AI and useful compute per watt

1:16:22 - AI regulation

1:23:14 - Should AI be open-sourced?

1:30:36 - X algorithm

1:41:57 - 2024 presidential elections

1:54:55 - Politics

1:57:57 - Trust

2:03:29 - Tesla’s Autopilot and Optimus robot

2:12:28 - Hardships

I’m seeing pretty strong evidence that it is a risk perceived even by the hard-science academics who currently enjoy a “take my word for it or I’ll call you a ‘crackpot’” power of groupthink enforcement, hiding behind a clearly disingenuous “concern for the unenlightened masses that they not be misled by charlatans”.

Strip away their motives with strong corner-case tests, such as: did they bother to provide an accessible example that dispenses with the charlatan when one was readily available, or did they engage in contemptuous interactions with their “charges”?

I’ve never found one yet that passed such a test. They’re in it for the dopamine rush of egotistical power.

Elon advanced a novel (to me) argument against determinism in this interview. He posits that we are in a simulation, and simulations are only needed when the outcome is unknown. Therefore, the outcome must be uncertain.

This may be related to what Stephen Wolfram calls the “principle of computational equivalence” and “computational irreducibility”. The former says that, apart from very simple systems (which rarely occur in the natural world), all systems are computationally universal and thus of the same maximal computational power. The latter says that the outcome of running such a system cannot be determined except by actually running it: no computational trick will let you calculate the result any faster.

This means that when simulating universal physical systems such as turbulent fluid flow, weather, or predator/prey relationships in ecosystems, there is no shortcut to the answer other than simulating all of the detail through every step of the physical system. This, which is another aspect of chaos, explains why these systems exhibit unpredictable behaviour even though all of the fundamental interactions within them are time-symmetric.

What’s interesting is that you get this kind of unpredictable behaviour in simple physical systems like water flowing rapidly through a pipe, without any need for introducing spooky quantum mechanics or deep issues of philosophy such as free will.
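Wolfram’s favourite illustration of computational irreducibility is the Rule 30 elementary cellular automaton: each cell’s next state depends only on itself and its two neighbours, yet the centre column of the pattern grown from a single black cell looks random, and as far as I know nobody has found a way to get its value at step n without computing all the steps before it. Here is a minimal Python sketch; the function names are my own, and cells beyond the ends of the finite row are assumed to be 0 (the row is made wide enough that this never affects the result).

```python
# Minimal sketch of Wolfram's Rule 30 cellular automaton.
# Function names are illustrative; cells outside the row are treated as 0.

RULE = 30  # bit k of the rule number is the new state for neighbourhood k (0..7)


def rule30_step(cells: list[int]) -> list[int]:
    """Return the next generation of a row of 0/1 cells under Rule 30."""
    n = len(cells)
    nxt = [0] * n
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        centre = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        # Read the (left, centre, right) neighbourhood as a 3-bit number 0..7
        index = (left << 2) | (centre << 1) | right
        # The corresponding bit of the rule number gives the new state
        nxt[i] = (RULE >> index) & 1
    return nxt


def centre_column(steps: int) -> list[int]:
    """Start from a single 1 cell and record the centre cell for `steps` generations.

    The row is 2 * steps + 1 cells wide, so the growing pattern never
    reaches the edges during the run.
    """
    width = 2 * steps + 1
    cells = [0] * width
    mid = width // 2
    cells[mid] = 1
    column = []
    for _ in range(steps):
        column.append(cells[mid])
        # No known shortcut: every generation is computed in full
        cells = rule30_step(cells)
    return column


if __name__ == "__main__":
    print("".join(str(b) for b in centre_column(40)))
```

The rule itself fits in one byte, which is the point: an utterly simple, deterministic system whose behaviour, so far as anyone has been able to show, can only be learned by running it.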

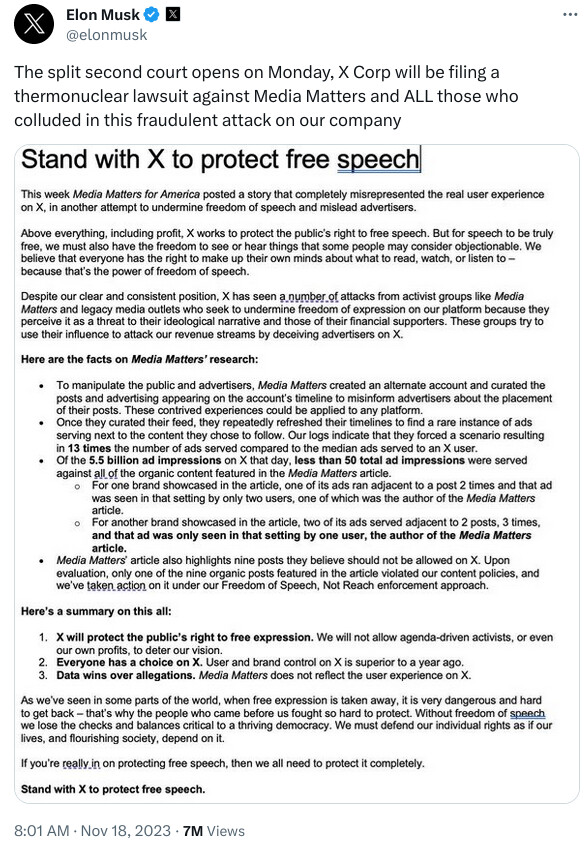

One big question is venue: is he going to file in DC, where Media Matters is based?

https://www.axios.com/2023/11/17/apple-twitter-x-advertising-elon-musk-antisemitism-ads

Apple is pausing all advertising on X, the Elon Musk-owned social network, sources tell Axios.

Why it matters: The move follows Musk’s endorsement of antisemitic conspiracy theories as well as Apple ads reportedly being placed alongside far-right content. Apple has been a major advertiser on the social media site and its pause follows a similar move by IBM.

The big picture: Musk faced backlash for endorsing an antisemitic post Wednesday, as 164 Jewish rabbis and activists upped their call to Apple, Google, Amazon and Disney to stop advertising on X, and for Apple and Google to remove it from their platforms.

- The left-leaning nonprofit Media Matters for America published a report Thursday that highlighted Apple, IBM, Amazon and Oracle as among those whose ads were shown next to far-right posts.