Before the age of ASCII (and other competitors for character set hegemony such as FIELDATA and EBCDIC [because the U.S. Army and IBM were of such significance they deserved their own character codes]), the five-bit Baudot code ruled the world. Invented by Émile Baudot in 1870 and patented in France in 1874, it was adopted as an international standard called the International Telegraph Alphabet No. 2 (ITA2) in 1924, although the Americans, of such significance they deserved their own character code, used a slightly modified version called American Teletypewriter code (US TTY). With only five bits per character, and hence only 32 combinations, these codes used two shifts, “Letters” and “Figures”, with dedicated characters to switch between them. The Letters shift gave upper case letters, and the Figures shift gave numbers and a limited set of punctuation marks.
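To make the two-shift scheme concrete, here is a minimal sketch of an encoder and decoder. It assumes the common convention of writing each code as a 5-bit integer with “bit 1” as the least significant bit, and it includes only the Figures characters that ITA2 and US TTY place in the same positions (digits and a handful of punctuation marks), since the two codes disagree about several others. The names `encode`, `decode`, `LETTERS`, `FIGURES`, and `SHARED` are purely illustrative, and the decoder assumes the receiver starts in Letters shift.

```python
# Illustrative ITA2 sketch: Letters/Figures shift handling.
# Assumptions: codes are 5-bit ints with "bit 1" as the least significant bit;
# FIGURES holds only characters that ITA2 and US TTY place identically.

LTRS = 0x1F  # Letters shift
FIGS = 0x1B  # Figures shift

# Characters with the same code in either shift.
SHARED = {" ": 0x04, "\r": 0x08, "\n": 0x02}

LETTERS = {
    "E": 0x01, "A": 0x03, "S": 0x05, "I": 0x06, "U": 0x07, "D": 0x09,
    "R": 0x0A, "J": 0x0B, "N": 0x0C, "F": 0x0D, "C": 0x0E, "K": 0x0F,
    "T": 0x10, "Z": 0x11, "L": 0x12, "W": 0x13, "H": 0x14, "Y": 0x15,
    "P": 0x16, "Q": 0x17, "O": 0x18, "B": 0x19, "G": 0x1A, "M": 0x1C,
    "X": 0x1D, "V": 0x1E,
}

FIGURES = {
    "3": 0x01, "-": 0x03, "8": 0x06, "7": 0x07, "4": 0x0A, ",": 0x0C,
    ":": 0x0E, "(": 0x0F, "5": 0x10, ")": 0x12, "2": 0x13, "6": 0x15,
    "0": 0x16, "1": 0x17, "9": 0x18, "?": 0x19, ".": 0x1C, "/": 0x1D,
}


def encode(text: str) -> list[int]:
    """Encode text, sending LTRS/FIGS only when the shift must change."""
    codes: list[int] = []
    shift = None  # receiver's shift state is unknown until we send one
    for ch in text.upper():
        if ch in SHARED:
            codes.append(SHARED[ch])
            continue
        if ch in LETTERS:
            wanted, table = LTRS, LETTERS
        elif ch in FIGURES:
            wanted, table = FIGS, FIGURES
        else:
            raise ValueError(f"no code for {ch!r} in this subset")
        if shift != wanted:
            codes.append(wanted)
            shift = wanted
        codes.append(table[ch])
    return codes


def decode(codes: list[int]) -> str:
    """Decode 5-bit codes, assuming the receiver starts in Letters shift."""
    letters = {v: k for k, v in LETTERS.items()}
    figures = {v: k for k, v in FIGURES.items()}
    shared = {v: k for k, v in SHARED.items()}
    shift = LTRS
    out = []
    for code in codes:
        if code in (LTRS, FIGS):
            shift = code
        elif code in shared:
            out.append(shared[code])
        else:
            table = letters if shift == LTRS else figures
            out.append(table.get(code, "\ufffd"))  # position not in this subset
    return "".join(out)


print(encode("CQ 73"))
print(decode(encode("RYRY 1984.")))
```

Sending a shift character only when the shift actually changes saves line time. Some receivers also drop back to Letters after a space (“unshift on space”), a convention a real implementation would have to match on both ends.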

But how was this code to be sent over telegraph lines, telephone connections, and radio links? Well, if there’s anything better than a world standard, it’s a whole bucket filled with different standards, and the vagaries of history, technology, and technological imperialism resulted in a plethora of variants: rates of 45.5 or 50 bits per second (baud), multiple choices of frequency-shift keying for one and zero bits, half- and full-duplex operation, party line connections, and conventions for establishing and breaking connections.
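The baud rate fixes how long each element on the line lasts. The sketch below frames one 5-bit character the way start-stop teleprinters typically do, assuming one start bit (space), the five data bits with bit 1 sent first, and a stop element at mark. The stop length varied between equipment, so it is a parameter here; the 1.5 bit-time default is only one common choice. The “45.5” rate is more precisely 45.45 baud, a 22 ms bit. The names `frame` and `chars_per_second` are hypothetical.

```python
# Illustrative sketch of start-stop framing for one 5-bit character.
# Assumptions: 1 start bit (space), 5 data bits sent bit 1 (LSB) first,
# and a stop element of stop_units bit times (mark); 1.5 is just a default.


def frame(code: int, baud: float, stop_units: float = 1.5) -> list[tuple[int, float]]:
    """Return (level, duration_ms) pairs; level 1 = mark, 0 = space."""
    if not 0 <= code < 32:
        raise ValueError("codes must fit in 5 bits")
    bit_ms = 1000.0 / baud
    elements = [(0, bit_ms)]  # start bit: line drops to space
    for i in range(5):
        elements.append(((code >> i) & 1, bit_ms))  # bit 1 goes first
    elements.append((1, bit_ms * stop_units))  # stop: line rests at mark
    return elements


def chars_per_second(baud: float, stop_units: float = 1.5) -> float:
    """Maximum characters per second when sending continuously."""
    return baud / (1 + 5 + stop_units)


for rate in (45.45, 50.0):
    print(f"{rate} baud: {1000 / rate:.1f} ms/bit, "
          f"{chars_per_second(rate):.2f} chars/s")
```

With these assumptions a character takes 7.5 bit times, so 45.45 baud yields a little over six characters per second and 50 baud about six and two-thirds.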

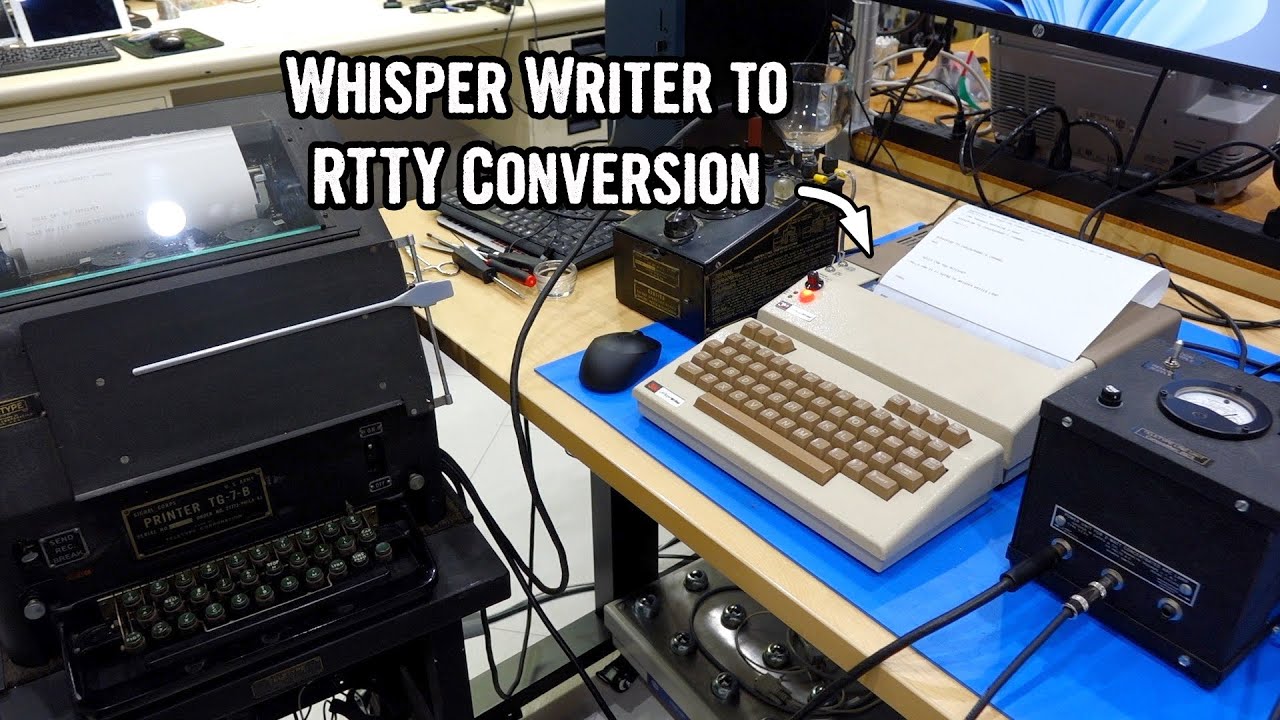

When all of this was done in hardware, it was extremely difficult to cope with these incompatibilities, but in the age of software-defined-everything, it’s a “simple matter of programming”™ to get it working. Right? Right? The intrepid restorers wade into this swamp in an attempt to make a 1984 thermal-paper Telex machine talk to vintage mechanical teletypes and present-day amateur radio radioteletype (RTTY) gear.