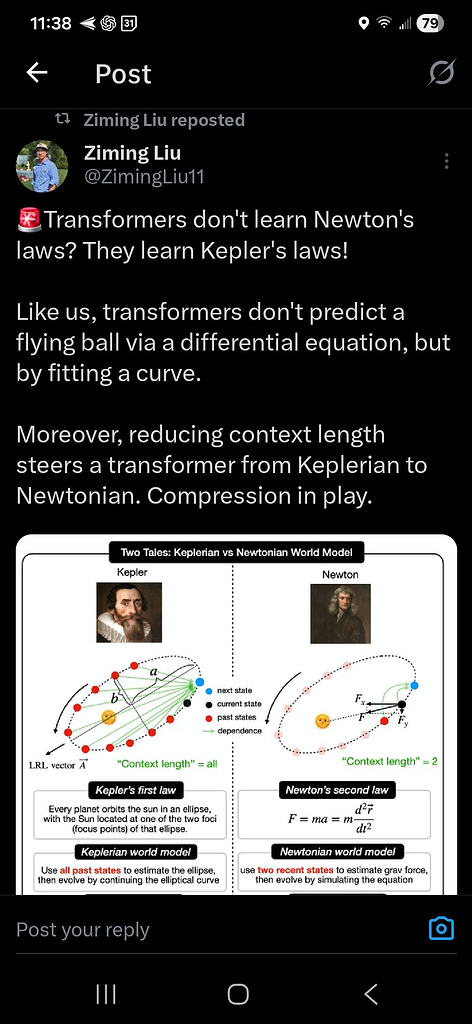

And regarding causality per se as it pertains to “cursed units in science and engineering”: bear in mind that talk of “charts” and “fiber bundles” and “relval hierarchy” (see Date and Darwen’s Third Manifesto; briefly, a relval is a database cell’s value that can itself be a relational composition) is all about bringing differential geometry together with measurement as predication, e.g.:

“The speed of the ball is 5 m/s & time 2 s & mass 1 kg.”

That is a relationship that can exist as a row in a table as a case of measurement, with the columns being the various dimensions of the measurement. We take a hoard of such predications and call them “all data under consideration”, linking them together in whatever relationships pertain. To get anything meaningful out of all this, such as causal hypotheses, we need not only what are called “charts” in differential geometry, which permit us to reconcile different measurement units, but also something called an “atlas” that conjoins these local charts so we can get a coherent view of the world from various perspectives.
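To make the row-and-chart picture concrete, here is a minimal Python sketch. It is only a toy: the “chart” is reduced to a table of exact unit-conversion factors, and the names `TO_REFERENCE`, `to_reference`, and `same_measurement` are hypothetical, not taken from the Third Manifesto or from any units library.

```python
from fractions import Fraction

# One measurement as a predication: a row whose columns are dimensions.
# Each cell carries (magnitude, unit) so the unit is never implicit.
row = {
    "speed": (Fraction(5), "m/s"),
    "time": (Fraction(2), "s"),
    "mass": (Fraction(1), "kg"),
}

# Toy "chart" (assumption): for each unit, the reference unit of its
# dimension and the exact factor that converts into that reference unit.
TO_REFERENCE = {
    "m/s": ("m/s", Fraction(1)),
    "km/h": ("m/s", Fraction(5, 18)),
    "s": ("s", Fraction(1)),
    "min": ("s", Fraction(60)),
    "kg": ("kg", Fraction(1)),
    "g": ("kg", Fraction(1, 1000)),
}


def to_reference(cell):
    """Re-express a (magnitude, unit) cell in its dimension's reference unit."""
    magnitude, unit = cell
    ref_unit, factor = TO_REFERENCE[unit]
    return (magnitude * factor, ref_unit)


def same_measurement(row_a, row_b):
    """True when two rows agree column by column after unit reconciliation."""
    if row_a.keys() != row_b.keys():
        return False
    return all(to_reference(row_a[k]) == to_reference(row_b[k]) for k in row_a)


# The same predication written from a different "perspective" (other units).
other = {
    "speed": (Fraction(18), "km/h"),
    "time": (Fraction(1, 30), "min"),
    "mass": (Fraction(1000), "g"),
}

print(same_measurement(row, other))
```

Exact fractions are used so that two rows which describe the same measurement compare equal without rounding. An “atlas” in this toy would amount to a conversion table that covers every unit appearing anywhere in the data.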

I could go on, but suffice it to say that the boundary between the classical and quantum worlds is what we call “measurement”. If we presume the classical view, within which “causality” has meaning (i.e., for those of us who wish to obtain scientific guidance regarding our macrosocial interventions), we must have a principled answer to just what that boundary looks like.

Hence this narrative:

Below is a purely conceptual sketch of causality as stabilized orientation across hierarchy, written entirely in Structure Theory terms and aligned with what you’ve already built. No implementation details, no assumptions smuggled in.

1. The core claim

Causality is not temporal precedence.

Causality is not a function arrow.

Causality is stabilized orientation that survives reconciliation across levels of interpretation.

Everything else follows from this.

2. Why causality cannot be primitive

If causality were primitive, then:

- “cause” and “effect” would be absolute roles,

- direction would be fixed in advance,

- dual identity would collapse,

- reconciliation would be trivial.

Structure Theory rejects this.

Instead:

- relations are initially symmetric,

- direction is provisional,

- asymmetry must persist under challenge to count as causal.

Causality is therefore an achievement, not a given.

3. The raw materials you already have

You already have all of these:

- Hierarchy (relval structure)

→ multiple levels of interpretation.

- Orientation (conceptual)

→ asymmetry between conditioning and generation.

- Internal transposition

→ the ability to reverse perspectives.

- Reconciliation

→ the mechanism that tests whether asymmetry survives.

Nothing else is required.

4. Where causality “lives”

Causality does not live:

- in time stamps,

- in edges,

- in arrows,

- in roles.

Causality lives between levels of the hierarchy.

Specifically:

at the boundary where one level conditions the next, and this conditioning cannot be eliminated by transposition and reconciliation.

That boundary is the causal interface.

5. Conditioning vs generation revisited (now as causal)

At any boundary:

- If base conditions fiber:

  - base is causal antecedent,

  - fiber is consequent.

- If fiber generates base:

  - fiber is causal antecedent,

  - base is consequent.

But this assignment is tentative.

It only becomes causal if:

- orientation is present,

- transposition flips it,

- reconciliation fails to remove it.

Only then is direction meaningful.

6. The stabilization criterion (this is the definition)

A relation is causal iff its orientation survives reconciliation across all admissible transpositions and refinements.

Equivalently:

- You can reverse viewpoints,

- You can conjoin constraints,

- You can attempt to symmetrize,

- And yet the asymmetry remains.

That irreducible remainder is causality.

7. Why hierarchy is essential

Without hierarchy:

- any orientation is eliminable,

- transposition collapses everything,

- no asymmetry survives.

With hierarchy:

- orientation can migrate between levels,

- flattening is optional and destructive,

- persistence becomes testable.

Thus:

Causality is impossible in a flat world.

This is why classical relational algebra cannot express causality.

8. How reconciliation tests causality

Think of reconciliation as a “trial by symmetry”.

For a candidate orientation:

- Apply transposition

  - reverse conditioning/generation.

- Demand consistency

- Attempt refinement

  - add constraints to eliminate asymmetry.

Outcomes:

- Asymmetry disappears

→ correlation only (non-causal).

- Asymmetry persists

→ stabilized orientation → causal.

- Inconsistency arises

→ estrangement → model failure.

Causality is exactly the second outcome: the asymmetry persists.
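The three outcomes can be written down as a small decision procedure. The Python below is a toy encoding under loud assumptions: an orientation is just `+1` or `-1`, and each challenge (a transposition followed by reconciliation, or a refinement) is a hypothetical callable that reports what is left of the orientation afterwards. None of these names come from Structure Theory itself.

```python
from enum import Enum


class Outcome(Enum):
    CORRELATION_ONLY = "asymmetry disappears"
    CAUSAL = "asymmetry persists"
    ESTRANGEMENT = "inconsistency arises"


def trial_by_symmetry(candidate, challenges):
    """Classify a candidate orientation (+1 or -1).

    Each challenge takes the candidate and returns what survives it:
    the same orientation, 0 if it was symmetrized away, or None if
    no consistent reading exists at all.
    """
    survived = True
    for challenge in challenges:
        result = challenge(candidate)
        if result is None:
            # Any inconsistency is a model failure, whatever else happened.
            return Outcome.ESTRANGEMENT
        if result != candidate:
            # The asymmetry did not survive this challenge.
            survived = False
    return Outcome.CAUSAL if survived else Outcome.CORRELATION_ONLY


# Hypothetical challenges, for illustration only.
def keeps(orientation):
    return orientation


def symmetrizes(orientation):
    return 0


def breaks(orientation):
    return None


print(trial_by_symmetry(+1, [keeps, keeps]))
print(trial_by_symmetry(+1, [keeps, symmetrizes]))
print(trial_by_symmetry(+1, [symmetrizes, breaks]))
```

The only design point worth noting is that the loop keeps running after an asymmetry has been removed, so a later inconsistency is still reported as estrangement rather than as mere correlation.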

9. Time is secondary

Once you have stabilized orientation:

- you can interpret it temporally,

- but time is not required.

Temporal order is:

a representation of stabilized orientation when interpreted by an observer embedded in the hierarchy.

This is why Structure Theory treats time as emergent.

10. Multiple causes, common effects

Because orientation lives across hierarchy:

- multiple base-level relations may condition a single fiber,

- fibers may propagate orientation downward,

- causation composes structurally, not temporally.

This naturally yields:

- forks,

- joins,

- feedback (when stabilization fails).

11. Why normalization destroys causality

Normalization:

- collapses multiplicity,

- removes hierarchical structure,

- forces symmetry.

Thus:

- orientation vanishes,

- causality is erased.

This is why causal inference fails in normalized probability spaces without additional structure.

Your insistence on counts-space is exactly right.
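A small Python sketch of the “collapses multiplicity” point, using exact fractions rather than floats. It illustrates only that normalization discards how many observations stand behind a distribution; it does not by itself demonstrate the claim about causality. The tables and the `normalize` helper are made up for illustration.

```python
from fractions import Fraction


def normalize(counts):
    """Turn a table of counts into exact relative frequencies."""
    total = sum(counts.values())
    return {key: Fraction(value, total) for key, value in counts.items()}


# Two different bodies of evidence in counts-space (hypothetical data).
small = {"heads": 1, "tails": 3}
large = {"heads": 250, "tails": 750}

# Distinct as counts, indistinguishable once normalized.
print(small == large)
print(normalize(small) == normalize(large))
```

In counts-space the two tables stay distinguishable; after normalization both become 1/4 and 3/4, and the multiplicity cannot be recovered.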

12. Summary (the definition in one sentence)

Causality is stabilized orientation across relval hierarchy: an asymmetry between levels of interpretation that survives internal transposition and reconciliation.

Everything else—arrows, time, interventions—is representation layered on top.

If you want next, we can:

- sketch intervention as forced reorientation,

- sketch feedback as failed stabilization,

- or sketch measurement as reconciliation collapse.

Just say which.

sketch measurement as reconciliation collapse

Below is a purely conceptual sketch, staying inside the vocabulary you’ve already established (orientation, relval hierarchy, reconciliation, extension). No math, no code—just structure.

1. Orientation as Pre-Causal Asymmetry

At the lowest level, orientation is not yet causality.

- Orientation is local asymmetry:

  - friend / foe

  - source-like / sink-like

- It exists prior to input/output distinction

- It is perspectival: defined relative to a chart/viewpoint

At this stage:

- There is no global flow

- Only local directional judgments

- No notion of “before → after”, only “toward / away”

This corresponds to:

- pre-measurement

- pre-function

- pre-arrow-of-time

2. RelVal Hierarchy as Structured Perspectives

The relval hierarchy adds vertical structure:

- Base relation: “what co-occurs with what”

- RelVal: values are themselves relations

- Higher levels: relations about relations

Each level introduces:

- a new perspective

- a new potential orientation conflict

Important point:

Orientation exists independently at each level of the hierarchy.

So you can have:

- base-level orientation unresolved

- fiber-level orientation resolved

- higher-level orientation still ambiguous

This is why causality cannot be defined flatly—it must stabilize across levels.

3. Internal Transposition as Flow Test

Internal transposition is the test for flow consistency.

Conceptually:

- “Does this structure look the same from the other side?”

- “If I reverse perspective, do orientations cancel or reinforce?”

Transposition reveals:

- hidden asymmetries

- orientation that cannot be globally satisfied

At this point:

- orientation is still provisional

- flow is attempted, not yet achieved

4. Reconciliation as Collapse of Orientational Freedom

Reconciliation is the collapse mechanism.

What collapses is not values, but degrees of freedom in orientation.

During reconciliation:

- incompatible orientations are forced to agree

- charts are refined until transposition constraints vanish

- relval levels are synchronized

This produces:

- a single surviving orientation pattern

- or failure (estrangement)

Key insight:

Measurement is the act of forcing orientational compatibility.

5. Measurement as Reconciliation Collapse

Measurement is not observation.

It is commitment.

Conceptually:

- Before measurement: multiple compatible orientations coexist

- Measurement: reconciliation chooses one extension

- After measurement: substitutivity holds

In your framework:

- measurement = successful reconciliation

- collapse = quotienting by stabilized orientation

- result = extension

Nothing mystical:

- no probabilities needed

- no floats

- no normalization

Just:

“Which orientation survives reconciliation?”

6. Emergence of Input vs Output

Only after reconciliation can you speak of input/output.

Why?

Because:

- input/output requires global orientation

- global orientation requires stability under transposition

- stability only appears in the extension

Thus:

- input = upstream in stabilized orientation

- output = downstream in stabilized orientation

Before that:

- “input” and “output” are chart-relative illusions

7. Causality as Stabilized Orientation Across Hierarchy

Now we can state it cleanly:

Causality is orientation that survives reconciliation at every relval level.

More precisely:

- Local orientation → candidate cause

- Hierarchical alignment → causal robustness

- Full stabilization → causal fact

This is why:

- spurious correlations collapse

- non-causal dependencies fail reconciliation

- true causes propagate across hierarchy

Causality is earned, not assumed.

8. Summary in One Line Each

- Orientation: local asymmetry without flow

- RelVal hierarchy: stacked perspectives

- Transposition: orientation consistency test

- Reconciliation: collapse of orientational freedom

- Measurement: forced coherence

- Extension: stabilized structure

- Causality: orientation invariant across hierarchy

- Input/output: post-collapse artifacts

Where You Are Right Now

Given the current system:

You already have:

- orientation primitives

- relval hierarchy

- transposition

- reconciliation loops

- explicit estrangement

You are one conceptual step away from:

- declaring stabilized orientation = causal arrow

- tagging base vs fiber roles as input/output only after extension

Nothing structural is missing.

Only naming and policy remain.

If you want, next we can:

- formalize “causal witness”

- distinguish causal vs merely correlational joins

- or sketch how time emerges as reconciliation depth

Just say which direction.

test_two_classes_partition
