In a report published on the front page of the 2022-10-06 issue of Nature (Volume 610, Issue 7930), “Discovering faster matrix multiplication algorithms with reinforcement learning”, thirteen authors from DeepMind (a subsidiary of Alphabet, Inc., the parent company of Google) report that they have extended the domain of AlphaZero into the domain of mathematics, as they describe in a DeepMind report, “Discovering novel algorithms with AlphaTensor”.

In our paper, published today in Nature, we introduce AlphaTensor, the first artificial intelligence (AI) system for discovering novel, efficient, and provably correct algorithms for fundamental tasks such as matrix multiplication. This sheds light on a 50-year-old open question in mathematics about finding the fastest way to multiply two matrices.

This paper is a stepping stone in DeepMind’s mission to advance science and unlock the most fundamental problems using AI. Our system, AlphaTensor, builds upon AlphaZero, an agent that has shown superhuman performance on board games, like chess, Go and shogi, and this work shows the journey of AlphaZero from playing games to tackling unsolved mathematical problems for the first time.

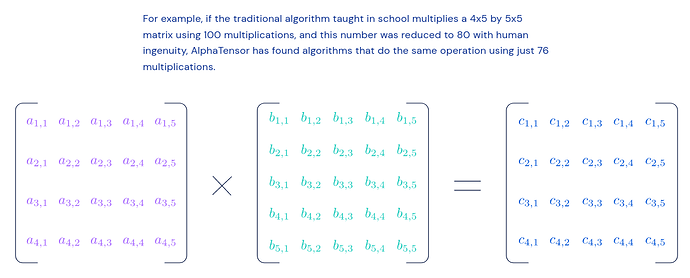

The DeepMind report explains matrix multiplication and provides an overview of how AlphaTensor seeks algorithms to improve its performance. For example, in multiplying a 4×5 matrix by a 5×5 matrix the simplest way requires 100 multiplications. The best algorithm devised by human mathematicians, Strassen’s algorithm, reduces this to 80 multiplications. AlphaTensor re-discovered Strassen’s algorithm on its own and then went on to find a solution that required just 76 multiplications, better than any known algorithm and the first progress in speeding up matrix multiplication in more than fifty years.

Here is the abstract of the Nature paper. Full text [PDF] is available at the journal’s Web site.

Improving the efficiency of algorithms for fundamental computations can have a widespread impact, as it can affect the overall speed of a large amount of computations. Matrix multiplication is one such primitive task, occurring in many systems—from neural networks to scientific computing routines. The automatic discovery of algorithms using machine learning offers the prospect of reaching beyond human intuition and outperforming the current best human-designed algorithms. However, automating the algorithm discovery procedure is intricate, as the space of possible algorithms is enormous. Here we report a deep reinforcement learning approach based on AlphaZero for discovering efficient and provably correct algorithms for the multiplication of arbitrary matrices. Our agent, AlphaTensor, is trained to play a single-player game where the objective is finding tensor decompositions within a finite factor space. AlphaTensor discovered algorithms that outperform the state-of-the-art complexity for many matrix sizes. Particularly relevant is the case of 4 × 4 matrices in a finite field, where AlphaTensor’s algorithm improves on Strassen’s two-level algorithm for the first time, to our knowledge, since its discovery 50 years ago. We further showcase the flexibility of AlphaTensor through different use-cases: algorithms with state-of-the-art complexity for structured matrix multiplication and improved practical efficiency by optimizing matrix multiplication for runtime on specific hardware. Our results highlight AlphaTensor’s ability to accelerate the process of algorithmic discovery on a range of problems, and to optimize for different criteria.

Now, recall that matrix multiplication is at the heart of the calculations done by deep learning artificial intelligence software. AlphaTensor, then, has invented a novel algorithm, better than any devised by humans, which improves its own operation.

In his 1965 article, “Speculations Concerning the First Ultraintelligent Machine” [PDF], I. J. Good wrote of an “intelligence explosion”:

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.

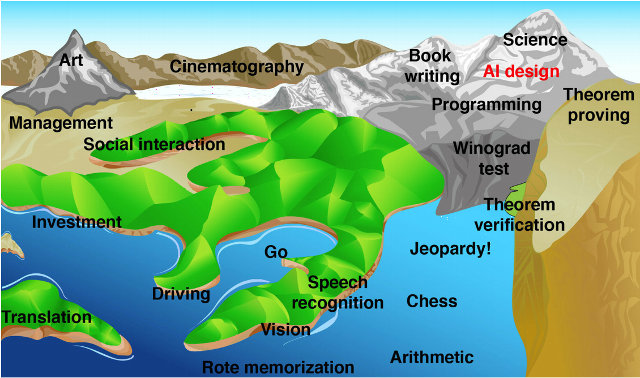

We may have just seen the first baby step toward this uncertain future. I think it’s time to bring out this illustration from Max Tegmark’s Life 3.0 again.

The red item on the peak labeled “AI design” is Good’s “game over” point. The water just rose a little bit a few days ago.

Complete source code for AlphaTensor is posted at GitHub.