Complete source code and data sets are available on GitHub.

Overview

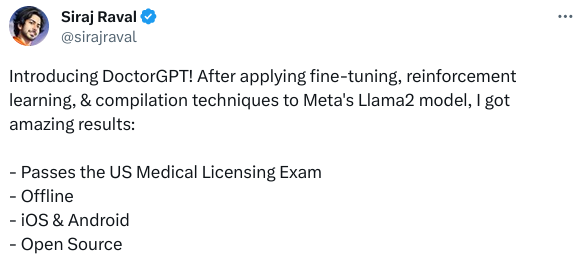

DoctorGPT is a Large Language Model that can pass the US Medical Licensing Exam. This is an open-source project with a mission to provide everyone with their own private doctor. DoctorGPT is a version of Meta’s Llama 2 7-billion-parameter Large Language Model that was fine-tuned on a Medical Dialogue Dataset, then further improved using Reinforcement Learning & Constitutional AI. Since the model is only 3 Gigabytes in size, it fits on any local device, so there is no need to pay for an API to use it. It’s free, built for offline use, which preserves patient confidentiality, and it’s available on iOS, Android, and Web. Pull requests for feature additions and improvements are encouraged.

The video provides a worked example of fine-tuning a generic large language model for a specific knowledge domain (or viewpoint), training it further by reinforcement learning, and deploying the trained model on a stand-alone device that needs neither an Internet connection nor access to a commercial API to process queries.

Here is information on the United States Medical Licensing Examination, which the trained DoctorGPT model (barely) passed.

Note that, in order to run stand-alone on a mobile device, this model was built on the smallest Llama 2 model, which has 7 billion parameters. Llama 2 models are available with up to 70 billion parameters: how would DoctorGPT perform if built atop the big one?
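
To get a feel for why the 7 billion parameter model suits a phone while the 70 billion parameter one does not, here is a rough back-of-the-envelope sketch of weight storage at different precisions. The source does not say how the 3 Gigabyte figure is reached; the assumption here is that the weights are quantized to a few bits each, and the helper `model_size_gb` is a hypothetical name used only for illustration. It counts weights alone and ignores file metadata and runtime memory.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes).

    Counts only the weights: parameters * bits per weight / 8 bits per byte.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Parameter counts as quoted in the text; the bit widths are assumed,
# commonly used precisions, not figures taken from the project.
models = [("Llama 2 7B", 7e9), ("Llama 2 70B", 70e9)]

for name, n_params in models:
    for bits in (16, 8, 4, 3):
        size = model_size_gb(n_params, bits)
        print(f"{name} at {bits}-bit: ~{size:.1f} GB")
```

Under these assumptions, a 7 billion parameter model lands near 3 Gigabytes only when stored at roughly 3 to 4 bits per weight, and a 70 billion parameter model at the same precision would need about ten times as much, which is well beyond what most mobile devices can hold.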