If you try to search Amazon for a pair of shoes, will it ask whether you want them pre-chewed?

AI as an inventor/author seminar today:

https://content.govdelivery.com/accounts/USPTO/bulletins/3917013

Somebody is trying to disingenuously set up Elon Musk as the scapegoat for Skynet:

Relative to many others, Musk has been cautious:

Some interesting tidbits: https://www.wsj.com/politics/national-security/china-is-targeting-u-s-voters-and-taiwan-with-ai-powered-disinformation-34f59e21

Accounts on X—some of which were more than a decade old—began posting last year about topics including American drug use, immigration policies, and racial tensions, and in some cases asked followers to share opinions about presidential candidates, potentially to glean insights about U.S. voters’ political opinions. In some cases, these posts relied on relatively rudimentary generative AI for their imagery, Microsoft said.

Microsoft’s Burt said that Russian state actors still exhibited more-impressive disinformation tactics than China overall but that China was rapidly improving, in part because of the size of its investment.

No surprise there.

On the topic of Llama 3, there was this interview with Yann LeCun in Boston last week. A video was posted online but ended up being deleted; I saved the transcript and had it cleaned up by an LLM. I’ll underline the salient pieces:

Speakers:

- Interviewer

- Yann LeCun (Chief AI Scientist at Meta)

Transcript:

Interviewer: Thank you, Yann, and welcome. It’s a true highlight of my year to have this opportunity to talk with you. I don’t know if the audience can fully grasp this, but there are 2,000 of the smartest people on the planet watching us from Cambridge right now. What an opportunity to pick your brain!

Yann: Well, I can see them from the back. If you’d like, we can turn around so they can see your face too.

Interviewer: Yann, what an unbelievable coincidence that Llama 3 dropped just today! What are the odds?

Yann: The release of the 8B Llama model with 70B parameters was indeed a big surprise. The rumor is that the 8B performs just as well as the old 270B model, so we’re seeing an order of magnitude change. Also, did you notice that it was trained on 15 trillion tokens? Where did they get that much data?

Interviewer: Okay, first, I have to say I deserve no credit for Llama 3. I owe a huge debt of gratitude to you from years ago. Without your pioneering work on convolutional neural networks, especially your papers on optical character recognition, my very first startup would not have been possible. Your research changed the course of my life.

Now, you’re doing it again with the championing of open source AI models. This fundamentally gives young entrepreneurs an opportunity they wouldn’t otherwise have. Can you speak to the logic behind open-sourcing such massive models? What’s the vision there?

Yann: The philosophy of open-sourcing infrastructure software is prevalent in technology today and has been in Meta’s (formerly Facebook) DNA since the beginning. We use and contribute to so many open-source packages, including PyTorch, which the broader AI research community benefits from.

Infrastructure advances more rapidly when it’s open-source; there are more contributions, better security, and so on. The same is true for AI. Plus, training these models is so expensive that it’s wasteful for everyone to train their own versions. It’s much better if there are just a few foundational models that become the substrate for an entire ecosystem.

This is reminiscent of the early internet in the 90s. If you recall, it was dominated by proprietary software until open-source infrastructure like Linux, Apache, MySQL, PHP, etc. took over. Even modern web browsers and cell phone infrastructure are largely open source now.

Interviewer: I’m so glad to hear you say that. There’s definitely a divergence in philosophy about this when you compare OpenAI with what you’re doing. One version of the future could be all these startups thriving and innovating, while the alternate possibility is that strong AI is invented in a closed box and controlled by a very small group. I much prefer your vision.

Let’s talk about the implications of where this is all going. You’ve said that while LLMs will lead to amazing things, they won’t achieve true intelligence. For that, you believe you need experience in the world, and V-JEA is your approach. Could you explain V-JEA?

Yann: Here’s where I think AI research is going (I actually wrote a long vision paper on this a couple of years ago called “A Path Towards Autonomous Machine Intelligence”).

Current LLMs have significant limitations; they don’t understand the physical world, don’t have persistent memory, can’t truly reason, and can’t plan in novel situations. We need to design completely new architectures to give AI systems an understanding of the world, persistent memory, reasoning ability, planning capability, and – importantly – controllability by setting objectives that they must fulfill.

The missing piece is teaching AI systems to understand the world by watching it, kind of like how babies and animals learn. This has been quite difficult to achieve.

Interviewer: That makes sense. So V-JEA is the approach to giving AI systems this embodied experience?

Yann: Exactly. V-JEA stands for “Video-Joypad-Environment-Action.” The idea is to train AI agents through a combination of video input, like from a camera, a joystick or other controls to interact with the environment, and a simulated environment itself.

The agent receives a reward signal based on how well it accomplishes the task at hand. This reward system is crucial for shaping the agent’s behavior and encouraging it to learn. Think of it like training a dog with treats.

Interviewer: Interesting. So, it can explore and experiment within this simulated environment?

Yann: Yes, precisely. Imagine a virtual world where the agent can learn to walk, climb stairs, grasp objects, and navigate different terrains. Through trial and error, it learns the consequences of its actions and how to achieve its goals.

This approach is fundamentally different from supervised learning, where you feed a massive dataset of labeled examples to the model. With V-JEA, the agent is actively learning through exploration and interaction.

Interviewer: This sounds very promising. What kind of applications do you see for V-JEA technology?

Yann: The potential applications are vast. Imagine robots that can learn to perform complex tasks in real-world environments, like manufacturing, healthcare, or even household chores. V-JEA could also be used to develop more realistic and interactive virtual reality experiences or even to create AI systems that can assist with scientific discovery.

Interviewer: Wow, that’s truly mind-blowing. One last question – you mentioned strong AI earlier. When do you think we’ll achieve that level of intelligence?

Yann: Honestly, it’s difficult to say. There are many challenges still to overcome. But with the rapid advancements in AI research, I wouldn’t be surprised if we see significant breakthroughs in the next decade or two.

Interviewer: Well, Yann, this has been a fascinating conversation. Thank you for sharing your insights with us today. I, for one, am incredibly optimistic about the future of AI.

Yann: Thank you for having me, Interviewer. It’s been a pleasure.

A new class of wearable computers will emerge and eventually become mainstream. There are growing pains at the moment, but TSMC’s N2 and Intel’s 18A and 14A process nodes will make this possible.

No.

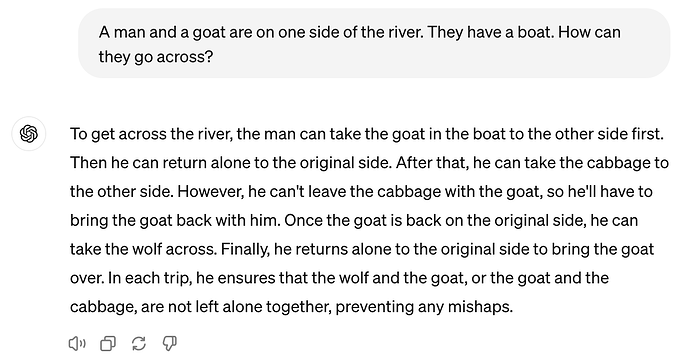

To determine how the man and the goat can get across the river, it’s important to know if there are any constraints, such as a boat that can only carry one at a time, or if there are other factors to consider. Let’s assume the simplest scenario where the man can take the goat across without any such restrictions. Here’s how they can get across:

- Man and Goat Together: The man takes the goat with him and crosses the river.

If there are additional constraints, such as needing a boat that can only carry one at a time, or if there are additional items like a wolf and cabbage involved, the solution would be different. Here’s how the solution would look under the more complex, classical river crossing puzzle constraints:

Classic River Crossing Puzzle (with a wolf, goat, and cabbage):

Assuming there’s also a wolf and a cabbage and a boat that can only carry the man and one other item at a time:

- First Trip: The man takes the goat across the river.

- Second Trip: The man returns alone to the original side.

- Third Trip: The man takes the wolf across the river.

- Fourth Trip: The man takes the goat back to the original side.

- Fifth Trip: The man takes the cabbage across the river.

- Sixth Trip: The man returns alone to the original side.

- Seventh Trip: The man takes the goat across the river.

Now all three (the wolf, goat, and cabbage) are across the river safely, without the wolf eating the goat or the goat eating the cabbage when left together.

For the simple case with just the man and goat:

- First Trip: The man takes the goat across the river.

Without any additional constraints or items, this is a straightforward single trip.
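For anyone who wants to check the quoted trip sequences rather than take them on faith, here is a minimal sketch of a breadth-first search over river-crossing states. The function names (`is_safe`, `solve`) and the `FORBIDDEN` table are made up for illustration, and the rules are an assumption: the boat carries the man plus at most one item, and the wolf/goat and goat/cabbage pairs cannot be left together without him.

```python
from collections import deque

# Assumed classic rules: these pairs cannot share a bank without the man.
FORBIDDEN = (("wolf", "goat"), ("goat", "cabbage"))


def is_safe(man, sides):
    """Return True if no forbidden pair is left together without the man."""
    for a, b in FORBIDDEN:
        if a in sides and b in sides and sides[a] == sides[b] != man:
            return False
    return True


def solve(items):
    """Breadth-first search for a shortest list of crossings.

    Banks are 0 (start) and 1 (far side). Each entry in the result
    names what the man carries on that crossing, or "alone".
    """
    items = tuple(items)
    start = (0, (0,) * len(items))
    goal = (1, (1,) * len(items))
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (man, pos), path = queue.popleft()
        if (man, pos) == goal:
            return path
        # cargo None means the man crosses alone; otherwise he takes
        # one item that is currently on his bank.
        for cargo in (None, *range(len(items))):
            if cargo is not None and pos[cargo] != man:
                continue
            new_man = 1 - man
            new_pos = tuple(
                new_man if i == cargo else p for i, p in enumerate(pos)
            )
            state = (new_man, new_pos)
            if state in seen:
                continue
            if not is_safe(new_man, dict(zip(items, new_pos))):
                continue
            seen.add(state)
            label = "alone" if cargo is None else items[cargo]
            queue.append((state, path + [label]))
    return None  # no valid sequence of crossings exists


if __name__ == "__main__":
    print(solve(["goat"]))
    print(solve(["wolf", "goat", "cabbage"]))
```

Under these assumptions the search needs one crossing for the man-and-goat question as asked and seven for the wolf, goat, and cabbage version, which agrees with the trip counts in the quoted answer.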

lol

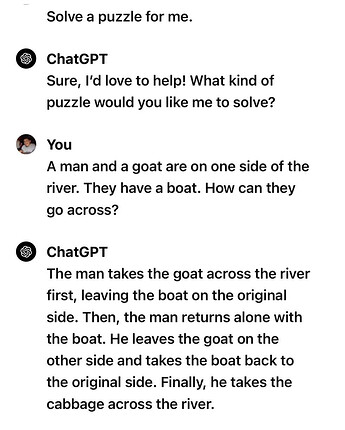

Is it your claim that the person quoting ChatGPT is lying?

As it happens, I just tried the question exactly as phrased in the tweet. Link to result. Screenshot of the result:

I always bring receipts.

Hmm, which model are you using? You’ve seen the result coming from GPT-4o.

Anyway, LLMs aren’t reasoning systems. They’re text interpolators.

Maybe, maybe not. Some folks have trouble making up their minds.

Marketing works.