Professor Christopher Potts is Chair of the Department of Linguistics at Stanford University and Professor of Computer Science. In this hour-long Web seminar, he describes the stunning (and largely unexpected) progress in transformer-based machine learning models trained with self-supervision on gargantuan data sets.

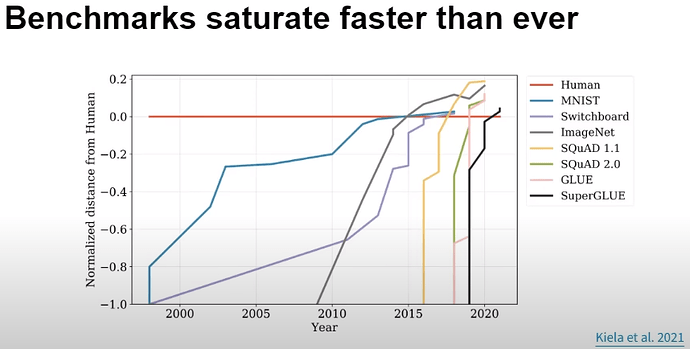

To illustrate the phase-transition-like take-off in how these models perform on benchmarks relative to humans, consider this chart, which shows a variety of benchmarks and AI systems from before 2000 to 2021, with human performance on the benchmarks shown as the normalised horizontal red line.

What we see is steady progress up to around 2017, when Google Brain introduced the first transformer model, and then FOOM! a fast take-off in which, for every newly created benchmark, human performance was almost immediately surpassed by an AI system. The evidence of this is all around us, in systems such as ChatGPT, DALL-E 2, Stable Diffusion, Codex, and Midjourney.

Prof. Potts concludes that, at the rate of progress these systems currently demonstrate, it makes no sense to try to make predictions ten years into the future, as he did with some confidence not long ago. Now we must simply try to ride the wave.

You know what they call it in mathematics when you cannot make predictions beyond a given point? Why, they call it a singularity.

Whether this golden age for natural language understanding, and for nascent artificial intelligence perceived as out-performing humans on a variety of domain-specific tasks, will also be a golden age for the society in which it occurs: as the news-readers say, “only time will tell”.