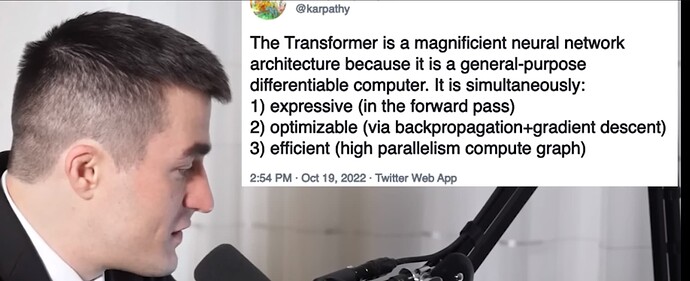

Probably one of the most destructive titles for a paper in history is “Attention Is All You Need”, which sought to prove that recurrent* neural networks were outperformed by a non-recurrent architecture. Elon Musk’s AI guru Andrej Karpathy even went on to palaver with Lex Fridman to claim that the Transformer is Turing complete.

Karpathy attempts to justify his assertion “it is a general-purpose differentiable computer” by attributing to each Transformer layer the quality of a statement in a computer programming language. So, if you have N transformer layers, you have N statements!

Yeah, but there’s just one thing that he ignores: There are no loops because there is no recurrence.
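To make the point concrete, here is a toy sketch in Python. It is an illustration only, not a model of any real Transformer: the names `collatz_step`, `fixed_depth` and `recurrent` are hypothetical, and the Collatz update merely stands in for "one statement". A single pass through a fixed stack of N layers executes exactly N statements no matter what the input is, whereas a loop keeps going until its own state tells it to stop.

```python
def collatz_step(n: int) -> int:
    """One 'statement': a single Collatz update (identity once n reaches 1)."""
    if n == 1:
        return 1
    return n // 2 if n % 2 == 0 else 3 * n + 1


def fixed_depth(n: int, num_layers: int) -> int:
    """Straight-line program: exactly num_layers statements, fixed before n is seen."""
    for _ in range(num_layers):  # stands for an unrolled stack, not a data-dependent loop
        n = collatz_step(n)
    return n


def recurrent(n: int) -> tuple:
    """Loop whose trip count is decided by the data itself."""
    steps = 0
    while n != 1:
        n = collatz_step(n)
        steps += 1
    return n, steps


print(fixed_depth(6, 8))   # 8 statements happen to be enough for 6
print(fixed_depth(27, 8))  # the same 8 statements fall short for 27
print(recurrent(27))       # the loop simply runs as long as it has to
```

Whatever depth is picked for `fixed_depth`, some input needs more steps than that; the loop has no such ceiling.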

That Musk would look up to such illiteracy, stunning in both its theoretic and pragmatic bankruptcy, exemplifies why the field of machine learning has succumbed to the “algorithmic bias” industry’s theocratic alignment of science with the moral zeitgeist.

Imagine Newton struggling to incorporate the notion of velocity into the state of a physical system so that the concept of momentum could make its debut in the natural sciences, after millennia of Greek misapprehension that force is the essence of motion. Then some idiot from the King’s court shows up with otherwise quite useful techniques of correlation and factor analysis granted him by a peek into the 19th century through the eyes of Galton, claiming “Statistics are all you need.” This idiot then denounces Newton, with the full authority of the King, backing up his idiocy with all of the amazing things Galton’s techniques allow him to do.

That’s “Attention Is All You Need” in the present crisis due to Artificial Idiocy.

So my response to the Karpathy interview was simply this:

“Recurrence Is All You Need”

The key word here is “Need” as in “necessary” in order to achieve true “general-purpose” computation.

Oh, but what about Galton? I mean, statistics are so powerful and provide us with such pragmatic advantage! How can I claim that Musk is being misled in a pragmatic sense?

The answer is that while we must always give statistics its due – just as we must The Devil – we must never elevate The Devil to the place of God lest we damn ourselves to the eternal torment of argument over causation. Always be mindful that statistics are merely a way of identifying steps (i.e., “statements”) that must be incorporated into an ultimately recurrent model.

Fortunately there are a few sane folks out there looking into doing this, but I fear they are more marginalized, in relative terms, than a Newton who is barred forever from influence because some idiot managed to dazzle the King with a peek into the future pragmatics of statistics.

Here’s a paper by such marginalized Newtons, groping in the dark to recover what Alan Turing taught us nearly a century ago:

Transformer-based models show their effectiveness across multiple domains and tasks. The self-attention allows to combine information from all sequence elements into context-aware representations. However, global and local information has to be stored mostly in the same element-wise representations. Moreover, the length of an input sequence is limited by quadratic computational complexity of self-attention.

In this work, we propose and study a memory-augmented segment-level recurrent Transformer (RMT). Memory allows to store and process local and global information as well as to pass information between segments of the long sequence with the help of recurrence.

We implement a memory mechanism with no changes to Transformer model by adding special memory tokens to the input or output sequence. Then the model is trained to control both memory operations and sequence representations processing.

Results of experiments show that RMT performs on par with the Transformer-XL on language modeling for smaller memory sizes and outperforms it for tasks that require longer sequence processing. We show that adding memory tokens to Tr-XL is able to improve its performance. This makes Recurrent Memory Transformer a promising architecture for applications that require learning of long-term dependencies and general purpose in memory processing, such as algorithmic tasks and reasoning.
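The mechanism the abstract describes can be sketched roughly as follows. This is a minimal toy under loud assumptions, not the paper's implementation: `toy_segment_model` is a hypothetical hand-written stand-in for a Transformer pass, and its "memory" is a single number, whereas the real RMT learns what to read from and write to its memory tokens through training. The only thing the sketch tries to show is the control flow: memory tokens are added to each segment's input, and the memory written by one segment is fed to the next.

```python
def toy_segment_model(memory, segment):
    """Hypothetical stand-in for one Transformer pass over [memory tokens + segment].

    Returns (outputs, new_memory). The toy 'memory' holds a running sum, so the
    outputs for a segment can depend on everything seen in earlier segments.
    """
    tokens = memory + segment             # memory tokens are just extra input tokens
    total = sum(tokens)
    outputs = [total for _ in segment]    # one output per real token (toy: running sum)
    new_memory = [total]                  # written memory, handed to the next segment
    return outputs, new_memory


def run_with_memory(sequence, segment_len):
    """Segment-level recurrence: the model sees one segment at a time plus memory."""
    memory = [0]                          # initial (empty) memory
    outputs = []
    for start in range(0, len(sequence), segment_len):
        segment = sequence[start:start + segment_len]
        seg_out, memory = toy_segment_model(memory, segment)
        outputs.extend(seg_out)
    return outputs, memory


outputs, memory = run_with_memory([1, 2, 3, 4, 5, 6], segment_len=2)
print(outputs)  # [3, 3, 10, 10, 21, 21]
print(memory)   # [21]
```

The model function itself never sees more than one segment, yet the last outputs reflect the whole sequence, because the state is carried forward by the loop around it.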

*Moreover, the only sense in which the outperformed “recurrent” architectures could be called “recurrent” was a very degenerate one, because they were being used in a feed-forward, layered architecture where the recurrence was subservient. This is rather like claiming that a digital multiply circuit’s use of flip-flops to communicate steps in multiplication is “recurrent” and hence is a Turing complete “general-purpose computer”.