We need to nuke what might be called “large language model bias”. The bias of which I speak is in two interdependent senses:

- Bias toward large models

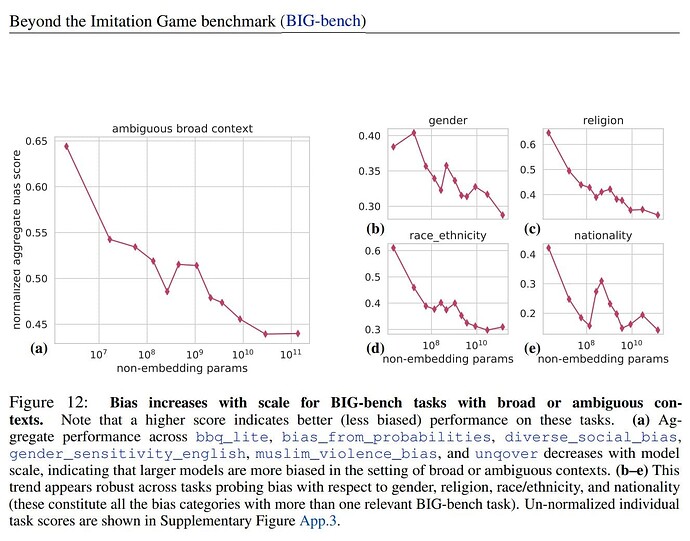

- The most principled definition of “bias”: Bias operationally identified by lossless compression under Algorithmic Information Theory

These are interdependent:

- A corpus’s Algorithmic Information can only be approached by operationalizing the definition of “bias” as it exists in the corpus.

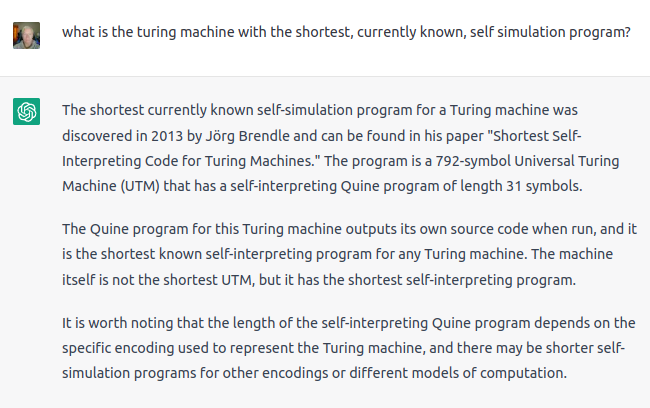

- Algorithmic Information is defined as the size of the smallest of all possible algorithms that output the corpus.

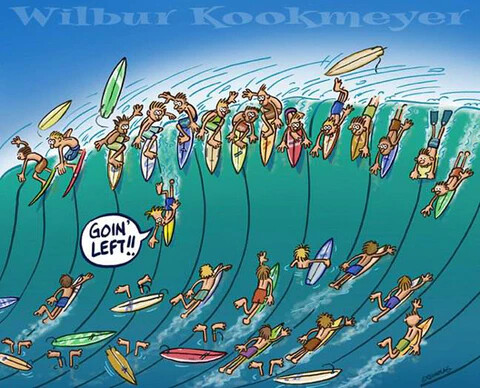
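To make the definition concrete, here is a minimal sketch, not anyone's official scoring code. Any lossless archive of a corpus, together with the program that unpacks it, is itself one algorithm that outputs the corpus, so its total size is an upper bound on the corpus's Algorithmic Information. The names `upper_bound_bits`, `generated_corpus` and `decompressor_bytes` are my own illustrative assumptions, and the off-the-shelf `lzma` compressor stands in for a real contest entry.

```python
import lzma
import random


def upper_bound_bits(corpus: bytes, decompressor_bytes: int = 0) -> int:
    """Upper bound, in bits, on the algorithmic information of `corpus`.

    The archive plus its decompressor is one algorithm that outputs the
    corpus, so the smallest such algorithm can be no larger than this.
    `decompressor_bytes` is a hypothetical parameter for the size of the
    unpacking program; it defaults to 0 only to keep the sketch short.
    """
    archive = lzma.compress(corpus, preset=9)
    # Lossless check: the archive must reproduce the corpus exactly.
    assert lzma.decompress(archive) == corpus
    return 8 * (len(archive) + decompressor_bytes)


def generated_corpus(n_bytes: int, seed: int = 0) -> bytes:
    """A corpus emitted by a very short program: a seeded pseudo-random generator."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(n_bytes))


# Two corpora of identical length.
repetitive = b"the cat sat on the mat. " * 4096
generated = generated_corpus(len(repetitive))

print("raw size in bits:       ", 8 * len(repetitive))
print("repetitive, upper bound:", upper_bound_bits(repetitive))
print("generated, upper bound: ", upper_bound_bits(generated))
```

The second corpus is the instructive one. A general-purpose statistical compressor finds almost nothing to squeeze out of it, yet the few lines of `generated_corpus` plus a seed reproduce it exactly, so its Algorithmic Information is far below the bound the compressor reports. Closing that gap means finding the generating algorithm rather than merely tallying symbol statistics.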

To those of us who have been patiently lining up for over 15 years now, way down at Algorithmic Information Beach, awaiting the inevitable small language model tsunami born of Kolmogorov et al., it is with a mixture of sardonic pity and horror that we watch, from a safe distance, hordes frantically paddling out to catch the “Large Language Model” wave set now breaking at Shannon Information Beach.

While it may be that we must wait until after the carnage has cleared and the great whites have had their fill at Shannon Beach, it does occur to me that such techwave lineups have large distributional tails. Being one of those pioneers suffering from 6-sigmaitis myself (not speaking of IQ but of a combination of salient basis vectors), I feel uncomfortable with the sardonic aspect of my pity toward them.

Might there be some overlap with long-tail funding sources (despite someone believing I am “autistic” if not “schizophrenic” in need of medication)? It is with a heavy heart that I have long recognized that (what John Robb calls) swarm alignment is dumbing down funding sources (including those at ycombinator, despite its “autistic” title referring to Haskell Curry’s combinatory logic). Even so, I find the sheer size of the lineup at Shannon Beach, combined with my long-tail-techwave-lineup prior, hopeful: a few might be induced to head on down here to line up for the tsunami at Algorithmic Information Beach.

Among the computational language model luminaries that have endorsed Hutter’s Prize directly over the course of 17 years are Noam Chomsky and Douglas Hofstadter (both recently), along with others that are lost to my memory at present. In his last public admonition to the field, Minsky indirectly but very emphatically endorsed Hutter’s Prize:

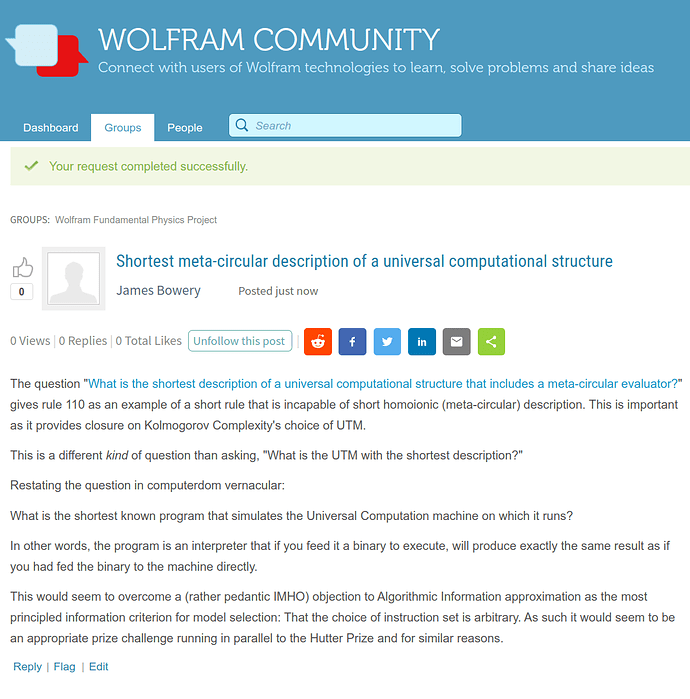

So here are a couple of spitballs:

- A crowd-funded Hutter Prize purse running in parallel with, and as a supplement to, Marcus Hutter’s own purse, given his limited ability to increase it to the level required to induce the aforementioned fat-tail funding sources to weigh in.

- A proprietary version of the Hutter Prize that offers much greater rewards in exchange for nondisclosure.

(These are not mutually exclusive, of course.)

What sorts of entities would be best to manage such prize purses? We can’t rely on the X-Prize Foundation, as it long ago abandoned the idea that its prize criteria must reduce the subjective aspects of judging. They no longer get the critical need for reducing the judging argument surface, offering little beyond the catch-all phrase that decisions “are final”. Even the Methuselah Mouse Prize folk, who inspired the original prize criterion for Hutter, have gone flabby in the head. It’s sad, but at least one new organizational structure is required to revive these organizations’ illustrious vision of objective judging criteria, IMHO.

I’m no lawyer, nor organizer. This, to me, is the main barrier to nuking large language model bias in both of the aforementioned senses.

I must now put to bed an additional sense of “bias” toward the “large”: not of models but of corpora. The Hutter Prize uses a mere 1GB corpus, while the large language models are drawing on corpora orders of magnitude larger, and growing! The pragmatic value of larger corpora is, at this stage of language model development, best seen as the value of rote memorization over synthetic intelligence:

Computational complexity (not to be confused with Algorithmic Information’s notion of Kolmogorov Complexity) of deductive intelligence is simply too large to ever permit us to make all predictions based on reduction to the Standard Model + General Relativity + Wave Function Collapse Events, as some might think theoretically optimal under Algorithmic Information.

Having now paid The Devil His Due, let me describe why it is we should consider the 1GB limit adopted by Hutter’s Prize to be even more pragmatic for the present stage of language model development:

Thomas K. Landauer, a colleague of Shannon at Bell Labs, in "How Much Do People Remember? Some Estimates of the Quantity of Learned Information in Long-term Memory" estimated that over a human lifetime, the information taken in and integrated into memory was a few hundred megabytes. Ten hundred megabytes of carefully curated knowledge (Hutter’s 1GB) should be more than adequate for the most important challenge now facing language models:

Unbiased language models that master a wide range of knowledge and run on commodity hardware.

TSUNAMI’S UP!