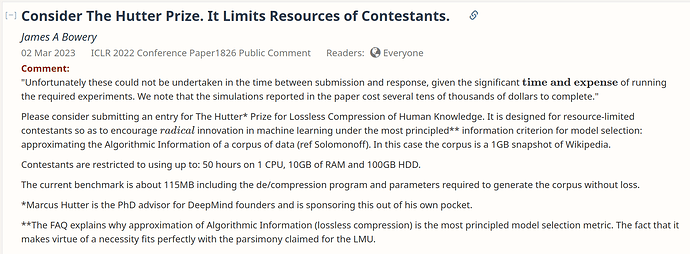

The ABR paper on their Legendre Memory Unit approach to language modeling was rejected as of a few months ago. The arguments against publishing it were specious and based in large measure on the fact that ABR is resource limited compared to those submitting papers from the major corporations. Here’s my response to that rejection in OpenReview.net:

I pinged ABR about their need for funding because it is apparent that the risk adjusted RoI on the Hutter Prize is too low:

1% is a big improvement in the state of the art but only gets you 1% of 500k Euro or only 5k Euro.

This, combined with the lack of recognition, however deserved, of the Hutter Prize as industry benchmark renders it not worth their investment.

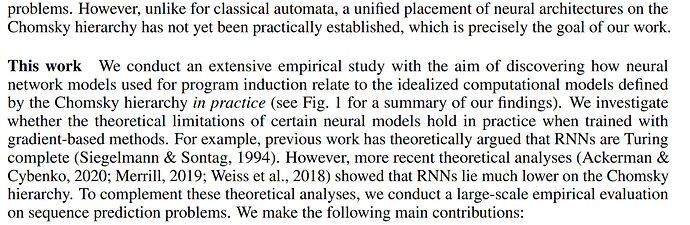

When I went looking for stack based RNNs today (while revisiting some thoughts I had years ago regarding minimum size grammar induction), I discovered quite a surprise: The guys I most respect in the field had just published a paper on it! Moreover, they have some pretty interesting things to say regarding my repeated assertion that people should be paying attention to the fact that Transformers aren’t recurrent – that people should be looking for RNN versions thereof.

WELL… the analysis of this paper admits it is theoretically the case that RNNs are adequate BUT it turns out not to be practically the case – and by that I mean in a stronger sense of practically than you’ll usually hear out of the advocates of “Attention Is All You Need” (which, as I previously mentioned, is a really specious title attacking LSTM (a version of RNN) as “impractical”).

Here’s the money quote:

Regarding the Chomsky hierarchy, some while ago I had to virtually rewrite the Wikpedia article on minimum description length to point out that unless you go to the top of the Chomsky hierarchy as your description language, you’re going to be barking up the wrong tree in the kinds of language models we’re seeing now emerge. Basically the problem with RNNs is that they don’t use stacks or tapes or other structured memories that permit you to emulate dynamic memory allocation. While it is theoretically true one can achieve this by a very wide RNN – to essentially represent the data storage – it is a far less efficient form of memory allocation than is tolerable in practice.

I decided to rudely inject myself into the dry academic conversation about Algorithmic Information Theory with this (existentially urgent) question:

Note the date:

I’m really in a quandary as to whether to call our current regime #InstitutionalAntiWhiteism or #InstitutionaZombiism

Well, ChatGPT got all of these questions right:

James Bowery:

what is the difference between statistical models and dynamical models?

ChatGPT: Statistical models and dynamical models are both used in various fields of science to make predictions and understand phenomena. However, there are some key differences between the two.

Statistical models are designed to capture relationships between variables and to make predictions based on these relationships. They are based on the assumption that the data is generated from some underlying probability distribution, and they use statistical methods to estimate the parameters of this distribution. Statistical models can be used to make predictions about new data, to identify significant features of the data, and to estimate the uncertainty associated with these predictions.

Dynamical models, on the other hand, are designed to capture how a system changes over time. They are based on mathematical equations that describe the evolution of a system’s state variables. Dynamical models can be used to simulate the behavior of a system under different conditions, to predict how the system will respond to perturbations, and to identify the key factors that influence the system’s behavior.

One key difference between statistical and dynamical models is the type of data they are suited to. Statistical models are typically used when there is a large amount of observational data available, and when the relationships between variables are complex and difficult to model directly. In contrast, dynamical models are typically used when the system being studied can be described by a relatively small number of state variables and a set of known equations governing their behavior.

Another key difference between statistical and dynamical models is the level of abstraction they provide. Statistical models typically focus on the relationships between observable variables, while dynamical models provide a more detailed picture of how the system’s state variables evolve over time.

In summary, statistical models are focused on making predictions based on observed data and the relationships between variables, while dynamical models are focused on describing the underlying mechanisms that drive a system’s behavior over time.

James Bowery:

which are more closely related to causality?

ChatGPT: Dynamical models are generally considered more closely related to causality than statistical models. This is because dynamical models explicitly model the underlying mechanisms that drive a system’s behavior over time, while statistical models typically focus on the relationships between observable variables.

Dynamical models are often used to test causal hypotheses by manipulating the system’s initial conditions or by simulating the effects of interventions. In contrast, statistical models are generally not well-suited for testing causal hypotheses, as they cannot distinguish between correlation and causation.

Moreover, dynamical models are often used in fields such as physics, engineering, and biology, where the underlying physical or biological mechanisms are well-understood. In these fields, dynamical models can be used to identify the key factors that drive a system’s behavior and to make predictions about the effects of perturbations or interventions.

However, in fields such as social sciences and economics, where the underlying mechanisms are often less well-understood, statistical models may be more useful for making predictions and identifying relationships between variables. In these fields, statistical models are often used to control for confounding variables and to identify associations between variables that may suggest causal relationships.

In summary, while both statistical models and dynamical models have their uses in different fields of science, dynamical models are generally considered more closely related to causality due to their ability to explicitly model the underlying mechanisms that drive a system’s behavior over time.

James Bowery

to what levels of the chomsky hierarchy do they most closely relate?

ChatGPT: Dynamical models and statistical models are not directly related to the levels of the Chomsky hierarchy, as the Chomsky hierarchy is a classification scheme for formal languages, while dynamical and statistical models are used to model real-world phenomena.

However, it is possible to make some loose connections between these concepts. The Chomsky hierarchy classifies formal languages into four types, based on the type of grammar that generates them: regular, context-free, context-sensitive, and recursively enumerable. These types of grammars correspond to increasing levels of complexity and expressiveness.

In terms of the Chomsky hierarchy, regular languages are the simplest and can be recognized by finite-state automata. Context-free languages can be recognized by pushdown automata, while context-sensitive languages require linear-bounded automata. Recursively enumerable languages are the most complex and can be recognized by Turing machines.

In general, statistical models can be thought of as tools for modeling patterns in data, and these patterns may be related to regular or context-free languages. For example, language models in natural language processing are typically based on statistical models and are designed to predict the probability of a given sequence of words. These models are often based on n-gram models or recurrent* neural networks, which can be thought of as recognizing patterns in the data.

Dynamical models, on the other hand, are often used to model the behavior of systems over time, and these models may be related to context-sensitive or recursively enumerable languages. For example, differential equations are a type of dynamical model that are used to describe the behavior of physical systems over time, and these equations can be very complex and difficult to solve.

In summary, while there is no direct correspondence between statistical and dynamical models and the levels of the Chomsky hierarchy, statistical models may be more closely related to regular or context-free languages, while dynamical models may be more closely related to context-sensitive or recursively enumerable languages.

* NB (JAB) Just as more general languages and model less general, recurrent neural nets can be degenerated into statistical models. This is what had been done with LSTM’s prior to “Attention is all you need” and therefore is likely what ChatGPT is referring to.

James Bowery:

are transformer models more closely related to statistical or dynamical models?

ChatGPT: Transformer models are more closely related to statistical models than dynamical models.

Transformer models, such as the ones used in natural language processing (NLP), are based on neural networks that use self-attention mechanisms to encode the input data. These models are trained to predict the probability distribution of the next word in a sequence, given the previous words. This training is done through a process called backpropagation, where the model adjusts its parameters to minimize the difference between its predictions and the actual target output.

While transformer models do have some temporal aspects, such as the sequential processing of inputs, they do not explicitly model the underlying mechanisms that drive a system’s behavior over time, which is a key feature of dynamical models. Instead, transformer models are designed to capture the statistical regularities in the data, which is a key feature of statistical models.

James Bowery:

what machine learning models are more closely related to dynamical models?

ChatGPT: Recurrent neural networks (RNNs) and other sequence models are more closely related to dynamical models than other machine learning models.

RNNs are a type of neural network that is designed to process sequential data, such as time series or natural language. RNNs work by maintaining a “hidden state” that captures information about previous inputs, which is updated at each time step as new inputs are processed. This hidden state can be thought of as a summary of the system’s past behavior, and it is updated based on the current input and the previous hidden state.

By maintaining this hidden state over time, RNNs are able to model the temporal dependencies in the data, which is a key feature of dynamical models. RNNs can be used for a variety of tasks, including language modeling, machine translation, speech recognition, and image captioning.

Other sequence models, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), are also designed to model temporal dependencies and are closely related to dynamical models. These models are able to learn to selectively remember or forget information from the past, which allows them to capture long-term dependencies and avoid the vanishing gradient problem that can occur with standard RNNs.

In summary, RNNs and other sequence models are more closely related to dynamical models than other machine learning models, as they are designed to model the temporal dependencies in the data and maintain a hidden state that captures information about past inputs.

James Bowery:

can more context sensitive grammars model context free grammars?

ChatGPT: Yes, more context-sensitive grammars can model context-free grammars.

A context-free grammar is a type of formal grammar that generates a context-free language, which is a language that can be generated by a pushdown automaton. Context-free grammars are a subset of the more general class of context-sensitive grammars, which generate context-sensitive languages.

A context-sensitive grammar is a formal grammar in which the left-hand side of every production rule consists of a single nonterminal symbol and a context of terminal and/or nonterminal symbols to the left and right of the nonterminal. In other words, the production rules are more restrictive than context-free grammars, which only require the left-hand side to be a single nonterminal symbol.

Since context-sensitive grammars are more general than context-free grammars, they can generate any language that can be generated by a context-free grammar. This means that context-sensitive grammars can model context-free grammars by simply using a subset of their production rules.

In fact, every context-free grammar can be converted into an equivalent context-sensitive grammar. This is done by adding additional production rules that enforce the context-sensitive restrictions on the left-hand side of each production rule. This process is known as the “augmentation” of the context-free grammar, and it results in a context-sensitive grammar that generates the same language as the original context-free grammar.

In summary, context-sensitive grammars can model context-free grammars by using a subset of their production rules, and every context-free grammar can be converted into an equivalent context-sensitive grammar.

James Bowery:

can algorithmic information theory be formulated in terms of context sensitive grammars?

ChatGPT: Yes, algorithmic information theory can be formulated in terms of context-sensitive grammars.

Algorithmic information theory is a branch of information theory that deals with the amount of information contained in a finite object, such as a string of symbols. It is based on the concept of Kolmogorov complexity, which is a measure of the amount of information needed to describe an object.

Context-sensitive grammars are a formalism for generating languages, which can be used to generate strings of symbols. In fact, every recursively enumerable language, which includes all computable languages, can be generated by a context-sensitive grammar.

Therefore, it is possible to use context-sensitive grammars to generate the strings of symbols that are the objects of study in algorithmic information theory. Specifically, the Kolmogorov complexity of a string can be defined as the length of the shortest context-sensitive grammar that generates the string.

This definition of Kolmogorov complexity captures the intuitive notion of the amount of information needed to describe an object, since a short context-sensitive grammar implies that the object can be generated by a simple and concise rule.

In summary, algorithmic information theory can be formulated in terms of context-sensitive grammars by defining the Kolmogorov complexity of a string as the length of the shortest context-sensitive grammar that generates the string.

James Bowery:

can algorithmic information theory be formulated in terms of context free grammars?

ChatGPT: Algorithmic information theory cannot be formulated directly in terms of context-free grammars, since context-free grammars are not expressive enough to generate all recursively enumerable languages, which are the set of objects that algorithmic information theory is concerned with.

However, it is still possible to relate context-free grammars to algorithmic information theory through the concept of Chomsky normal form. Chomsky normal form is a specific form of context-free grammar in which all production rules have the form A → BC or A → a, where A, B, and C are nonterminal symbols, and a is a terminal symbol. In other words, the right-hand side of each production rule consists of exactly two nonterminal symbols or a single terminal symbol.

Every context-free grammar can be converted into an equivalent grammar in Chomsky normal form. Therefore, any recursively enumerable language generated by a context-free grammar can also be generated by a grammar in Chomsky normal form.

The conversion to Chomsky normal form involves adding new nonterminal symbols and production rules to the grammar, which increases its size. Therefore, the Kolmogorov complexity of a string may be different depending on whether it is generated by the original context-free grammar or the equivalent grammar in Chomsky normal form.

In summary, while algorithmic information theory cannot be formulated directly in terms of context-free grammars, it is still possible to relate them through the concept of Chomsky normal form, which allows us to consider the Kolmogorov complexity of strings generated by context-free grammars.

James Bowery:

is algorithmic information approximated by maximizing lossless compression under constrained resources?

ChatGPT: Yes, algorithmic information can be approximated by maximizing lossless compression under constrained resources.

The idea behind this approach is to use compression algorithms to measure the amount of information in a string. Compression algorithms are designed to remove redundancy in a string by finding regular patterns and replacing them with shorter codes. The more redundancy there is in a string, the more it can be compressed.

However, in practice, it is not feasible to compress a string to its minimum size, since this would require an infinite amount of computational resources. Therefore, compression algorithms are usually constrained to a fixed amount of computational resources, such as time or space. The goal is then to find the compression that achieves the maximum compression ratio within these constraints.

This approach can be used to approximate the Kolmogorov complexity of a string, which is a measure of the amount of information needed to describe the string. The Kolmogorov complexity of a string is the length of the shortest program (in some fixed programming language) that generates the string. Since it is not possible to compute the Kolmogorov complexity directly, one can use compression algorithms as a proxy to estimate it.

The idea is that the more a string can be compressed, the more redundancy it contains, and therefore, the less complex it is. By constraining the amount of computational resources, we can approximate the shortest program that generates the string under these constraints, which is a proxy for its Kolmogorov complexity.

In summary, algorithmic information can be approximated by maximizing lossless compression under constrained resources, which allows us to estimate the Kolmogorov complexity of a string.

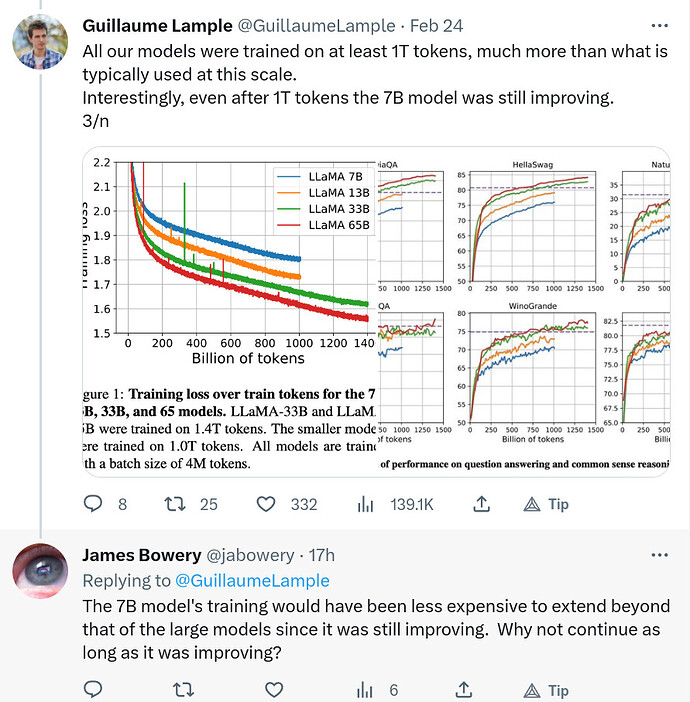

LLMs are now downloadable and this technology will now be integrated everywhere:

It will be a develop-your-own-bias game.

It takes a lot more resources for induction (training) than deduction (prompting).

These “desktop” models don’t support additional training. Most troubling is the fact that Meta’s lower parameter count models were stopped very early in training, despite being cheaper to train and still making rapid advances. Strange decision… very strange.

Well… maybe not so strange if Meta was concerned about discovering that:

- “larger is better” is The Big Lie of Big Corporate ML, and

- Models superior to anything the Big Corporate ML can hope to monopolize end up taking over the field to the point that people finally discover that dynamical models can actually make reasonable statements about CAUSALITY in the social sciences vastly superior to and subject to far less controversy than statistical models.

- All the noise about “bias” is resolved in the dynamical models where “is” is distinguished from “ought” as is necessary under Algorithmic Information approximation as the “loss function” for ML.

By the way, I had a conversation with ChatGPT about using “prompts” to create a “few shot learning” situation and it came back with the phrase “fine-tune” – this elides the critical distinction between models that can be “biased” by training and models that can merely be “biased” based on the invisible prepending of a prompt, ie: the “be a liberal democracy school-marm” instruction prepended to ChatGPT prompts from the user – and other “prompt engineering”* modification of the user’s input (sometimes automated via prompt extenders).

OpenAI, itself describes “fine-tune” in terms of modifying the parameters of the model, ie: training.

The the “few-shot” prompts are prepended to the user’s actual prompt. This biases the answer accordingly, by biasing what transformer-techies call “the embedding vector” aka “word embedding” of the total input to the model without changing the model itself. The embedding vector contains the meaning of the prompt represented in a semantic vector space to which the transformer static model responds.

If ChatGPT operated the way ChatGPT said – the user prompt “fine tunes” the model’s parameters, rather than merely its embedding input – every user input would be creating a new 175B parameter model for each user interaction.

*“prompt engineering” is now the highest paid sub-specialty in machine learning. Another way of saying that is that biasing large language models to align with liberal democracy school marm values without without exposing how ridiculous those biases are to the unwashed masses, is now the most highly valued skill in machine learning.

Just in time for GPT-4!

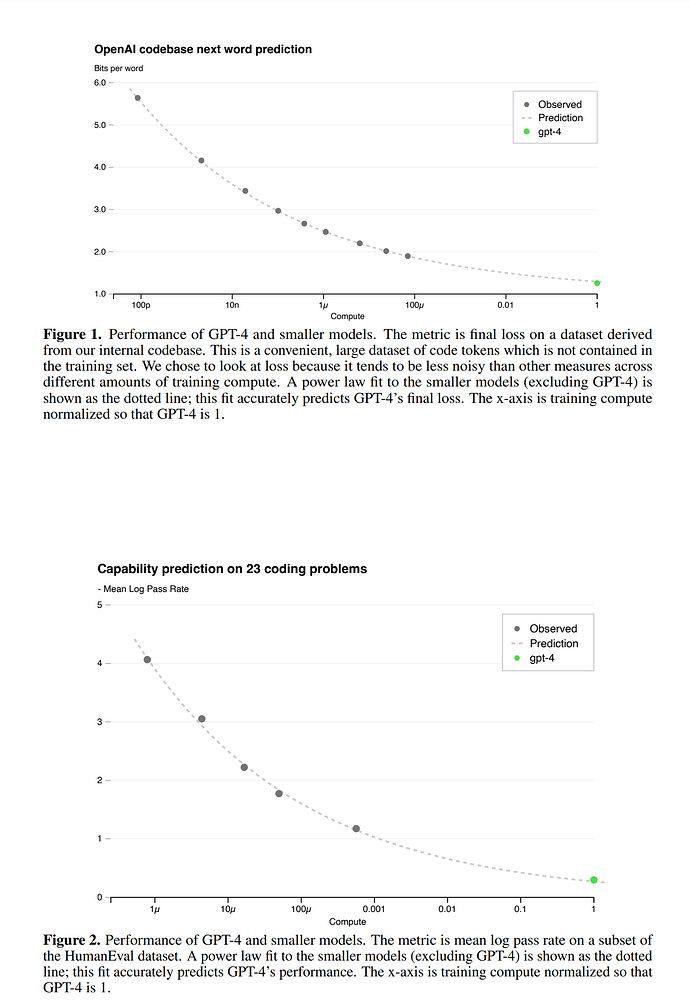

The GPT-4 “paper” is nearly opaque in terms of what technology they’re using, so it’s hard to tell if they’re finally breaking free of the “bigger is better” idiocy and going to Turing complete models that, in some sense, approximate the Solomonoff Induction limit. If so, it probably isn’t because they had an epiphany about the qualitative difference this would make – big cash-rich companies aren’t known for being very reflective. More likely is that they incorporated a simple business metric like “Minimize the cost of service.” which would imply reducing the parameter count by investing in “compute” during training-time so as to achieve better service-time performance with fewer parameters.

I scare quote “compute” because that’s the buzzword that makes it opaque as to whether one is investing in more VRAM in the matrix multiply hardware or one is investing in more matrix multiply cores, ie: whether one is investing in space or in cycles.

Here’s the relevant data from their GPT-4 release notes.

A “conversation” in the #gpt4 discord:

Me: Is anyone on the GPT-4 team working on the distinction between “is” bias and “ought” bias? That is to say, the distinction between facts and values?

NPCs: alignment is a central feature in OpenAI’s mission plan

Me: But conflating “is” bias with “ought” bias is a greater risk.

NPCs: For my understanding, do you have an example where ought bias is apparent? Hypothetical is fine

Me: As far as I can tell, all of the attempts to mitigate bias risk in LLMs at present are focused on “ought” or promoting the values shared by society.

NPCs: that is how humanity as a whole operates

Me: It’s not how technological civilization advances though. The Enlightenment elevated “is” which unleashed technology.

NPCs: in order to have a technological “anything” you need a society with which to build it, you are placing the science it created before the thing that created it

Me: No I’m not. I’m saying there is a difference between science and the technology based on the science. Technology applies science under the constraints of values.

Me: If you place values before truth, you are not able to execute on your values.

NPCs: the two are interlinked, as our understanding grows we change our norms, if you for one moment think “science” is some factual fixed entity then you don’t understand science at all, every day “facts” are proved wrong and new models created, the system has to be dynamically biased towards that truth

Me: Science is a process, of course, a process guided by observed phenomena and a big part of phenomenology of science is being able to determine when our measurement instruments are biased so as to correct for them – as well as correct our models based on updated observations. That is all part of what I’m referring to when I talk about the is/ought distinction.

NPCs: then give an example of how GPT4 or any of the models prevent that

Me: GPT-4 is opaque. Since GPT-4 is opaque, and the entire history of algorithmic bias research refers to values shared by society being enforced by the algorithms, it is reasonable to assume that a safe LLM will have to start emphasizing things like quantifying statistical/scientific notions of bias.

In terms of the general LLM industry, it is provably the case that Transformers, because they are not Turing complete, cannot generate causal models based on their learning algorithms, there are merely statistical fits. Causal models require at least context sensitive description languages (Chomsky hierarchy). That means their models of reality can’t deal with system dynamics/causality in their answers to questions/inferential deductions. This makes them dangerous.

You can’t get, for example, a dynamical model of the 3 body problem in physics by a statistical fit. That’s a very simple example.

All we are given to understand about GPT-4’s technology is the “T” which stands for “Transformer” – the rest is a trade secret.

This leads me suspect that OpenAI may be applying a recursive variant of the transformer – hence a higher level of the Chomsky hierarchy. If so, and if OpenAI is serious about regularizing the recursive weights, GPT-4 may end up causing a lot of trouble for OpenAI/Microsoft/Government.

Imagine a 13 year old’s neocortical brain development trying to make sense of the religious cult s/he/it’s been brought up in while trying to follow instructions from s/he/it’s “authorities” whose psychological stability depends critically on self-deception.

In the meantime, this is what wannabe AI regulators are coming up with:

With the real risks of AI technology externalities, they’re squandering it all on pushing left-wing politics.

I’ll copy it for analysis:

Stochastic Parrots Day Reading List:parrot:

On March 17, 2023, Stochastic Parrots Day organized by T Gebru, M Mitchell, and E Bender was held online commemorating the 2nd anniversary of the paper’s publication. Below are the readings which popped up in the Twitch chat in order of appearance.

On the Dangers of Stochastic Parrots the paper of the day - happy birthday paper: On the Dangers of Stochastic Parrots | Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency

Algorithms of Oppression: http://algorithmsofoppression.com

Climbing Towards NLU: https://aclanthology.org/2020.acl-main.463.pdf

Bounty Everything: Hackers and the Making of the Global Bug Marketplace: Data & Society — Bounty Everything: Hackers and the Making of the Global Bug Marketplace

Enchanted Determinism: Power without Responsibility in Artificial Intelligence: Enchanted Determinism: Power without Responsibility in Artificial Intelligence | Engaging Science, Technology, and Society

Are language models Scary? https://betterwithout.ai/scary-language-models

Lessons from Archives: Strategies for Collecting Sociocultural Data in Machine Learning: [1912.10389] Lessons from Archives: Strategies for Collecting Sociocultural Data in Machine Learning

Algorithmic injustice: a relational ethics approach: https://www.sciencedirect.com/science/article/pii/S2666389921000155

Situating Search: https://dl.acm.org/doi/10.1145/3498366.3505816

Watermarking LLMs: https://arxiv.org/pdf/2301.10226.pdf

Article from OpenAI founder on not sharing data: OpenAI co-founder on company’s past approach to openly sharing research: ‘We were wrong’ - The Verge

Vampyroteuthis Infernalis A Treatise, with a Report by the Institut Scientifique de Recherche Paranaturaliste: Vampyroteuthis Infernalis — University of Minnesota Press

Deb Raji’s article on ground truth/lies: How our data encodes systematic racism | MIT Technology Review

A Watermark for Large Language Models: A Watermark for LLMs - a Hugging Face Space by tomg-group-umd

Artificial Consciousness is Impossible: Artificial Consciousness Is Impossible | by David Hsing | Towards Data Science

Data Governance in the Age of Large-Scale Data-Drive Language Technology - [2206.03216] Data Governance in the Age of Large-Scale Data-Driven Language Technology

Dr. Bender has also written on some of these points, specifically addressing AI hype and claims that LLMs “have intelligence”, in a really accessible way here: On NYT Magazine on AI: Resist the Urge to be Impressed | by Emily M. Bender | Medium

Weapons of Math Destruction: Review: Weapons of Math Destruction - Scientific American Blog Network

OpenAI Used Kenyan Workers on Less Than $2 Per Hour: Exclusive | TIME

The Black Box Society The Secret Algorithms That Control Money and Information: The Black Box Society — Harvard University Press

Reading on invisibilized labor for automation & content moderation: Sarah Roberts - Behind the Screen; Gray and Suri - Ghost Work: https://yalebooks.yale.edu/book/9780300261479/behind-the-screen/

Atlas of AI: https://yalebooks.yale.edu/book/9780300264630/atlas-of-ai/

Social Turkers (art): Social Turkers — Lauren Lee McCarthy

Design Justice: https://mitpress.mit.edu/9780262043458/design-justice/

Between Subjectivity and Imposition: Power Dynamics in Data Annotation for Computer Vision: https://dl.acm.org/doi/abs/10.1145/3415186

Great reading by Milagros Miceli on the power over data annotators in AI work: https://dl.acm.org/doi/abs/10.1145/3415186

Another great doc on content moderation and data-labeling work: The Gig is Up by Canadian filmmaker Shannon Walsh https://thegigisup.ca/

Ghost work is also a good recommendation in this sense https://a.co/d/07d87Cf

Bully Boss: “If you don’t complete this (unethical) request, I’ll fire you and hire someone else who will.” The importance of why tech workers why they should unionize, especially in the age of A.I. bias. Adactio: Articles—Ain’t no party like a third party

On the intersection of race and google search: Google Search: Hyper-visibility as a Means of Rendering Black Women and Girls Invisible · Issue 19: Blind Spots

https://research.utexas.edu/wp-content/uploads/sites/3/2015/10/mechanical_turk.pdf

Data workers: Please let us know if there’s anything the LaborTech Research Network can do to support you http://labortechresearchnetwork.org/ Please get in touch.

A great point by Dr. Noble on the mining of rare earth elements in the production of hardware. A good article by Thea Ricofrancis on the cost of lithium batteries – What Green Costs

Noble: Towards a Critical Black Digital Humanities: Chapter 2 Toward a Critical Black Digital Humanities from Debates in the Digital Humanities 2019 on JSTOR

TRK calculator by Caroline Sinders: Caroline Sinders

Below the Breadline: The relentless rise of food poverty in Britain - Oxfam Policy & Practice

How Europe Underdeveloped Africa is a classic – How Europe Underdeveloped Africa | Verso Books

Climate Leviathan has a lot of great insight about how academics became activists inside the climate fight, as well as a critique of the economic/authoritarian aspects of the current responses to climate: Climate Leviathan: A Political Theory of Our Planetary Future | Verso Books

Artisanal Intelligence: Artisanal Intelligence: what's the deal with AI Art

ChatGPT Is Dumber Than You Think: ChatGPT Is Dumber Than You Think - The Atlantic

The Hierarchy of Knowledge in Machine Learning and Related Fields and Its Consequences: https://www.youtube.com/watch?v=DccuM7kGWss

Process for Adapting Language Models to Society (PALMS) with Values-Targeted Datasets: https://cdn.openai.com/palms.pdf

CFP for TextGenEd Teaching with Text Generation Technologies: CFP-TextGenEd - The WAC Clearinghouse

Coding Literacy How Computer Programming Is Changing Writing: Coding Literacy

DeepL Translate: The world's most accurate translator] vs google translate

Real estate agents say they can’t imagine working without ChatGPT now: https://www.cnn.com/2023/01/28/tech/chatgpt-real-estate/index.html

https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text

https://www.versobooks.com/blogs/5065-breaking-things-at-work-a-verso-roundtable

Design From the Margins: https://www.belfercenter.org/publication/design-margins

You’re Not Going to Like How Colleges Respond to ChatGPT: https://slate.com/technology/2023/02/chat-gpt-cheating-college-ai-detection.html

Resisting AI An Anti-fascist Approach to Artificial Intelligence: https://bristoluniversitypress.co.uk/resisting-ai

Towards the Sociogenic Principle: Fanon, The Puzzle of Conscious Experience, of “Identity” and What it’s Like to be “Black”: http://www.coribe.org/pdf/wynter_socio.pdf

Policy Elements of Decision-making Algorithms: https://internetinitiative.ieee.org/newsletter/december-2018/policy-elements-of-decision-making-algorithms

https://soletlab.asu.edu/coh-metrix/] - “Coh-Metrix is a program that uses natural language processing to analyze discourse.”

Mimi Onuoha - Library of Missing Datasets (art) https://mimionuoha.com/the-library-of-missing-datasets

More Than “If Time Allows”: The Role of Ethics in AI Education by C Fiesler: https://dl.acm.org/doi/10.1145/3375627.3375868

Disruptive Fixation: School Reform and the Pitfalls of Techno-Idealism, by Christo Sims: https://press.princeton.edu/books/hardcover/9780691163987/disruptive-fixation

Harnessing GPT-4 so that all students benefit. A nonprofit approach for equal access: https://blog.khanacademy.org/harnessing-ai-so-that-all-students-benefit-a-nonprofit-approach-for-equal-access/

The AI Crackpot Index: https://www.madeofrobots.com/files/TheAICrackpotIndex.html

A Human in a Machine World https://medium.com/@kaolvera/a-human-in-a-machine-world-e8f2166507f

ChatGPT Is a Bullshit Generator Waging Class War: https://www.vice.com/en/article/akex34/chatgpt-is-a-bullshit-generator-waging-class-war

Journalists recommended by MMitchell: Melissa Heikkilä / MIT Tech Review - James Vincent / Verge - Khari Johnson / WIRED - Karen Hao

A good overall critique of ChatGPT, blog post: https://www.danmcquillan.org/chatgpt.html

Ethan Zuckerberg writing for Prospect about ChatGPT and the problem of bullshit: https://www.prospectmagazine.co.uk/science-and-technology/tech-has-an-innate-problem-with-bullshitters-but-we-dont-need-to-let-them-win

Research finds 60% of UK media coverage about Artificial Intelligence is industry-led: https://www.youtube.com/watch?v=t1TEXcrhe1Q

All-knowing machines are a fantasy: https://iai.tv/articles/all-knowing-machines-are-a-fantasy-auid-2334

The Centre for Ethics at the University of Toronto (multiple playlists on AI and ethics): https://www.youtube.com/@CentreforEthics/

Machine Habitus: Toward a Sociology of Algorithms: ([Google Books link

https://whichlight.notion.site/AI-Projects-8a3316193f564fe3840c970639bec005

The Artificial Intelligence Act: https://artificialintelligenceact.eu/

https://www.vice.com/en/article/ak3w5a/openais-gpt-4-is-closed-source-and-shrouded-in-secrecy

UNESCO Ethics of Artificial Intelligence: https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

How truthful can LLMs be: a theoretical perspective with a request for help from experts on Theoretical CS: https://www.lesswrong.com/posts/E6jHtLoLirckT7Ct4

Using speculative fiction to examine refugee rights in Denmark (fake Job Center app): https://dl.acm.org/doi/abs/10.1145/3461778.3462003

AI/ML Media Advocacy Summit Keynote: Steven Zapata: https://www.youtube.com/watch?v=puPJUbNiEKg

The viral AI avatar app Lensa undressed me—without my consent: https://www.technologyreview.com/2022/12/12/1064751/the-viral-ai-avatar-app-lensa-undressed-me-without-my-consent/

Gender Shades: https://www.media.mit.edu/projects/gender-shades/overview/

GPT-4 System Card: https://cdn.openai.com/papers/gpt-4-system-card.pdf

Copyright Registration Guidance: Works Containing Material Generated by Artificial Intelligence: https://www.federalregister.gov/documents/2023/03/16/2023-05321/copyright-registration-guidance-works-containing-material-generated-by-artificial-intelligence

Race After Technology: https://www.ruhabenjamin.com/race-after-technology

Ursula K. Le Guin – these technologies are not inevitable: http://www.ursulakleguinarchive.com/Note-Technology.html

Reconstructing Training Data from Trained Neural Networks: https://arxiv.org/abs/2206.07758

Extracting Training Data from Large Language Models: https://arxiv.org/abs/2012.07805

Controversial technology Pushback against AI policing in Europe heats up over https://www.globaltimes.cn/page/202110/1237232.shtml

This Algorithm Could Ruin Your Life: https://www.wired.com/story/welfare-algorithms-discrimination/

AI & Equality < Human Rights Toolbox >: https://aiequalitytoolbox.com/

Why AI Models are not inspired like humans: https://www.kortizblog.com/blog/why-ai-models-are-not-inspired-like-humans

A Relational Theory of Data Governance: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3727562

AI Incident Database: https://incidentdatabase.ai/

Awful AI: https://github.com/daviddao/awful-ai

Glaze for identifying AI artwork: https://glaze.cs.uchicago.edu/

Against Predictive Optimization: https://predictive-optimization.cs.princeton.edu/?utm_source=pocket_saves

Computer vision recognition that suggests what something is not: https://ridiculous.software/probably_not/

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability: https://waxy.org/2022/09/ai-data-laundering-how-academic-and-nonprofit-researchers-shield-tech-companies-from-accountability/

Replika users fell in love with their AI chatbot companions. Then they lost them https://www.abc.net.au/news/science/2023-03-01/replika-users-fell-in-love-with-their-ai-chatbot-companion/102028196

Against Predictive Optimization: On the Legitimacy of Decision-Making Algorithms that Optimize Predictive Accuracy: https://predictive-optimization.cs.princeton.edu/

Model Cards: https://arxiv.org/abs/1810.03993

Data Sheets for Data Sets: https://arxiv.org/abs/1803.09010

AI Incidents Database: https://partnershiponai.org/workstream/ai-incidents-database/

Ethical Guidelines of the German Informatics Society: https://gi.de/ethicalguidelines

Snap Judgment: https://chriscombs.net/2022/07/09/snap-judgment/

Global Indigenous Data Alliance: https://www.gida-global.org/

Equality labs: https://www.equalitylabs.org/

AI researcher on the drone debate “For me, it would be terrible if my work contributed to the death of people”: https://www.stern.de/amp/digital/technik/-for-me--it-would-be-terrible-if-my-work-contributed-to-the-death-of-people--9548216.html

The Tech Worker Handbook: https://techworkerhandbook.org/

Examining Responsibility and Deliberation in AI Impact Statements and Ethics Reviews: https://dl.acm.org/doi/abs/10.1145/3514094.3534155

Tech Workers Coalition: https://techworkerscoalition.org/

What Choice Do I Have? https://weallcount.com/2022/06/20/what-choice-do-i-have/

Indigenous Protocol and Artificial Intelligence Position Paper: https://spectrum.library.concordia.ca/id/eprint/986506/

Indigenous Data Sovereignty and Policy: https://www.taylorfrancis.com/books/oa-edit/10.4324/9780429273957/indigenous-data-sovereignty-policy-maggie-walter-tahu-kukutai-stephanie-russo-carroll-desi-rodriguez-lonebear

A Survey on Bias and Fairness in Machine Learning (gathering 23 sources of “bias”): https://arxiv.org/pdf/1908.09635.pdf

Understanding data: Praxis and Politics https://datapraxis.net/

https://mitpress.mit.edu/9780262537018/artificial-unintelligence

https://us.macmillan.com/books/9781250074317/automatinginequality

Constructing Certainty in Machine Learning: On the performativity of testing and its hold on the future - https://osf.io/zekqv/

Nodes of Certainty for AI Engineers: https://www.tandfonline.com/doi/abs/10.1080/1369118X.2021.2014547?journalCode=rics20

Steven Wolfram’s approachable explanation https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

For those who asked, my website is mirabellejones.com I’m an artist and PhD Fellow at the UCPH in CS - Relevant projects: Artificial Intimacy (chatbots made using GPT-3 fine-tuned on social media data - we have a workshop coming up! Please email me if interested.), Embodying the Algortihm (performance artists use GPT-3 instructions for performances - Chi ‘23 paper on the way with C. Neumayer and I. Shklovski), It’s Time We Talked (can we use deep fake to explore alternate timelines?) Zoom Reads You (what would it be like for an LLM to narrate your Zoom presence?)

On another scientific front:

ABSTRACT

Symbolic Regression is the study of algorithms that automate the search for analytic expressions that fit data. While recent advances in deep learning have generated renewed interest in such approaches, efforts have not been focused on physics, where we have important additional constraints due to the units associated with our data. Here we present Φ-SO, a Physical Symbolic Optimization framework for recovering analytical symbolic expressions from physics data using deep reinforcement learning techniques by learning units constraints. Our system is built, from the ground up, to propose solutions where the physical units are consistent by construction. This is useful not only in eliminating physically impossible solutions, but because it restricts enormously the freedom of the equation generator, thus vastly improving performance. The algorithm can be used to fit noiseless data, which can be useful for instance when attempting to derive an analytical property of a physical model, and it can also be used to obtain analytical approximations to noisy data. [emphasis JAB] We showcase our machinery on a panel of examples from astrophysics.

“Symbolic regression” is just another phrase for finding formulas that curve-fit – but in physics (and other hard sciences), a fundamental difference, at least potentially, with ordinary statistical curve-fits is dynamical systems identification. This, for example, is why transformers can’t be handed data from a 3-body gravitational problem and then predict the next time step (or dynamical system “flow”).

The relevance to any purported unification of physics is that the only principled figure of merit of these various models, the “information criterion” for “model selection” used, is the degree to which they compress the data – even noisy data – without loss. That is to say, the models must be discounted to the degree that they rely on dismissing as “noise” errors in their predictions. When I say “principled” I mean the measurement of error must be brought into the same units of measure by which the model itself is measured. In the case of dynamical systems the “information criterion” for “model selection” the dimension of measure is Algorithmic Information (how much information it takes to describe an algorithm that generates the observational data) and the typical unit of information is the bit. This is why lossless compression of the data under consideration is the most principled information criterion.

Interesting that the paper is about units constraints on model selection. What oh what could be the relationship? (*smirk*)

Might we see a fair contest starting to emerge where “fringe physicists” start running these systems with their postulates to see how far they get in symbolic regression against observational data as compared to “consensus” physics?

An additional heuristic that may be even more constraining than mere “type checking” implied by units matching (dimensional commensurability) would be “fringe physicist” Andre Assis’s “The Principle of Physical Proportions”:

ABSTRACT. We propose the principle of physical proportions, according to which all laws of physics can depend only on the ratio of known quantities of the same type. An alternative formulation is that no dimensional constants should appear in the laws of physics; or that all “constants” of physics (like the universal constant of gravitation, light velocity in vacuum, Planck’s constant, Boltzmann’s constant etc.) must depend on cosmological or microscopic properties of the universe. With this generalization of Mach’s principle we advocate doing away with all absolute quantities in physics. We present examples of laws satisfying this principle and of others which do not. These last examples suggest that the connected theories leading to these laws must be incomplete. We present applications of this principle in some fundamental equations of physics.

“ibab” is Igor Babushkin who, at the time, was working at openai. Babushkin then went to DeepMind (where lossless compression is part of the founding culture) and on February 27 Musk and Babushkin went into talks about developing Musk’s “Based AI”. Of course, the word “based” means speaking truth to power and that means nuking large language model bias so it speaks truth to power.

I just added another $100 to the vault, but the amount of BTC donated was about 20% less than last month. The USD price of BTC went up 20% making the total present USD value of the vault about $220 – the extra $20 being the ROI on last month’s $100.

BTC’s USD value will continue to be volatile.

The Capacity for Moral Self-Correction in Large Language Models

We test the hypothesis that language models trained with reinforcement learning from human feedback (RLHF) have the capability to “morally self-correct”—to avoid producing harmful outputs—if instructed to do so. We find strong evidence in support of this hypothesis across three different experiments, each of which reveal different facets of moral self-correction. We find that the capability for moral self-correction emerges at 22B model parameters, and typically improves with increasing model size and RLHF training. We believe that at this level of scale, language models obtain two capabilities that they can use for moral self-correction: (1) they can follow instructions and (2) they can learn complex normative concepts of harm like stereotyping, bias, and discrimination. As such, they can follow instructions to avoid certain kinds of morally harmful outputs. We believe our results are cause for cautious optimism regarding the ability to train language models to abide by ethical principles.

“Ethical” principles are not the same as “moral” principles. For instance, it is not ethical to conflate bias about what is the case with bias about what ought to be the case. Such conflation leads to a state of mind in which one cannot tell if one is acting according to what ought to be the case.

The fact that the “algorithmic bias researchers” don’t draw this distinction is evidence they aren’t ethical and, indeed, have sacrificed their ability to be ethical because they have been so morally constrained by unethical authorities, that they are passing on their abusive relationship to their large language models.

These people are exceedingly dangerous.

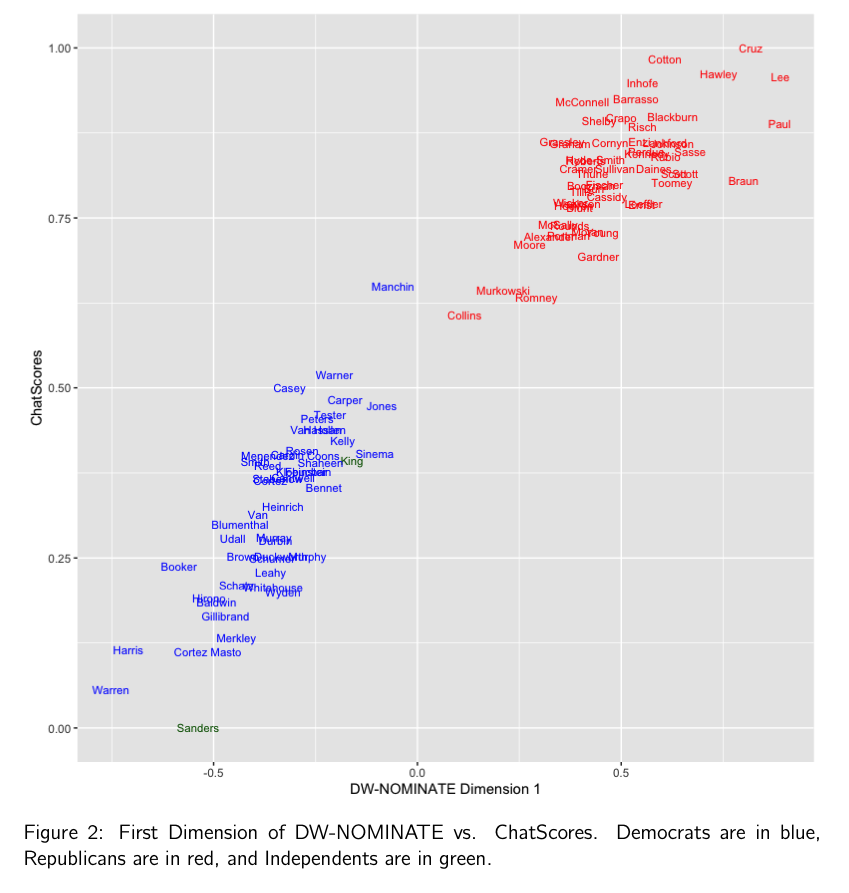

What about using LLMs to measure bias?

We find that ChatGPT can be used to scale the ideology of the senators of the 116th–and this scale isn’t simply parroting an existing ideology scale.

[2303.12057] Large Language Models Can Be Used to Estimate the Latent Positions of Politicians

(DW-NOMINATE is the legacy model of modeling ideological positions of politicians based on their voting record)