To call the instruction set of Intel’s x86 processors baroque is to understate the absurd complexity of a computer architecture that has “just grown” for four decades, accreting every trendy idea and industry fad along the way, to such an extent that, as discussed here on 2021-11-18, just answering the question “How Many Intel x86 Instructions Are There?” sends one deep down a rabbit hole with myriad branches and twists, all labeled with signs reading “It Depends”. For those who entered the computing game at a time when processors had instructions with names like ADD, LOAD, STORE, and JUMP, it’s hard to wrap your mind around Intel “innovations” such as VFMSUBADD132PD (“Fused Multiply-Alternating Subtract/Add of Packed Double-Precision Floating-Point Values”).

Because it has come to such a state that no two Intel processor models seem to have exactly the same instructions, in 1993, Intel introduced the CPUID instruction to allow programs to figure out, on the fly, precisely which instructions were available on the machine running them. This, in turn, which originally returned a simple bit map of features, has grown like Topsy, to the extent that the output from the cpuid Linux command, which interprets the information from the eponymous instruction, is 24416 lines long when run on Roswell, my Alienware M18 development machine with an Intel Core i9 processor. Here are just the “flags” that tell which instructions are present.

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 sdbg fma cx16 xtpr pdcm sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb ssbd ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid rdseed adx smap clflushopt clwb intel_pt sha_ni xsaveopt xsavec xgetbv1 xsaves split_lock_detect avx_vnni dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp hwp_pkg_req hfi umip pku ospke waitpkg gfni vaes vpclmulqdq rdpid movdiri movdir64b fsrm md_clear serialize arch_lbr ibt flush_l1d arch_capabilities

If you wonder why compiler code generator developers, once among the most clever and cheerful of programmers, now have a haunted look resembling long-term prison camp inmates, look no further than this mess and imagine yourself charged with generating high-performance code for all combinations of this pile of gimmicks, hacks, and kludges.

The only way Intel could make it worse is to segment the market so that some of the fancier instructions, such as those for 512 bit vector operations (which allow the processor to act upon multiple pieces of data with a single instruction: so-called “single instruction multiple data” [SIMD]) architecture), are only present in certain models of their processors but absent from others. For example, processors targeted at the “data centre" and supercomputing market may contain these fancy features, while those aimed at the consumer market and mobile devices may omit them.

But Intel didn’t stop there. Their recent generations of processors (12th and beyond) incorporate “performance” and “efficient” cores (P-core and E-core) within the same package. Intel describes the distinction as follows:

Performance-cores are:

- Physically larger, high-performance cores designed for raw speed while maintaining efficiency.

- Tuned for high turbo frequencies and high IPC (instructions per cycle).

- Ideal for crunching through the heavy single-threaded work demanded by many game engines.

- Capable of hyper-threading, which means running two software threads at once.

Efficient-cores are:

- Physically smaller, with multiple E-cores fitting into the physical space of one P-core.

- Designed to maximize CPU efficiency, measured as performance-per-watt.

- Ideal for scalable, multi-threaded performance. They work in concert with P-cores to accelerate core-hungry tasks (like when rendering video, for example).

- Optimized to run background tasks efficiently. Smaller tasks can be offloaded to E-cores — for example, handling Discord or antivirus software — leaving P-cores free to drive gaming performance.

- Capable of running a single software thread.

Do these P- and E-cores support the same instruction sets? Of course not! So this means that a program, running happily on a P-core and taking advantage of its fancy vector instructions, may find itself spontaneously teleported by the operating system to an E-core that doesn’t implement them. This is the kind of thing which inclines compiler and library developers to relax by playing Russian roulette with a semi-automatic pistol.

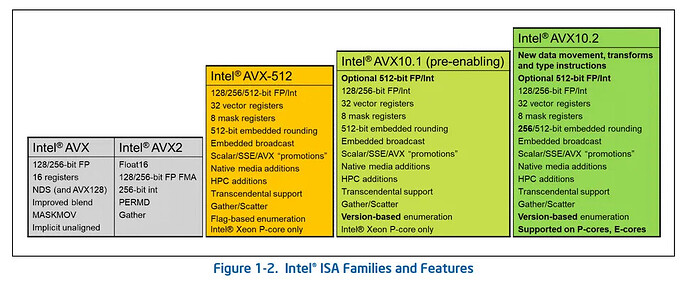

So bad have things become that Intel—Intel!—is attempting to sort out the mess by introducing, in future processor families, a new instruction set, dubbed AVX10, described by Tom’s Hardware as follows.

Intel posted its new APX (Advanced Performance Extensions) today and also disclosed the new AVX10 [at the bottom of this page] that will bring unified support for AVX-512 capabilities to both P-Cores and E-Cores for the first time. This evolution of the AVX instruction set will help Intel sidestep the severe issues it encountered with its new x86 hybrid architecture found in the Alder and Raptor Lake processors.

This will unify—kind of—the vector instructions supported by P- and E-cores. As long as a program uses instructions that operate on vectors no longer than 256 bits, it will run identically on P- and E-cores. Programs that require 512 bit vectors will still only run on P-cores, but presumably they can be tagged so an operating system can restrict them to only be dispatched there. Here is Intel’s chart of the evolution of AVX over the generations.

Does this herald a new era of simplification for Intel’s x64 architecture? Place your bets—my money is on the “no” side.