This is now easily my favorite AI YouTube channel, especially the four videos involving Grokked transformers, up to and including this one.

He sets forth a plausible business model for insurance at 54:24. Basically, if you have structured knowledge, as in a business database, you can synthesize a quasi-formal language corpus from it and train the Grokked LLM on that. The *conjectured* result is an expert system that groks the business domain.
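To make the "synthesize a corpus from a database" step concrete, here is a minimal sketch of what I have in mind. It is not the procedure from the video. The table name, the columns, and the `subject attribute value .` sentence format are all hypothetical, chosen only for illustration.

```python
def synthesize_corpus(table, key, rows):
    """Emit one quasi-formal sentence per non-key field of each row.

    `table`, `key`, and the sentence format are illustrative assumptions,
    not a schema or format taken from the video.
    """
    sentences = []
    for row in rows:
        subject = f"{table}:{row[key]}"
        for column, value in row.items():
            if column == key:
                continue  # the key only names the subject
            sentences.append(f"{subject} {column} {value} .")
    return sentences


# Hypothetical insurance records standing in for a business database.
policies = [
    {"policy_id": "P1", "holder": "C7", "coverage": "fire"},
    {"policy_id": "P2", "holder": "C7", "coverage": "flood"},
]

corpus = synthesize_corpus("policy", "policy_id", policies)
for sentence in corpus:
    print(sentence)
```

The resulting sentences would then be the training text. Whether a model trained on them actually groks the domain is exactly the conjectured part.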

It is rather bemusing to me that people are befuddled by the phenomenon of “grokking” (and “double descent”) when to me it seems rather obvious what is going on:

When you adjust your loss function to more closely approximate the Algorithmic Information Criterion for causal model selection, what ends up happening is that, at first, the conventional notion of an “error term” (squared error, etc.) dominates the gradient descent. Then it levels off, and you apparently get no further improvement in your loss function for a long time, because the regularization term(s) (the number of “parameters” in the model, i.e., the number of algorithmic bits in the model) make up a much smaller term in the loss function. But that term still contributes a small gradient, which keeps slowly reducing the number of parameters. It is this reduction in the number of parameters that signals the onset of “grokking”.
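The two timescales described above can be sketched with a toy example. Big assumptions here: the model is a two-weight linear fit, the data is made up, and an L1 penalty stands in as a crude proxy for "number of algorithmic bits". The name `train` and all the hyperparameters are mine. This shows only the loss-term dynamics, not grokking in a real transformer.

```python
def train(steps=2000, lr=0.1, lam=0.01):
    """Gradient descent on loss = mean squared error + lam * L1 norm of the weights."""
    # Toy data (assumed): y = 2 * x0. The second feature is always 0,
    # so w[1] is a redundant parameter that never affects the error term.
    xs = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
    ys = [2.0, 4.0, 6.0]
    w = [0.0, 1.0]
    history = []  # (error_term, complexity_term), recorded before each update
    for _ in range(steps):
        grad = [0.0, 0.0]
        error = 0.0
        for x, y in zip(xs, ys):
            residual = w[0] * x[0] + w[1] * x[1] - y
            error += residual ** 2 / len(xs)
            for i in range(2):
                grad[i] += 2.0 * residual * x[i] / len(xs)
        # Complexity term: L1 penalty, a stand-in for the parameter count.
        complexity = lam * sum(abs(wi) for wi in w)
        for i in range(2):
            sign = (w[i] > 0) - (w[i] < 0)  # subgradient of |w[i]|
            grad[i] += lam * sign
        history.append((error, complexity))
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w, history


w, history = train()
for step in (0, 50, 500, 1999):
    error, complexity = history[step]
    print(f"step {step:4d}  error={error:.6f}  complexity={complexity:.6f}")
```

In this toy, the error term collapses within the first few dozen steps and then sits on a plateau, while the much smaller complexity term keeps creeping down until the redundant weight is driven to roughly zero.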

Toward the end of the video he gets into ICL, or “in-context learning”. ICL, IMNSHO, is a dead end because it confuses inference with training. I don’t totally discount ICL’s potential either for some marginal benefit or for some sort of “black swan” breakthrough in ML, but I really don’t think it is a good idea to confuse inference with training. My simple test that demonstrates LLMs aren’t all they’re cracked up to be is intended to expose this distinction.

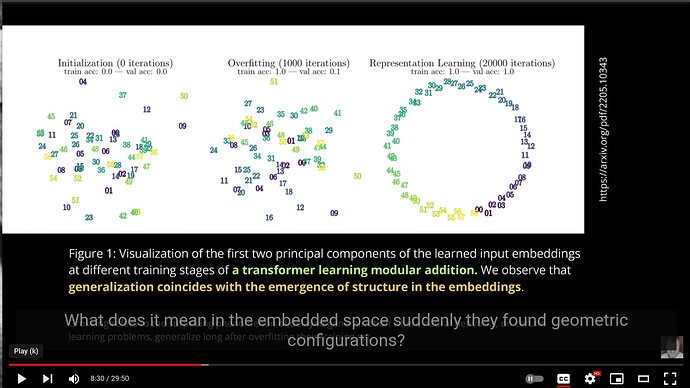

A visual representation of this appears in an earlier video:

The geometric symmetry shows that the parameters of the model become more compressible (i.e., less random) at the onset of grokking.
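A rough way to see what "more compressible" means for a set of parameters: quantize them and run them through a general-purpose compressor. This is only a sketch. `zlib` is a very crude stand-in for algorithmic (Kolmogorov) complexity, and the "weights" below are synthetic, not taken from any trained model. The period-64 cosine is just my assumed example of symmetric structure.

```python
import math
import random
import zlib


def compressed_size(weights, levels=256):
    """Quantize weights in [-1, 1] to one byte each; return the zlib-compressed size in bytes."""
    quantized = bytes(
        min(levels - 1, max(0, int((w + 1.0) / 2.0 * (levels - 1))))
        for w in weights
    )
    return len(zlib.compress(quantized, 9))


rng = random.Random(0)
n = 4096

# Synthetic stand-ins: unstructured weights vs. weights with periodic symmetry.
random_weights = [rng.uniform(-1.0, 1.0) for _ in range(n)]
periodic_weights = [math.cos(2 * math.pi * i / 64) for i in range(n)]

random_size = compressed_size(random_weights)
periodic_size = compressed_size(periodic_weights)
print(f"random:   {random_size} bytes")
print(f"periodic: {periodic_size} bytes")
```

The symmetric weights compress to far fewer bytes than the random ones, which is the sense in which structure in the parameters means fewer algorithmic bits.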