Since the advent of electronic digital computers, most have used what is called the von Neumann architecture, in which programs and data are stored in a memory unit separate from the central processing unit (CPU). As processor speeds have outpaced memory speeds, this separation has led to the “von Neumann bottleneck”: the overall performance of the computer is limited by the rate at which data can be transferred between the CPU and memory, not by the speed of the units themselves. John Backus identified this problem as early as 1977 in his Turing Award lecture, “Can Programming Be Liberated from the von Neumann Style?”:

> Surely there must be a less primitive way of making big changes in the store than by pushing vast numbers of words back and forth through the von Neumann bottleneck. Not only is this tube a literal bottleneck for the data traffic of a problem, but, more importantly, it is an intellectual bottleneck that has kept us tied to word-at-a-time thinking instead of encouraging us to think in terms of the larger conceptual units of the task at hand. Thus programming is basically planning and detailing the enormous traffic of words through the von Neumann bottleneck, and much of that traffic concerns not significant data itself, but where to find it.

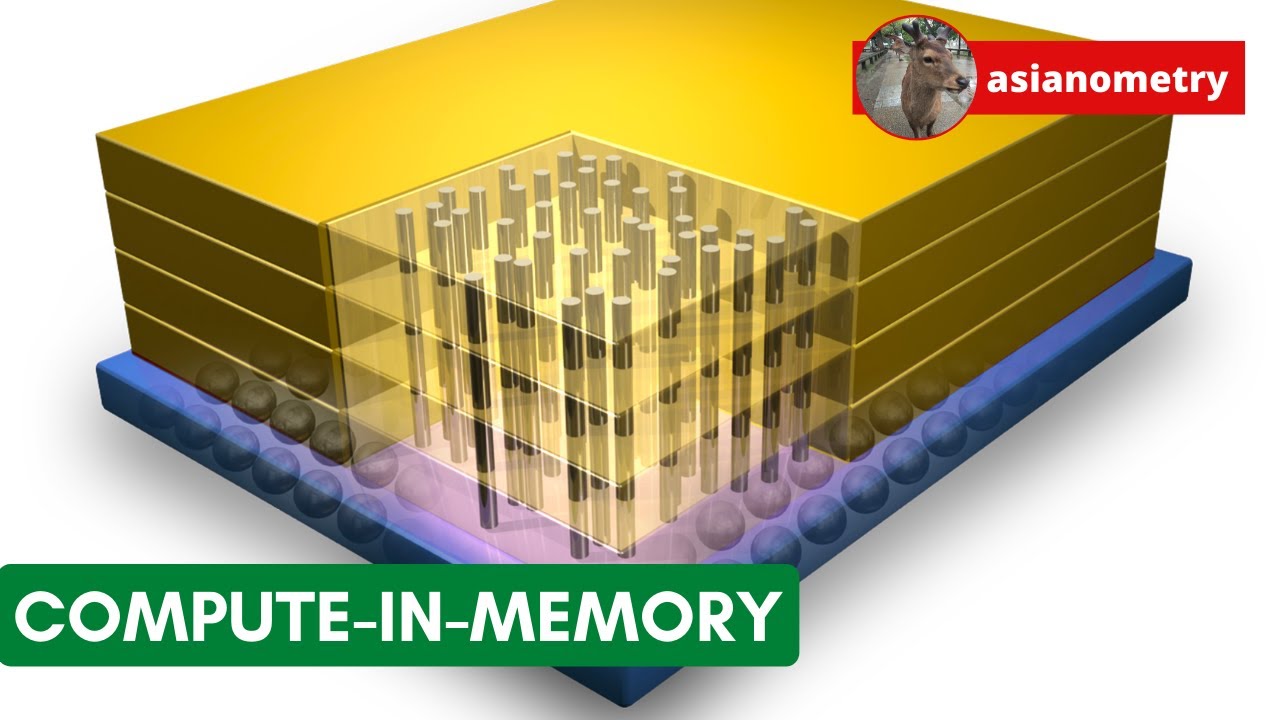

Over the years, this has led computer designers to explore ways in which compute elements and memory might be unified to widen the data transfer path and eliminate the bottleneck. But the silicon fabrication technologies used for mass memory and for random logic circuitry are very different, and simply putting the CPU and memory on the same chip may produce a system that performs worse than separately optimised components that tolerate the bottleneck.
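As a rough illustration, the bottleneck can be captured in a few lines of Python. This is a toy sketch, not a performance model from any real system: the function `runtime_seconds` and every figure in it are hypothetical, and it assumes computation and data transfer overlap perfectly, so whichever of the two takes longer sets the runtime.

```python
# Toy model of the von Neumann bottleneck.
# All figures are hypothetical and describe no real machine.

def runtime_seconds(ops: float, bytes_moved: float,
                    ops_per_second: float, bytes_per_second: float) -> float:
    """Estimate runtime assuming computation and data transfer fully overlap,
    so the slower of the two determines the total time."""
    compute_time = ops / ops_per_second
    transfer_time = bytes_moved / bytes_per_second
    return max(compute_time, transfer_time)

# Hypothetical workload: 1e9 operations touching 8e9 bytes (8 bytes per op).
OPS = 1e9
BYTES = 8e9

# Baseline machine, a machine with a CPU twice as fast,
# and a machine with a CPU-memory path twice as wide.
baseline = runtime_seconds(OPS, BYTES, ops_per_second=1e10, bytes_per_second=1e10)
faster_cpu = runtime_seconds(OPS, BYTES, ops_per_second=2e10, bytes_per_second=1e10)
wider_path = runtime_seconds(OPS, BYTES, ops_per_second=1e10, bytes_per_second=2e10)

print(f"baseline:      {baseline:.2f} s")
print(f"2x faster CPU: {faster_cpu:.2f} s")
print(f"2x wider path: {wider_path:.2f} s")
```

With these made-up numbers the transfer takes 0.8 s against 0.1 s of computation, so doubling the processor speed leaves the runtime at 0.80 s, while doubling the transfer rate halves it to 0.40 s. The model deliberately ignores the fabrication trade-off described above, where merging logic and memory on one chip can make each part slower.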

Still, designers are exploring ways to unify computing and memory, or at least to couple them more closely with less overhead, and these schemes may become important for the next generations of high-performance computing hardware.