In a paper posted to arXiV on 2023-01-26, “Text-To-4D Dynamic Scene Generation” (full text [PDF]), Meta AI researchers describe a system, MAV3D (“Make-A-Video3D”) which creates animated three-dimensional videos from text prompts. Here is the abstract:

We present MAV3D (Make-A-Video3D), a method for generating three-dimensional dynamic scenes from text descriptions. Our approach uses a 4D dynamic Neural Radiance Field (NeRF), which is optimized for scene appearance, density, and motion consistency by querying a Text-to-Video (T2V) diffusion-based model. The dynamic video output generated from the provided text can be viewed from any camera location and angle, and can be composited into any 3D environment. MAV3D does not require any 3D or 4D data and the T2V model is trained only on Text-Image pairs and unlabeled videos. We demonstrate the effectiveness of our approach using comprehensive quantitative and qualitative experiments and show an improvement over previously established internal baselines. To the best of our knowledge, our method is the first to generate 3D dynamic scenes given a text description.

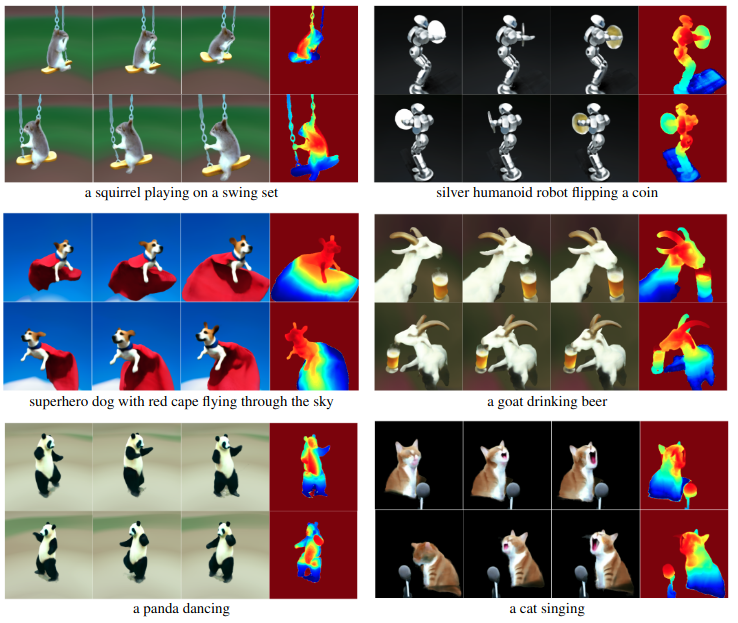

Here are some prompts and clips from the resulting generated video.

You can watch the actual animated videos on the companion page (confusingly with the same title as the research paper) “Text-To-4D Dynamic Scene Generation” on the MAV3D GitHub page. This page may require you to view it with the Google Chrome browser to show the videos.

MAV3D is not available to the public at this time.

Ever slowly, yet inexorably, the water rises.