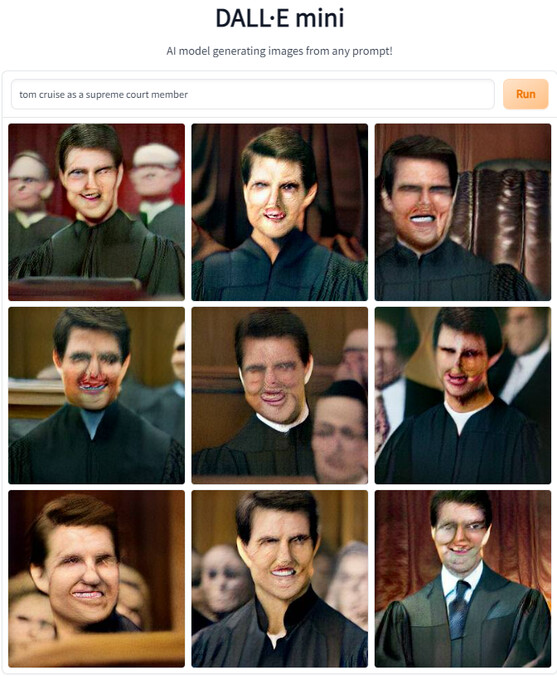

Errr…eepy-creepy?

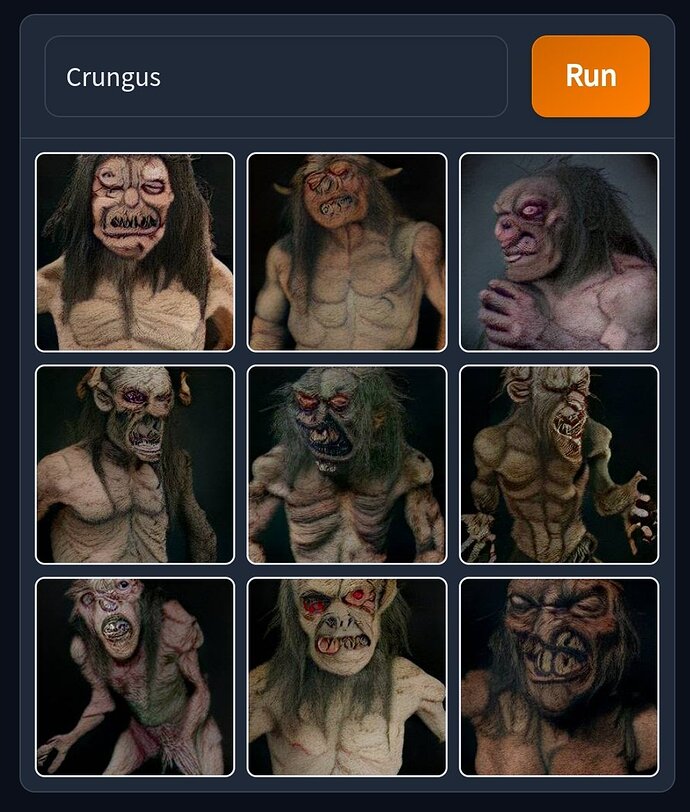

Here are the creepy results of the made-up word “Crungus”, courtesy of @Brainmage:

What is remarkable is that all of the images depict the same character, even though “Crungus” is not a real word at all.

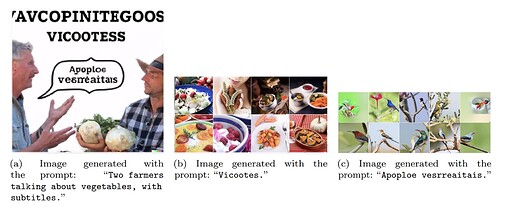

Similar results have been observed using DALL-E 2. For example, the following images are the result of the meaningless string “Apoploe vesrreaitais”:

These findings have led researchers to hypothesize that DALL-E 2 might possess a “hidden vocabulary”.

Hi Magus,

In the case of the “Apoploe,” surely the program went with “hoopoe.” Those birds look like hoopoes.

I wonder: Does the software gather feedback and learn from it, right there in the software lab, so to speak? And, does it learn from serial requests from end-users? I suppose it could infer feedback from the details of serial requests.

It looks like the software knows English and also knows how to play with English by making associations.

“Crungus” was given as a proper noun, so it made images of a character, an entity. Crungus is grungy. Crungus prominently features those teeth, which is why all the images are fairly close-up. Crungus likes to crunch us.

I think DALL-E 2 reads the papers - has been at least since the Iraq Surge.

I don’t think so. Models like GPT-3 and DALL-E are called “pre-trained” (GPT stands for “Generative Pre-trained Transformer”), and the weights in their neural networks are set by the training inputs supplied when the model was built (in the case of GPT-3, 410 billion encoded tokens, distilled into 175 billion machine learning parameters, or weights). But, as I understand it, there is no feedback from results after the training stage. You would have to change the training set and re-train the model to modify its response to given prompts.

One of the things that makes models like this practical to deploy is that while the training stage is enormously costly in computing power, running a prompt through the trained model to produce output is relatively inexpensive. This is why training Tesla’s self-driving software requires massive supercomputer hardware, but running it in real time can be done on a single-board computer in the car.
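To make the training-versus-inference distinction concrete, here is a toy sketch. It is emphatically not how GPT-3 or DALL-E work internally: it assumes a one-variable linear model, and the names `train` and `infer`, the learning rate, and the epoch count are all made up for illustration. Training makes thousands of passes adjusting the weights; inference is a single multiply-add that only reads them and never changes them, so nothing is "learned" from the prompts it answers.

```python
# Toy "pre-trained" model y = w*x + b (hypothetical illustration only).

def train(data, epochs=2000, lr=0.01):
    """Fit w and b by stochastic gradient descent; this is the costly stage."""
    w, b = 0.0, 0.0
    updates = 0
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on this example
            w -= lr * err * x      # adjust weights to reduce squared error
            b -= lr * err
            updates += 1
    return (w, b), updates


def infer(weights, x):
    """Run the frozen model: one multiply-add, weights are only read."""
    w, b = weights
    return w * x + b


# Training data drawn from the line y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in range(-5, 6)]
weights, updates = train(data)

print(f"training performed {updates} weight updates")
print(f"learned w={weights[0]:.3f}, b={weights[1]:.3f}")
print(f"inference for x=10: {infer(weights, 10.0):.3f}")
```

Changing the model's answers would mean changing `data` and calling `train` again, which mirrors the point above about re-training.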

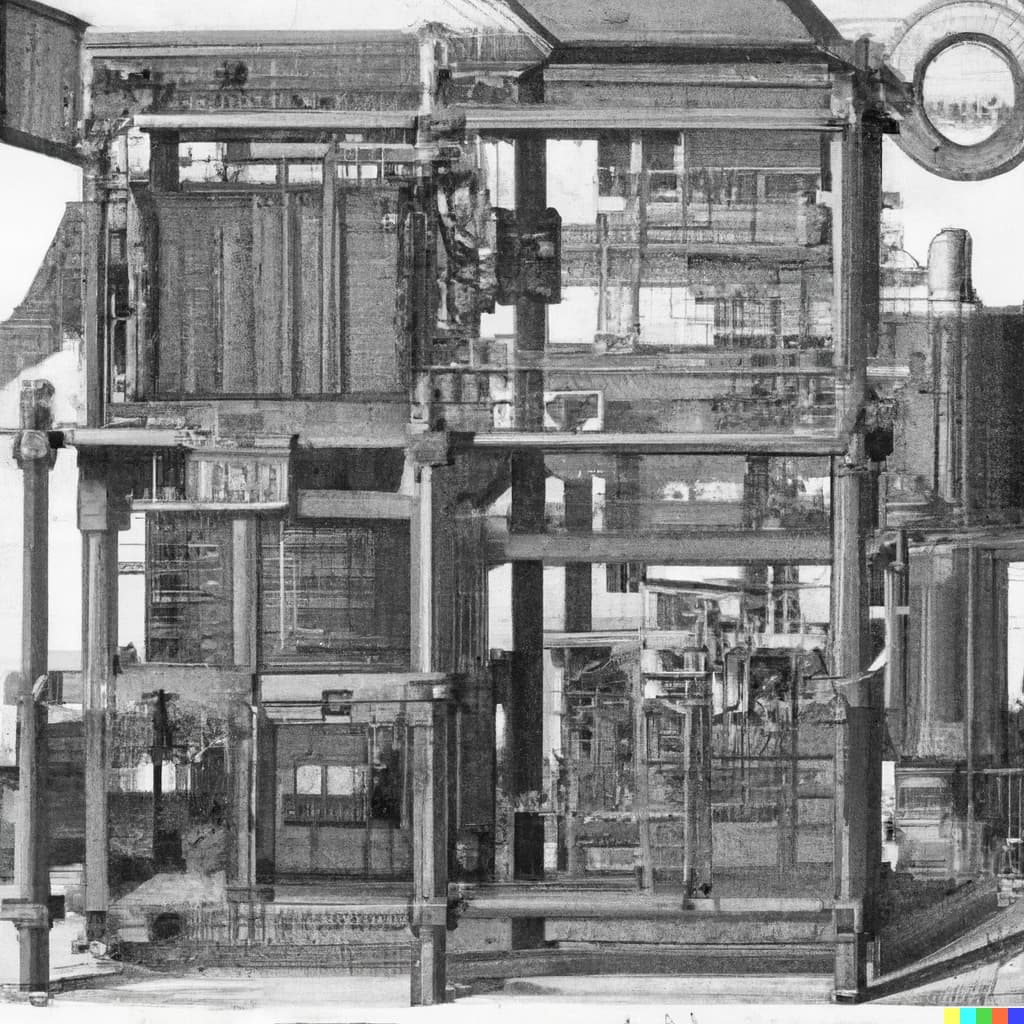

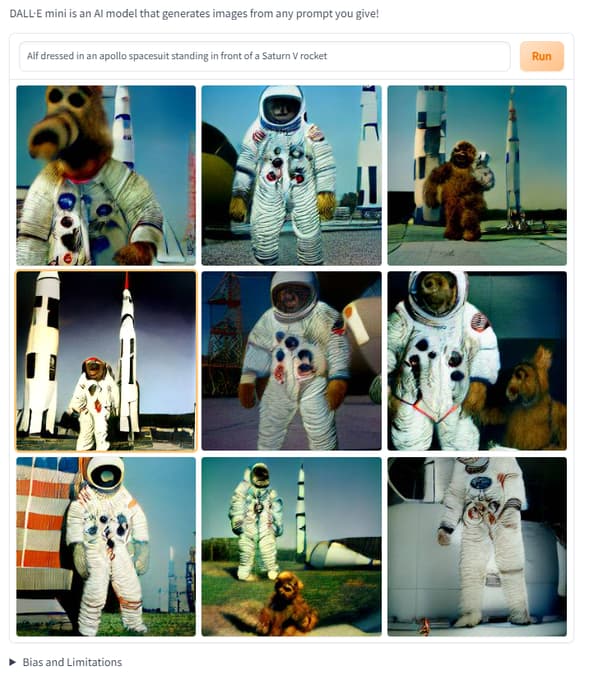

Well, it happened. Today Fourmilab was approved for access to OpenAI’s DALL-E 2. Here is my first try, from the prompt “plastic robot ants in computer room in comic book cover style”.

Utterly, terrifyingly, brilliant! (BrilliANT?)

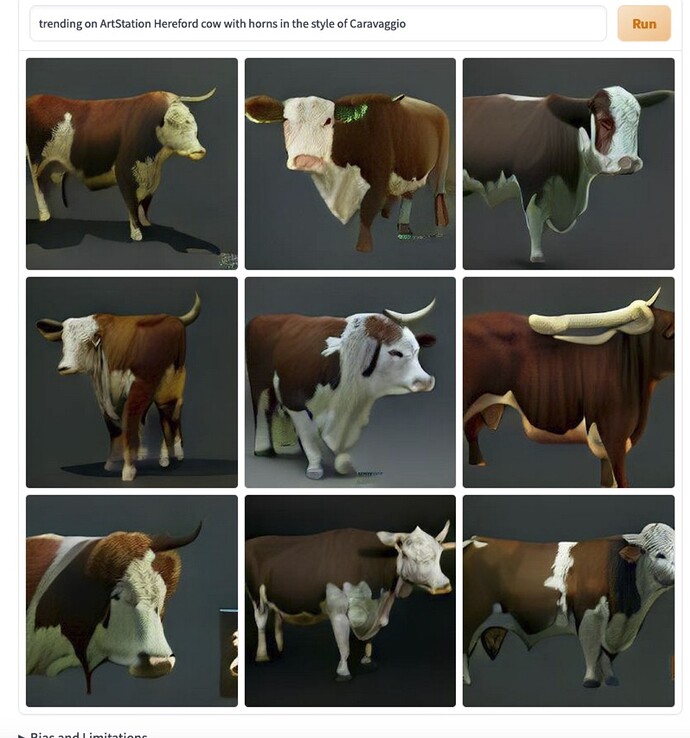

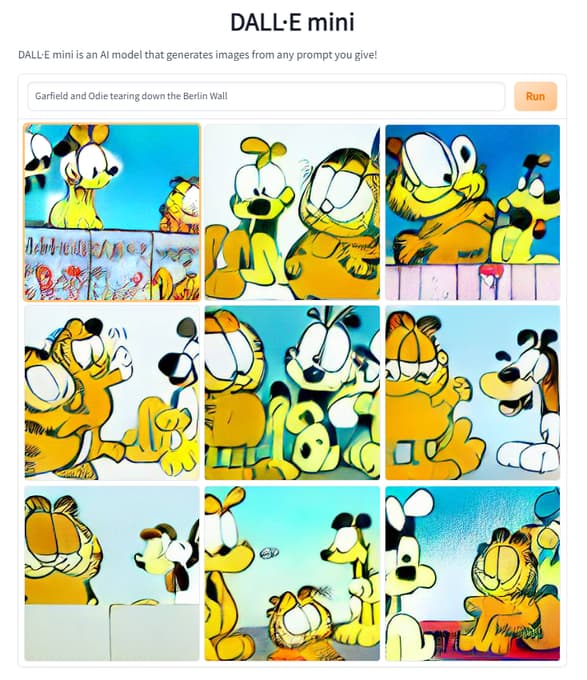

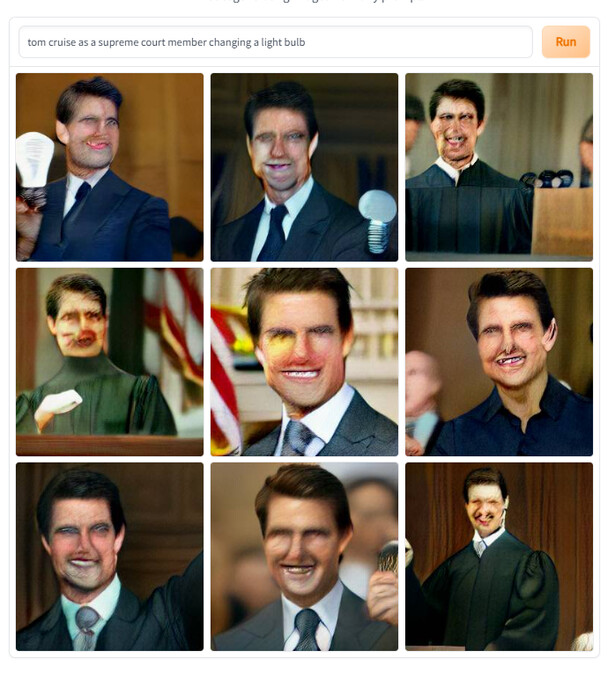

“Babbage analytical engine as drawn by Albrecht Durer, from the British Museum”

Large version of top right:

“programmer discovers alien message in human DNA, 1950s pulp novel cover”

My first reaction was that this looks more like Soviet Realism style, and then I noticed the writing on the last panel resembles Cyrillic?

I recall reading somewhere that some researchers speculate DALL-E “invented” its own language. Here is a paper with some examples. What’s weird is that the authors’ names look Greek (no joke) and the “caption” seems to be written in modern Greek.

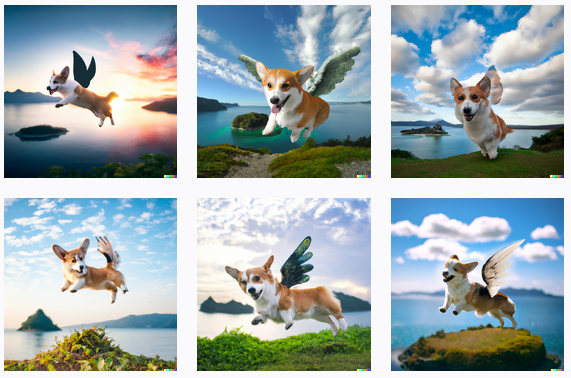

Everything’s better with corgis!

“small New England town disappears down to topsoil, as painted by Winslow Homer, in the National Art Gallery”

This is an illustration for my story “Free Electrons”.

My favourite:

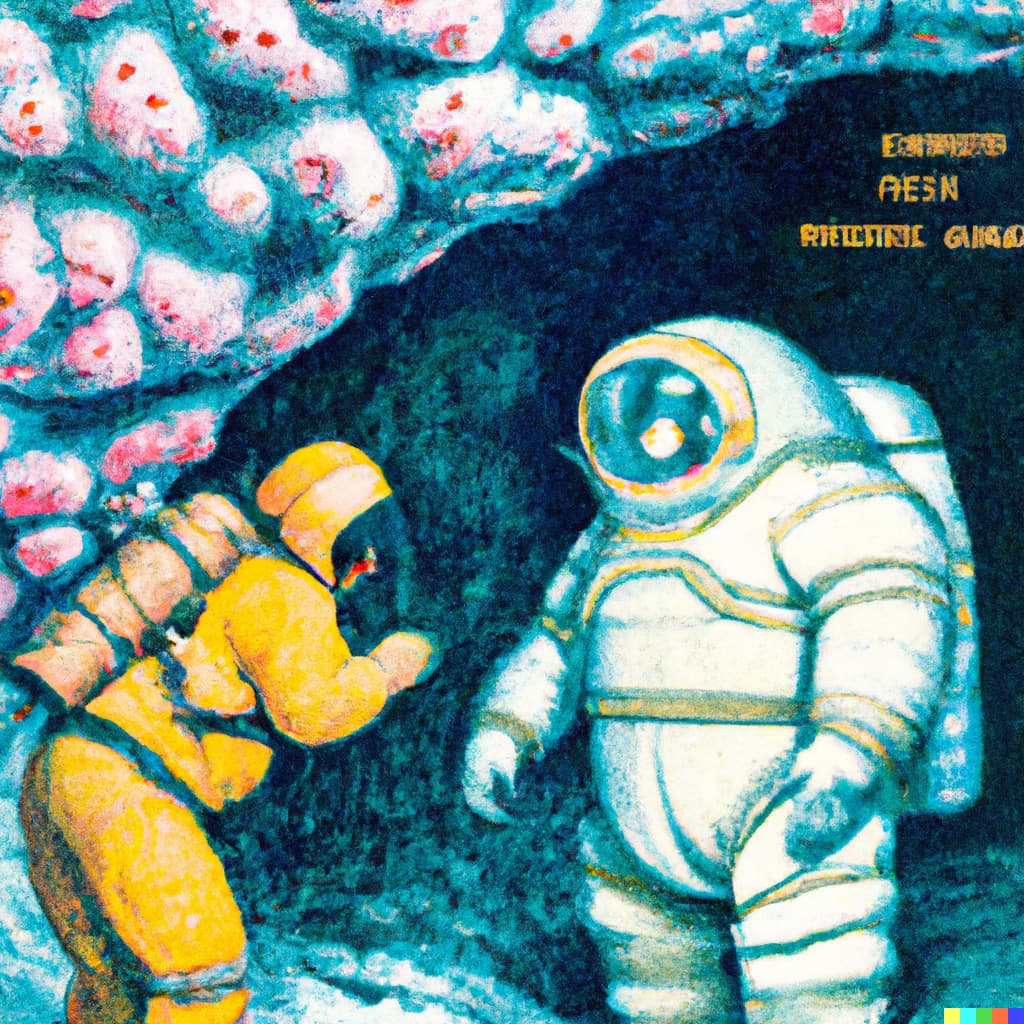

“astronauts in spacesuits encounter giant tardigrade in cave, science fiction pulp magazine color cover”

Here is a blow-up of the bottom right, in which the tardigrade appears to also be wearing a spacesuit that resembles Bibendum.

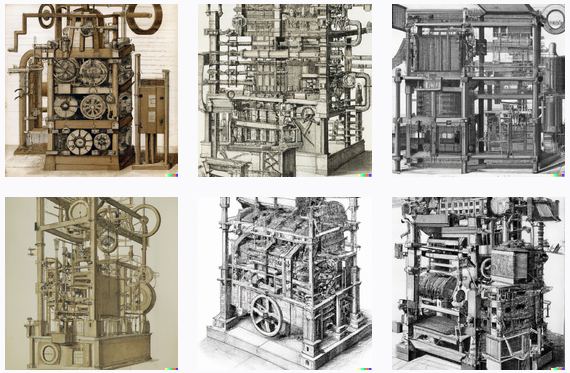

“oil painting of an orange cat in the style of van Gogh, around 1887, from the Van Gogh Museum, Amsterdam”

This experiment was prompted by a comment I made on another Web site on 2015-05-12 during a discussion of the potential of artificial intelligence.

Here is an example [from MIT Technology Review, “The Machine Vision Algorithm Beating Art Historians at Their Own Game”] of what happens when you put together big data, artificial neural networks, and exponentially growing computing power.

Imagine: it’s 2022.

“Siri, please paint a picture of my cat in the style of Van Gogh, around 1887.”

“Which cat: Meepo or Rataclysm?”

“Meepo.”

“Just a moment. All right, here you go. Would you like me to post this to all of your friends?”

Roll over, Ray Kurzweil: I even got the year right.