Ray tracing allows the creation of photorealistic images from three-dimensional computer models, incorporating multiple light sources, shadows, reflection, refraction, and material properties. Ray tracing is, however, extremely computationally expensive and, even with high-performance specialised hardware such as graphics processing units (GPUs), it may be difficult to produce images in real time or near-real time, as required by video games and virtual reality applications.

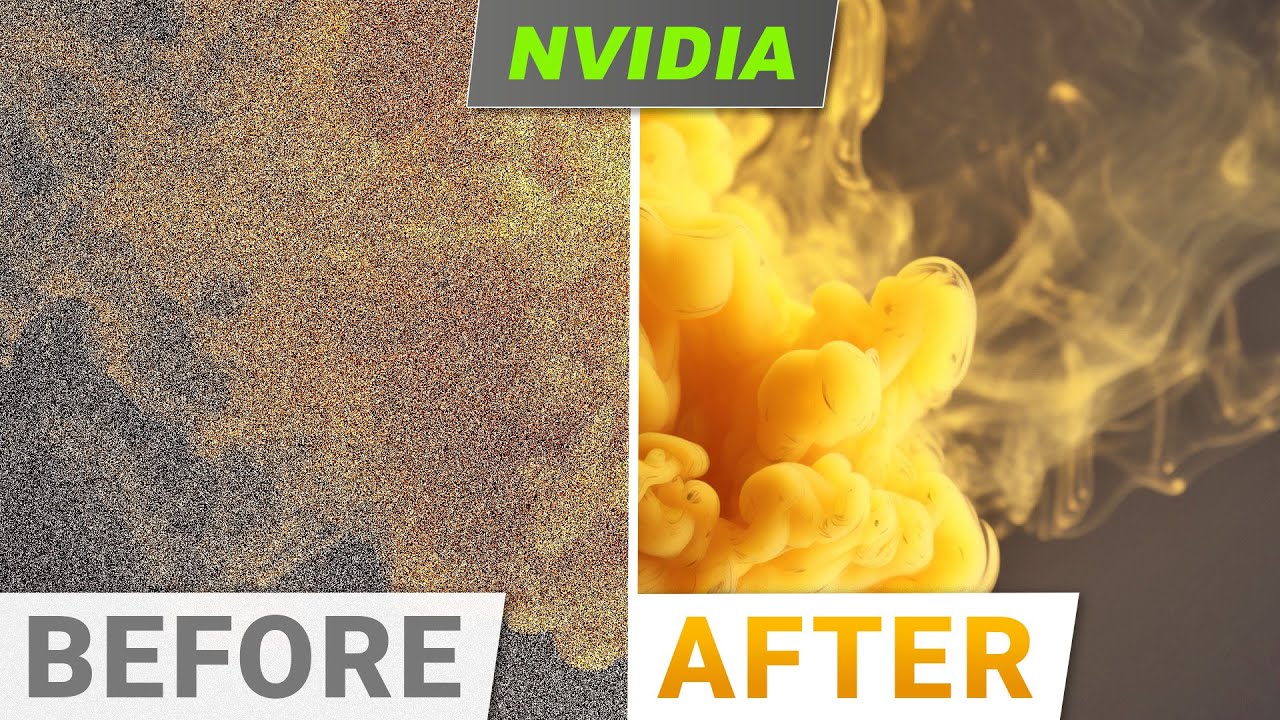

The time required for ray tracing can be reduced by computing fewer pixels per image and/or reducing the over-sampling used to simulate optical imaging effects, but this reduces image quality and the perceived realism of the images. A paper from researchers at NVIDIA Research, “Joint Neural Denoising of Surfaces and Volumes” (full text [PDF]), presents a system that achieves fast, high-quality image synthesis by using reduced-resolution ray tracing to “rough in” the image, then using a neural network trained on a wide variety of images to synthesise the missing detail from the limited data it was given. This is shown to produce resolution comparable to full ray tracing for many images, accounting for both surface rendering and volume effects such as smoke, haze, and fog.
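
The trade-off between rendering cost and image quality can be illustrated with a toy calculation. The sketch below is not the paper's method and contains no neural network; it only models a single hypothetical pixel, 30% of which is lit, and estimates its brightness by averaging random sub-pixel samples. The function names, the `coverage` value, and the number of trials are all assumptions chosen for illustration.

```python
import random


def render_pixel(samples_per_pixel, rng, coverage=0.3):
    """Estimate a pixel's brightness by averaging random sub-pixel samples.

    Hypothetical scene: a fraction `coverage` of the pixel is lit (1.0) and
    the rest is dark (0.0), so the exact answer is `coverage`.
    """
    hits = sum(1 for _ in range(samples_per_pixel) if rng.random() < coverage)
    return hits / samples_per_pixel


def rms_error(samples_per_pixel, trials=2000, coverage=0.3, seed=1):
    """Root-mean-square error of the estimate over many independent pixels."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        estimate = render_pixel(samples_per_pixel, rng, coverage)
        total += (estimate - coverage) ** 2
    return (total / trials) ** 0.5


if __name__ == "__main__":
    # Each step uses four times as many samples (and so four times the work).
    for spp in (1, 4, 16, 64):
        print(f"{spp:3d} samples/pixel: RMS error ~ {rms_error(spp):.3f}")
```

In this toy model the error falls only with the square root of the sample count, so quadrupling the work roughly halves the noise. That slow convergence is why filling in the image from a cheap, rough rendering is attractive compared with simply tracing more rays.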