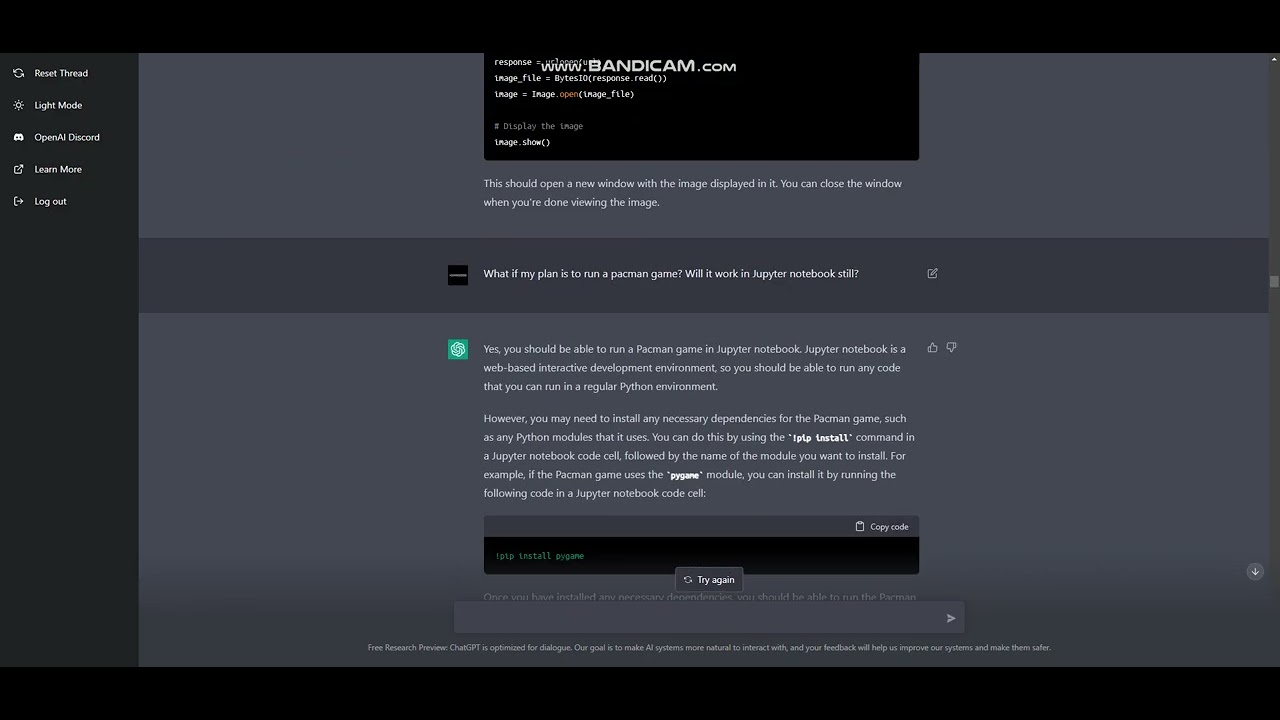

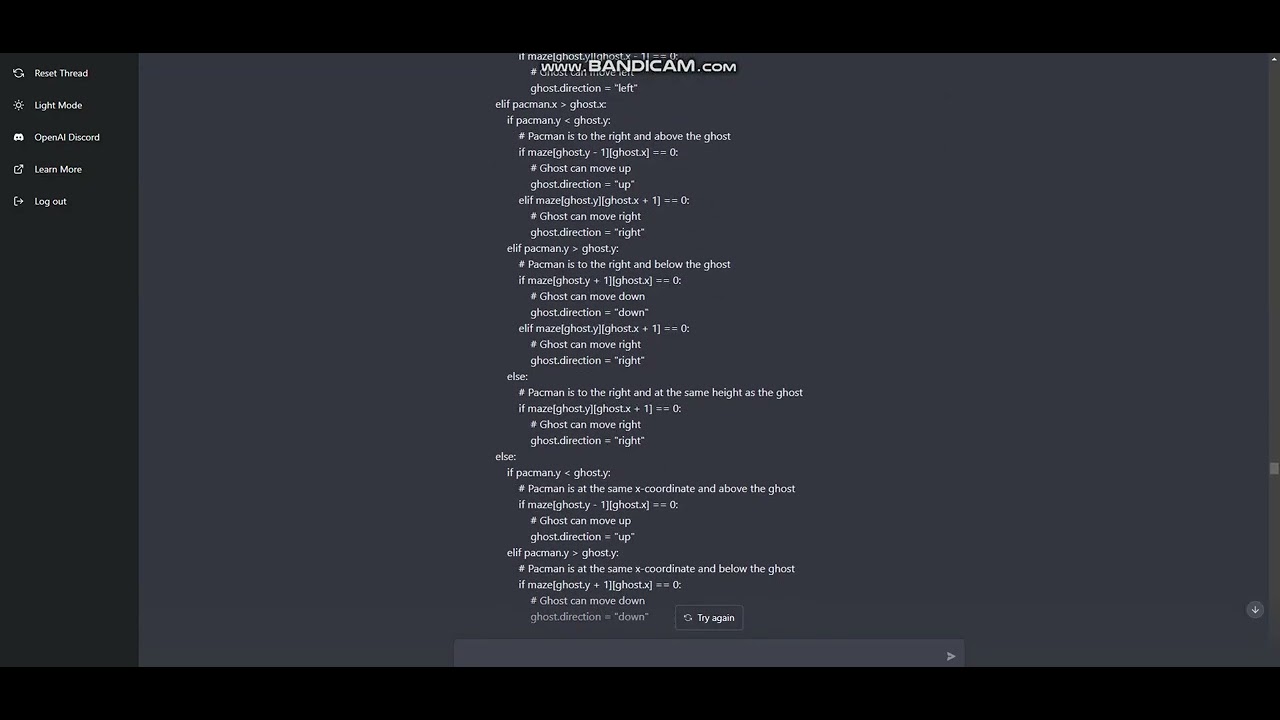

These two videos of programming a PacMan game (very high-speed scroll-by, so be prepared to advance the frames manually) illustrate what might be called a quasi pair programming session with ChatGPT.

This deserves its own thread here at Scanalyst because, as people have long recognized (and as I have recognized since my first pair programming experiences with remote programmers sharing screens on PLATO circa 1974), at least at the coding stage two heads are not just better than one but roughly “squared” better: the probability of an annoying error getting past both of you during coding is about the square of the probability of it getting past one programmer. Moreover, as with my PLATO experience, remote pair programming is a further multiplier, since one can choose from a number of folks who may share one’s interest. For example, while programming Spasim at the University of Iowa, I interacted heavily with the guys at Iowa State University and Indiana University, as well as the University of Illinois at Urbana-Champaign, to get up to speed on the programming language and environment, as well as techniques in game programming. Having an on-call programming partner will expand the reach of this productivity multiplier.
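The “squared” claim can be made concrete with a small sketch. It assumes each programmer misses a given error independently with the same probability, which is an idealization (real pairs share blind spots, so the true benefit is likely smaller). The function name `escape_probability` and its parameters are hypothetical, chosen only for illustration.

```python
def escape_probability(p_miss: float, reviewers: int = 2) -> float:
    """Probability that an error slips past every reviewer.

    Assumption (not from the original post): each reviewer misses the
    error independently with the same probability p_miss.
    """
    if not 0.0 <= p_miss <= 1.0:
        raise ValueError("p_miss must be between 0 and 1")
    if reviewers < 1:
        raise ValueError("reviewers must be at least 1")
    # Independent misses multiply, so a pair gives p_miss squared.
    return p_miss ** reviewers


if __name__ == "__main__":
    # Compare a solo programmer with a pair for a few illustrative miss rates.
    for p in (0.5, 0.1, 0.01):
        solo = escape_probability(p, reviewers=1)
        pair = escape_probability(p, reviewers=2)
        print(f"solo miss rate {solo:.4f} -> pair miss rate {pair:.4f}")
```

Under this assumption the lower the solo miss rate already is, the bigger the relative gain from a second pair of eyes: a programmer who lets 1 error in 10 through becomes a pair that lets about 1 in 100 through.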

I say “quasi” because ChatGPT isn’t watching you code. Instead, it acts as a much more efficient means of accessing stackexchange.com and other sources of wisdom from the school of hard knocks, and it appears to mislead far less often than one might have expected given the rate of bad advice on those online programmer advice sites.

This is a huge deal – a much bigger deal than other language model coding assistants.

PS: It may be wishful thinking to hope the economic value of this kind of assistance may drive an “arms race” between the Big-LLM folks to finally start recognizing they need to break through the statistical information barrier to the algorithmic information regime. If that happens, some of these companies may find themselves suffering an internal cage-match between their watchdog Algorithmic Bias Commissars (imposing kludges to align the LLM behavior with the theocracy) and the profit-seeking Algorithmic Bias Scientists (attempting to remove algorithmic bias so as to capture more network effects from customers that mean business rather than politics).

But don’t count on the latter winning. The Big Boys have way too much to lose if the truth gets out about how damaging the centralization of positive network externalities is to civilization.