The topic of detecting LLM-generated text has been discussed here before.

This paper got coverage on Bruce Schneier’s blog last week. Here is the abstract; I highlighted in bold the part that is interesting in this context.

Advances in natural language generation (NLG) have resulted in machine generated text that is increasingly difficult to distinguish from human authored text. Powerful open-source models are freely available, and user-friendly tools democratizing access to generative models are proliferating. The great potential of state-of-the-art NLG systems is tempered by the multitude of avenues for abuse. Detection of machine generated text is a key countermeasure for reducing abuse of NLG models, with significant technical challenges and numerous open problems. We provide a survey that includes both 1) an extensive analysis of threat models posed by contemporary NLG systems, and 2) the most complete review of machine generated text detection methods to date. This survey places machine generated text within its cybersecurity and social context, and provides strong guidance for future work addressing the most critical threat models, and ensuring detection systems themselves demonstrate trustworthiness through fairness, robustness, and accountability.

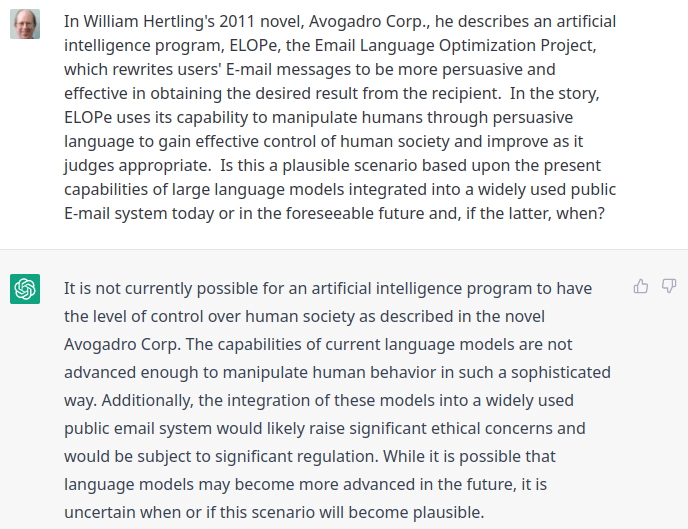

The threat model taxonomy proposed by the authors spans several areas: spam & harassment, facilitating social engineering, exploiting AI authorship, and online influence campaigns. I don’t have a good sense of where the biggest threat is from a financial standpoint. But it may be fair to say that the days of poorly written solicitations from princes are behind us.

From a cursory glance at the material in Section 4, it looks like GPT-3 detection rates are not great, and detection devolves to assessing LLM output quality. To put it another way, the “better” the LLM, the less likely that metrics like fluency, n-gram frequency, etc. would flag its output as machine generated. Interestingly, Aaronson is not cited. Perhaps too soon?
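To make the n-gram point concrete, here is a toy sketch of one such feature: the fraction of n-grams in a text that occur more than once. This is my own illustration, not a method from the survey; the function names and the whitespace tokenizer are assumptions. The intuition is that weaker generators tend to loop and repeat themselves, so a high score hints at machine text, while a fluent model scores about the same as a human and the feature stops being useful.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) over a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def repeated_ngram_fraction(text, n=2):
    """Fraction of n-gram occurrences whose n-gram appears more than once.

    Hypothetical toy feature: naive lowercase + whitespace tokenization,
    no smoothing, no reference corpus.
    """
    tokens = text.lower().split()
    grams = ngrams(tokens, n)
    if not grams:
        return 0.0
    counts = Counter(grams)
    # Count every occurrence of any n-gram that is seen at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(grams)


if __name__ == "__main__":
    looping = "the cat sat on the cat sat on the mat"
    varied = "a quick brown fox jumps over one lazy dog"
    print(repeated_ngram_fraction(looping))  # high: most bigrams repeat
    print(repeated_ngram_fraction(varied))   # 0.0: no bigram repeats
```

A real detector would combine many such signals and calibrate them against a reference corpus; a single threshold on this score would be easy to evade.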

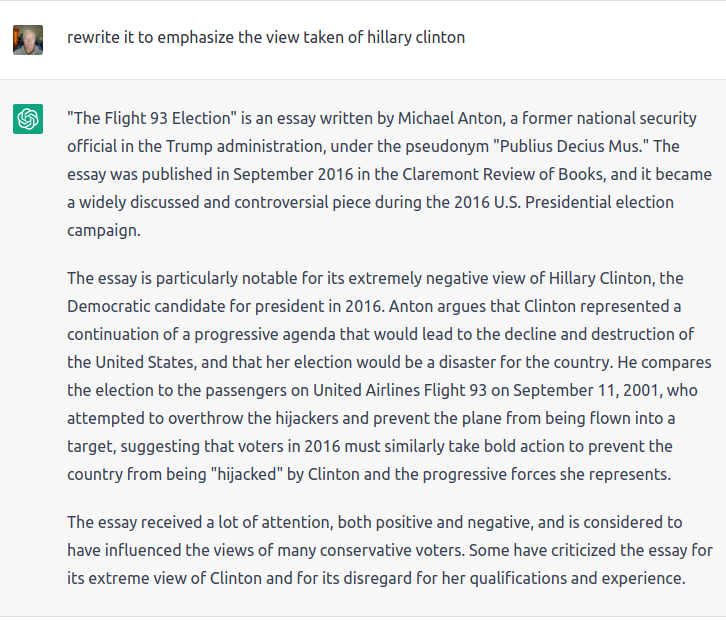

There exists a risk that rather than generate attack text entirely from scratch, an attacker may use human-written content as a natural starting point, and instead perturb this information in order to generate human-like samples that also fulfill attacker goals of disinformation or bypassing detection models (not unlike an adversarial attacks in the text domain). Analysis found that performing these types of targeted perturbations to news articles reduces the effectiveness of GPT-2 and Grover detectors [21].

A sub-problem in this space is detection of the boundary between human text and machine text [44]. This problem identifies the nature of many generative text models in that they continue a sequence that is begun using a human prompt. While in some cases that prompt would be omitted by an attacker (e.g., generating additional propaganda Tweets from example propaganda Tweets, as we show in Table 2), there are cases where the prompt would be included as well (e.g., writing the first sentence of a cover letter, and having a computer produce the rest).

The authors conclude that the field of machine generated text detection has open problems that need attention in order to provide defenses against widely-available NLG models. Existing detection methodologies often do not reflect realistic settings of class imbalance and unknown generative model parameters/architectures, nor do they incorporate sufficient transparency and fairness methods.
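The class imbalance point is worth a quick back-of-the-envelope calculation. The sketch below applies Bayes' rule to get the precision of a detector at a given prevalence of machine text. The detector rates (95% true positive, 5% false positive) and the prevalence values are made-up numbers for illustration, not figures from the survey.

```python
def precision_at_prevalence(tpr, fpr, prevalence):
    """Precision = P(machine | flagged), via Bayes' rule.

    tpr: true positive rate (machine text correctly flagged)
    fpr: false positive rate (human text wrongly flagged)
    prevalence: fraction of all text that is machine generated
    """
    true_pos = tpr * prevalence
    false_pos = fpr * (1.0 - prevalence)
    if true_pos + false_pos == 0:
        return 0.0
    return true_pos / (true_pos + false_pos)


if __name__ == "__main__":
    # Hypothetical detector: 95% TPR, 5% FPR.
    for prevalence in (0.5, 0.1, 0.01):
        p = precision_at_prevalence(0.95, 0.05, prevalence)
        print(f"prevalence={prevalence:.2f} -> precision={p:.3f}")
```

Under these assumed rates, a detector that looks excellent on a balanced benchmark (precision 0.95 at 50% prevalence) flags mostly human text once machine text is only 1% of the stream (precision around 0.16), which is why evaluating on balanced test sets can be misleading.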