Motivated by all the hysteria over “AI”, “Futurists” are weighing in on the odds of an “extinction level event”, both through prediction markets, with pseudo-monetary incentives for “superforecasters” selected under Metaculus’s non-monetary incentives, and through “Experts”. As might be expected, they conclude “AI” is the top contender for causing human extinction.

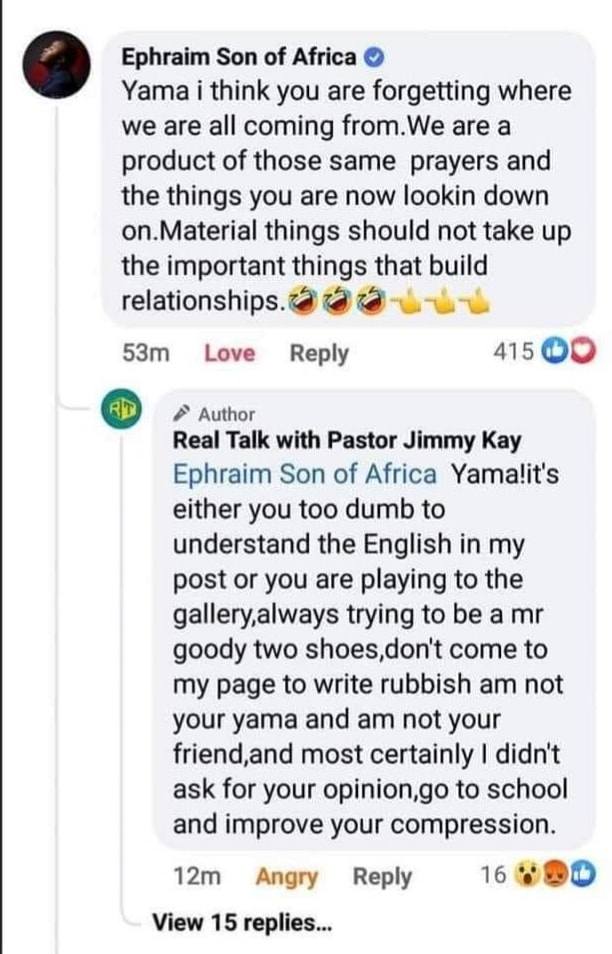

But the brouhaha over this “Tournament” is that the non-monetary prediction market “superforecasters” differ substantially from “the experts” in their predictions.

A pox on both their houses.

Let’s start with the “superforecasters” selected by Metaculus. One problem with non-monetary prediction markets is the lack of seriousness not only in judging but in posing the questions. This is of a piece with their primary weakness:

Their inability to appropriately scale rewards (& punishments) in the regime of exceedingly unlikely events with enormous outcomes – i.e. “Black Swans” – appropriate to public policy where, for example, the Biden administration has stated that a $10T/year expenditure to deal with global warming alone is an appropriate present investment.

Since the dominant non-monetary prediction market, Metaculus, in particular, was founded on the premise that public policy thinktanks (including intelligence agencies) had a poor track record due to lack of accountability for forecasts, this is a critique that gets to its raison d’être. Metaculus has a policy of rejecting claims that are so low probability in the social proof miasma that entertaining them would discredit Metaculus as serious.

However, this rationale proves, not based on social acceptance but on simple investment math, that it can’t be taken seriously by those waving their $10T/year dicks around at the world.

Let’s take global warming as an example of how Metaculus fails to deal with the “Black Swan”:

There are any number of “preposterous” energy technologies that would purportedly terminate the emission of greenhouse gases and draw CO2 out of the atmosphere within a very short period of time – technologies that are so “preposterous” that nothing, nada, zero, zip is invested in even bothering to “debunk” them because they are a priori “debunked” by the simple expedient of social proof’s estimated probability approaching 0. However, when we’re dealing with an NPV of over $200T on a cash stream of $10T/year, at the 30 year interest rate of 3.91% available to the US government, the question becomes exactly how close to the social proof probability of “0” do we have to come in order to invest nothing at all in even “debunking” those technologies? Let’s say a good, honest, “debunk” by a technosocialist empire that believes in bureaucratically incentivized “science” takes $10M (remember, you have to buy off Congress to get anything appropriated so I’m being kind to the poor bureaucrats). That’s $10M/$200T = 5e-8, the probability threshold at which you can no longer simply wave the imperial hand of social proof and dismiss the $10M appropriation.
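For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes the $10T/year stream is treated as a perpetuity discounted at 3.91%, which is the reading that yields a figure "over $200T" (roughly $256T); a strict 30 year annuity at the same rate comes out lower. The function and constant names are mine, for illustration only.

```python
def perpetuity_npv(annual_cash_flow: float, rate: float) -> float:
    """Present value of a constant cash flow that continues forever."""
    return annual_cash_flow / rate


def annuity_npv(annual_cash_flow: float, rate: float, years: int) -> float:
    """Present value of a constant cash flow paid for a fixed number of years."""
    return annual_cash_flow * (1 - (1 + rate) ** -years) / rate


ANNUAL_SPEND = 10e12   # $10T/year, the figure used in the text
RATE = 0.0391          # 30 year rate quoted in the text
DEBUNK_COST = 10e6     # $10M for one honest "debunk"

npv_perpetual = perpetuity_npv(ANNUAL_SPEND, RATE)
npv_30y = annuity_npv(ANNUAL_SPEND, RATE, 30)

# Break-even probability: below this, the $10M is not worth spending.
threshold_rounded = DEBUNK_COST / 200e12          # the text's rounded $200T
threshold_perpetual = DEBUNK_COST / npv_perpetual  # using the unrounded NPV

print(f"perpetuity NPV:      ${npv_perpetual / 1e12:.1f}T")
print(f"30 year annuity NPV: ${npv_30y / 1e12:.1f}T")
print(f"threshold at $200T:  {threshold_rounded:.1e}")
print(f"threshold at NPV:    {threshold_perpetual:.1e}")
```

Using the unrounded perpetuity value only pushes the threshold lower, so the rounded 5e-8 is, if anything, generous to the dismissers.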

Can Metaculus tolerate the loss of credibility it will suffer by entertaining claims with one in twenty million odds of rendering the whole problem moot?

To ask is to answer the question.

Moreover, even in the event that Metaculus grew some hair on its balls and started entertaining Black Swans, its scoring system cannot provide appropriate rewards to those predicting them because of nonlinear effects as the probabilities approach 0% or 100%.
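To put a rough number on that, here is a sketch under loud assumptions: it uses a plain logarithmic scoring rule, which is a standard proper scoring rule but not necessarily the formula Metaculus actually applies, and the crowd probability of 5e-10 is a hypothetical I picked for illustration. It computes how much better, in expectation, a forecaster scores by stating the true probability instead of the crowd's.

```python
import math


def expected_log_score(p_true: float, q_forecast: float) -> float:
    """Expected log score (in nats) of forecasting q when the true probability is p."""
    return p_true * math.log(q_forecast) + (1 - p_true) * math.log1p(-q_forecast)


def expected_gain(p_true: float, q_crowd: float) -> float:
    """Expected score advantage of reporting p_true instead of q_crowd."""
    return expected_log_score(p_true, p_true) - expected_log_score(p_true, q_crowd)


# Black Swan regime: the truth is 5e-8, the crowd says 100x less (hypothetical).
gain_tail = expected_gain(5e-8, 5e-10)

# Mid-range regime: the truth is 50%, the crowd says 10x less.
gain_mid = expected_gain(0.5, 0.05)

print(f"expected gain in the tail:  {gain_tail:.2e} nats")
print(f"expected gain in mid-range: {gain_mid:.2e} nats")
```

Under these assumptions, being right by a factor of 100 in the tail earns orders of magnitude less expected score than being right by a factor of 10 near 50%, even though the tail claim is the one with $200T riding on it.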

In this regard, it is particularly relevant that the now-defunct real money prediction market, Intrade, had a claim about “cold fusion” that was very similar to the one at Robin Hanson’s ideosphere, but which judged in the opposite direction. The only reason it was judged differently is that real money was involved. The judgement? Whatever the claims are about “cold fusion” regarding nuclear products, etc. the only relevant claim about it is “True”: Excess heat has been produced at levels that exceed those allowed by current interpretations of standard physical theory.

I turn now to our “Professional Experts”.

Oxford’s Future of Humanity Institute, closely associated with Metaculus during the COVID crisis, thinks that the Algorithmic Information Criterion for model selection is the same as the Bayesian Information Criterion. This is up there with confusing a finite state machine with a Universal Turing Machine, which, in turn, means they can’t understand what it means to create a data-driven model.

This, alone, disqualifies them from any authority regarding anything having to do with prediction.

On top of that, when I corrected them on this point, pointing out that reality in general, and a pandemic in particular, requires modeling system dynamics, which makes the Algorithmic Information Criterion necessary, they responded that dynamical systems exhibit chaotic behavior – so it is basically futile.

If so, that means one needn’t bother trying to predict complex systems… an argument that, were it true, would have cut off any investment in supercomputers to Seymour Cray in the 1960s.

We’re dealing with IDIOTS occupying the positions of “futurists”.

(And all of the above is my first hand experience dealing with them very early on in the COVID epidemic.)

Oh, one other thing that reveals the sheer idiocy of all these “prediction authorities” as it pertains to “AI” needs to be taken in context of Minsky’s final advice – not just to the field of AI but to the practice of science – specifically regarding predictions:

It seems to me that the most important discovery since Gödel was the discovery by Chaitin, Solomonoff and Kolmogorov of the concept called Algorithmic Probability which is a fundamental new theory of how to make predictions given a collection of experiences and this is a beautiful theory, everybody should learn it, but it’s got one problem, that is, that you cannot actually calculate what this theory predicts because it is too hard, it requires an infinite amount of work. However, it should be possible to make practical approximations to the Chaitin, Kolmogorov, Solomonoff theory that would make better predictions than anything we have today. Everybody should learn all about that and spend the rest of their lives working on it.

Marvin Minsky, panel discussion on The Limits of Understanding, World Science Festival, NYC, Dec 14, 2014

So why is it that no one in the machine learning world or in the prediction world pays attention to this by accepting lossless compression as the most principled model selection criterion? One might argue, as a steelman, that the Large Text Compression Benchmark, for example, can’t practically be applied to the 540GB text corpus used to train GPT3.5/ChatGPT because that is simply too much data to treat losslessly! And we might even accept that it would be simply outlandish to claim that putting $20M into losslessly compressing the 540GB corpus would produce a result comparable to GPT3.5, despite the fact that the Large Text Compression Benchmark’s 1GB is smaller by a factor of less than 1000 and that, with OpenAI’s market value at $20B, the LTCB has not enjoyed anything remotely comparable to a $20B/1000 = $20M investment.
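For readers who haven't seen compression used as a model selection criterion, here is a toy sketch. It treats three stock Python compressors as competing "models" of a text and picks the one with the smallest lossless output. This is only an illustration of the criterion: the sample text and function names are mine, general-purpose compressors are crude stand-ins for real predictive models, and a fair comparison would also count the size of the decompression program itself.

```python
import bz2
import lzma
import zlib


def compressed_sizes(data: bytes) -> dict:
    """Losslessly compress data with each candidate and return sizes in bytes."""
    codecs = {
        "zlib": (zlib.compress, zlib.decompress),
        "bz2": (bz2.compress, bz2.decompress),
        "lzma": (lzma.compress, lzma.decompress),
    }
    sizes = {}
    for name, (compress, decompress) in codecs.items():
        packed = compress(data)
        # Lossless means the original must be recoverable bit for bit.
        if decompress(packed) != data:
            raise ValueError(f"{name} failed to round-trip the data")
        sizes[name] = len(packed)
    return sizes


def best_model(sizes: dict) -> str:
    """Select the candidate with the shortest lossless description."""
    return min(sizes, key=sizes.get)


# Hypothetical sample; a real benchmark would use the actual corpus.
sample = ("Every programming language is described in Wikipedia. " * 200).encode("utf-8")

sizes = compressed_sizes(sample)
winner = best_model(sizes)

print(f"original: {len(sample)} bytes")
for name, size in sorted(sizes.items(), key=lambda item: item[1]):
    print(f"{name}: {size} bytes")
print(f"selected model: {winner}")
```

The point of the criterion is that there is nothing to argue about afterward: the shortest description that reproduces the data exactly wins.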

OK, so let’s grant all that, despite the fact that it is far from obvious.

So now, where are we?

We’re at the premise that the amount of data required to achieve GPT3.5 performance has to be on the order of at least 540GB.

Right?

But let’s step back for a moment and consider the actual knowledge contained in the 1GB Wikipedia corpus and what that knowledge implies in the way of ability to make predictions:

Every programming language is described in Wikipedia. Every scientific concept is described in Wikipedia. Every mathematical concept is described in Wikipedia. Every historic event is described in Wikipedia. Every technology is described in Wikipedia. Every work of art is described in Wikipedia – with examples. There is even the Wikidata project that provides Wikipedia a substantial amount of digested statistics about the real world.

Are you going to tell me that comprehension of all that 1GB is insufficient to generatively speak the truth as best it can be deduced consistent with all that knowledge – and that this notion of “truth” will not be at least comparable to that generatively spoken by GPT3.5?

Does truth have anything to do with prediction?