Programmers know how important notation can be. Much of the progress in programming languages over the decades has been in finding more expressive ways to write algorithms that, when coded in earlier languages, were cumbersome, difficult to understand, and prone to error.

(This post is based upon, and uses illustrations from, a Profound Physics article, “Einstein Field Equations Fully Written Out: What Do They Look Like Expanded?”, which I highly recommend, including its links that explain the underlying physics. I have adapted the presentation to focus upon the power of notation to express concepts, particularly as it applies to computer programming language design.)

Notation matters just as much in mathematics and science. The development of calculus in Britain was held back for years because British mathematicians, out of patriotic pride, clung to Newton’s clumsy “fluxion” notation, while mathematicians and physicists on the Continent adopted Leibniz’ notation, which is universally used today.

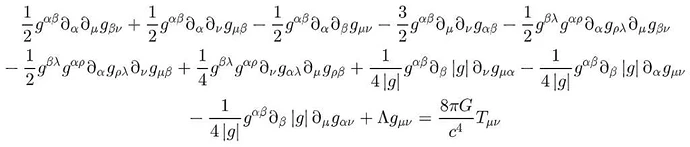

An excellent example of the power of notation can be seen in the Einstein field equations, which are the heart of general relativity, the geometric theory of gravitation. Written in modern tensor notation, the entire theory is expressed as:

But those tensor terms, such as G_{\mu\nu}, mean this simple equation actually expresses 16 different equations, one for each combination of the subscripts \mu and \nu which, as this is a four-dimensional theory of space and time, each take on values from 0 to 3. (Due to symmetry considerations, the 16 equations can actually be collapsed to “only” 10, but we can ignore this when considering notation.)
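The counting is easy to check mechanically. Here is a minimal sketch in Python (the names `DIM`, `all_pairs`, and `independent_pairs` are mine, chosen for illustration) that lists every (\mu, \nu) combination and then keeps only those that remain distinct when the tensors are symmetric, so that the (\mu, \nu) and (\nu, \mu) equations coincide:

```python
from itertools import product

DIM = 4  # spacetime dimensions: each index runs over 0..3

# Every (mu, nu) pair names one component equation.
all_pairs = list(product(range(DIM), repeat=2))

# For symmetric tensors the (mu, nu) and (nu, mu) equations are the same,
# so only pairs with mu <= nu are independent.
independent_pairs = [(mu, nu) for mu, nu in all_pairs if mu <= nu]

print(len(all_pairs))          # 16
print(len(independent_pairs))  # 10
```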

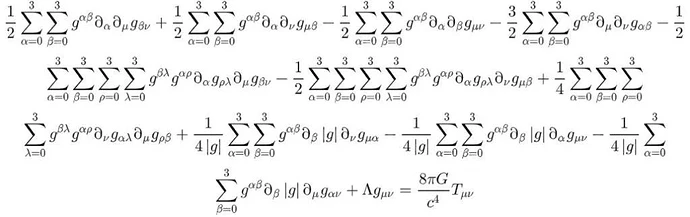

If the left side of the equation is expanded out in terms of the metric tensor g_{\mu\nu}, we get the equivalent equation.

But the above equation uses a shorthand notation called the “Einstein summation convention” in which an index variable appearing twice in a term, once as a superscript and once as a subscript, implies summation over all of the values of the index. So, in this case, the \alpha, \beta, \rho, and \lambda superscripts and subscripts in the left-hand side terms express summations which, if written out explicitly, look like:

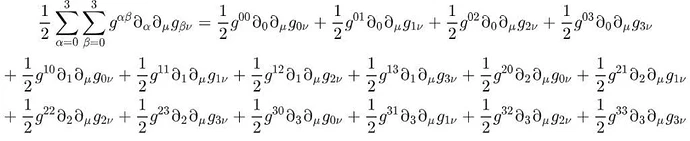
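To a programmer, each repeated index is simply a loop. As a rough sketch, and not a term taken from the field equations themselves, consider the small contraction g^{\alpha\beta} v_\alpha v_\beta. The values below are made up for illustration: `g_inv` is the flat-space Minkowski metric with signature (-, +, +, +), and `v` is an arbitrary set of four components.

```python
DIM = 4  # each index runs over 0..3

# Hypothetical example data: the Minkowski (flat-space) inverse metric
# and an arbitrary vector with lower-index components v_alpha.
g_inv = [[-1, 0, 0, 0],
         [0, 1, 0, 0],
         [0, 0, 1, 0],
         [0, 0, 0, 1]]
v = [2, 1, 0, 3]

def contract(g_inv, v):
    """Compute g^{alpha beta} v_alpha v_beta, writing out both implied sums."""
    total = 0
    for alpha in range(DIM):      # implied sum over alpha
        for beta in range(DIM):   # implied sum over beta
            total += g_inv[alpha][beta] * v[alpha] * v[beta]
    return total

print(contract(g_inv, v))  # -4 + 1 + 0 + 9 = 6
```

Two repeated indices hide 16 products behind three symbols; every additional summed index multiplies that count by four.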

But, of course, the \sum notation for summation is also a shorthand, albeit with a longer pedigree than Einstein’s (it was invented by Leonhard Euler in the 18th century), which means that if we completely wrote out just the first term in the equation above, we’d have:

Expanding the entire left-hand side is left as an exercise for you, the gentle reader.
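Or you could hand the exercise to a machine. The following is a minimal sketch, assuming a term can be described by a format template with one placeholder per summed index; the `expand` helper and the example term g^{ab} T_{ab} are hypothetical illustrations, not the actual terms of the field equations.

```python
from itertools import product

DIM = 4  # each index runs over 0..3

def expand(template, summed_indices):
    """Write out every product hidden behind the summation convention.

    `template` is a str.format pattern with one named field per summed
    index (a hypothetical representation chosen for this illustration).
    """
    terms = []
    for values in product(range(DIM), repeat=len(summed_indices)):
        terms.append(template.format(**dict(zip(summed_indices, values))))
    return " + ".join(terms)

# g^{ab} T_{ab}: two summed indices, so 4**2 = 16 products.
expanded = expand("g^{{{a}{b}}} T_{{{a}{b}}}", ["a", "b"])
print(expanded)
```

The output begins `g^{00} T_{00} + g^{01} T_{01} + ...` and runs to 16 products. A term with four summed indices would run to 4**4 = 256, which is about where human patience gives out.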

Now, the point is, for programmers, that all of this tedious work expanding compact and expressive notation into what is really going on at the bottom level (which you have to do if you’re going to, you know, actually calculate with these equations) is something at which computers are very good and humans are…not that great. Good notation allows translating thought into computation with minimum risk of error and makes a program readable in terms of the problem it’s solving, not how the computer goes about getting the answer out.