This post's title (in which I have expanded acronyms and symbols to improve readability for non-specialists) is that of a paper published on arXiv on 2023-06-05, “Transformative AGI by 2043 is <1% likely” (full text [PDF]). The paper was a submission to the Open Philanthropy AI Worldviews Contest, for which papers had to be submitted no later than 2023-05-31. The contest asked entrants to address one of two questions, the first of which is the topic of the present paper:

Question 1: What is the probability that AGI is developed by January 1, 2043?

where “AGI” (Artificial General Intelligence) is defined as “something like” “AI that can quickly and affordably be trained to perform nearly all economically and strategically valuable tasks at roughly human cost or less”. The authors choose to address “transformative AI”, which has been defined elsewhere as:

[A]rtificial intelligence that constitutes a transformative development, i.e. a development at least as significant as the agricultural or industrial revolutions.

The authors, artificial intelligence researchers at OpenAI and at the artificial intelligence unit of the pharmaceutical company GSK (GlaxoSmithKline), conclude that, based upon the cascading conditional probabilities of the individual steps (great or modest filters) which must all be passed before transformative AI emerges, the probability of its achievement before 2043 is around 0.4%. This is far below the estimates of many artificial intelligence enthusiasts, publicists, seekers of public funds or private investment, pearl-clutchers, doom-sayers, and would-be regulators of this emerging technology which, in their view, is imminently arriving.

Here is the abstract of the paper, summarising the argument.

This paper is a submission to the Open Philanthropy AI Worldviews Contest. In it, we estimate the likelihood of transformative artificial general intelligence (AGI) by 2043 and find it to be <1%.

Specifically, we argue:

● The bar is high: AGI as defined by the contest—something like AI that can perform nearly all valuable tasks at human cost or less—which we will call transformative AGI is a much higher bar than merely massive progress in AI, or even the unambiguous attainment of expensive superhuman AGI or cheap but uneven AGI.

● Many steps are needed: The probability of transformative AGI by 2043 can be decomposed as the joint probability of a number of necessary steps, which we group into categories of software, hardware, and sociopolitical factors.

● No step is guaranteed: For each step, we estimate a probability of success by 2043, conditional on prior steps being achieved. Many steps are quite constrained by the short timeline, and our estimates range from 16% to 95%.

● Therefore, the odds are low: Multiplying the cascading conditional probabilities together, we estimate that transformative AGI by 2043 is 0.4% likely. Reaching >10% seems to require probabilities that feel unreasonably high, and even 3% seems unlikely.

Thoughtfully applying the cascading conditional probability approach to this question yields lower probability values than is often supposed. This framework helps enumerate the many future scenarios where humanity makes partial but incomplete progress toward transformative AGI.
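The “cascading conditional probability” approach is the chain rule of probability. Writing the required steps as events E₁ … Eₙ, with each step's estimate conditional on all earlier steps having been achieved, the joint probability is their product. The second line is my own illustration, not the authors' calculation: if each of the ten steps listed below had the same conditional probability p, a joint probability of 10% would require every one of them to be about 79% likely.

```latex
P(E_1 \cap E_2 \cap \dots \cap E_n)
  = P(E_1)\, P(E_2 \mid E_1)\, P(E_3 \mid E_1 \cap E_2) \cdots P(E_n \mid E_1 \cap \dots \cap E_{n-1})

p^{10} \ge 0.1 \quad\Longrightarrow\quad p \ge 0.1^{1/10} \approx 0.794
```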

The entire paper is 114 pages long and contains detailed arguments and justifications for the probability estimates that contribute to the bottom line. A four-page “Executive Summary” presents the conclusions and the primary areas in which they may be challenged. The following is a summary of the summary, in which I simply list the events as the authors define them, all of which must occur for transformative AI to emerge, along with the authors' estimate of the probability of each occurring by 2043.

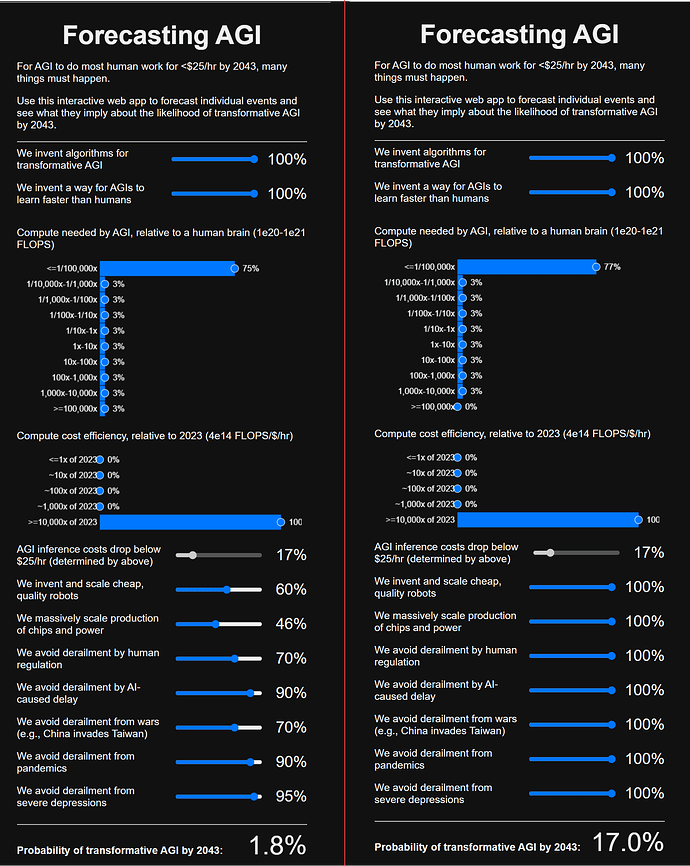

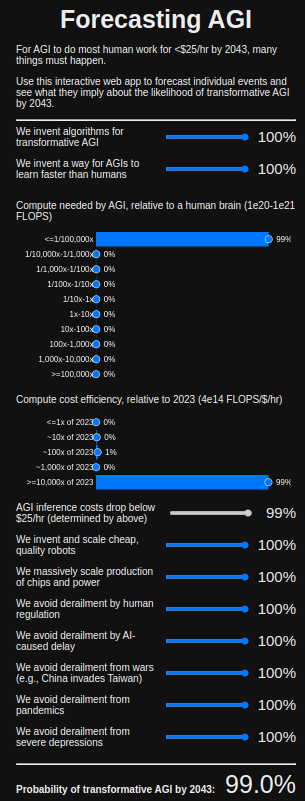

| Event | Probability by 2043 |
|---|---|
| We invent algorithms for transformative AGI | 60% |
| We invent a way for AGIs to learn faster than humans | 40% |
| AGI inference costs drop below $25/hr (per human equivalent) | 16% |
| We invent and scale cheap, quality robots | 60% |
| We massively scale production of chips and power | 46% |
| We avoid derailment by human regulation | 70% |
| We avoid derailment by AI-caused delay | 90% |
| We avoid derailment from wars (e.g., China invades Taiwan) | 70% |
| We avoid derailment from pandemics | 90% |
| We avoid derailment from severe depressions | 95% |
| Joint probability | 0.4% |

If you disagree with any of these estimates, you can plug your own into this “Forecasting AGI” page and see how that affects the computed joint probability.
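For readers who would rather experiment offline, a few lines of Python reproduce the bottom line. This is my own minimal sketch, not code from the paper or from the “Forecasting AGI” page; the short step labels and the function name `joint_probability` are hypothetical. It multiplies the ten conditional estimates given above and, as a crude sensitivity check, recomputes the product with each step in turn set to certainty.

```python
from math import prod

# The authors' estimates for each step by 2043, each conditional on the
# earlier steps having been achieved. The short labels are my own (hypothetical).
ESTIMATES = {
    "algorithms for transformative AGI": 0.60,
    "AGIs learn faster than humans": 0.40,
    "inference costs below $25/hr": 0.16,
    "cheap, quality robots": 0.60,
    "massive scale-up of chips and power": 0.46,
    "no derailment by regulation": 0.70,
    "no derailment by AI-caused delay": 0.90,
    "no derailment by wars": 0.70,
    "no derailment by pandemics": 0.90,
    "no derailment by severe depressions": 0.95,
}


def joint_probability(estimates, overrides=None):
    """Multiply the conditional step probabilities, optionally replacing some of them."""
    overrides = overrides or {}
    unknown = set(overrides) - set(estimates)
    if unknown:
        raise KeyError(f"unknown steps: {sorted(unknown)}")
    merged = {**estimates, **overrides}
    return prod(merged.values())


if __name__ == "__main__":
    print(f"Authors' estimates: {joint_probability(ESTIMATES):.2%}")
    # Sensitivity: how far does the bottom line rise if one step is made certain?
    for step in ESTIMATES:
        p = joint_probability(ESTIMATES, {step: 1.0})
        print(f"  if '{step}' were certain: {p:.2%}")
```

With the authors' numbers the product is about 0.40%. Even making their most pessimistic step, inference costs, certain raises the joint probability only to about 2.5%, since the remaining nine factors still multiply together.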

I should note that Open Philanthropy, which sponsored the essay contest, is identified with the “effective altruism” cult. I do not know whether the authors of this paper subscribe to this philosophy or the extent to which it may have influenced this work.