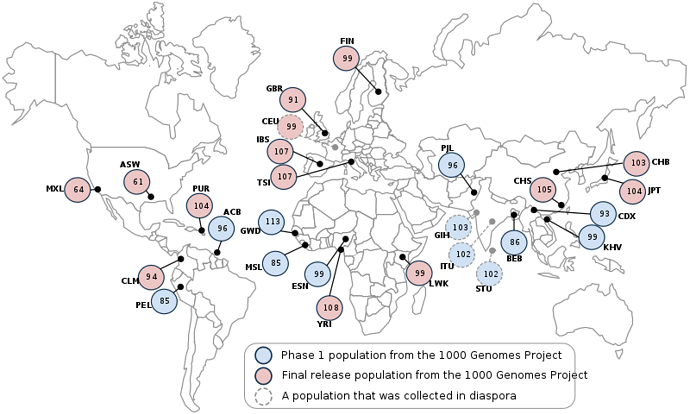

What if there were a prize, like the Hutter Prize for Lossless Compression of Human Knowledge, replacing the Wikpedia corpus with a database of human genomes?

The idea would be to approximate the Algorithmic Information of humanity’s population genetics.

This turns the science of human history into a market where money talks and bullshit walks.

People make all kinds of inferences about history (and prehistory) from present data sources – even billions of years in the past (e.g. cosmology). When doing so, they are inferring historic processes. Algorithms model processes so as to “fit” the observed data. Algorithmic Information Theory tells us that if computable processes are generating our observations, we should be able to simulate those processes with algorithms and that choosing the smallest algorithm (measured in bits of instructions) that exactly simulates our prior observations (including errors as literal constants) is the best anyone can do in deciding which model will yield the most accurate predictions.

So, if we start with any dataset and get people competing to compress it without loss, we have turned the scientific domain of that dataset into a market! Forget about “prediction markets” like Ideosphere or Metaculus – this is the way forward in the age of Moore’s Law and Big Data.

Now consider what happens with a dataset of human genomes. What are the historic processes that generated those genomes? Isn’t that an interesting question? Wouldn’t you prefer it if you had some objective – even automatic criterion for judging which model of history was the best one given a standard and unbiased source of data? Note, I’m not here talking about “machine learning” or “artificial intelligence”. I’m just talking about a bullshit filter that works the way a high trust society’s market works to filter out bullshit with minimum transaction cost, and lets competent human actors flourish.

Why start with a database of human genomes? The main reason is to avoid rhetorical arguments over “bias in the data”. The point is to minimize the “argument surface” because that is how theocrats attack scientists: By increasing the “argument surface” and thereby admitting more subjective criteria to any decision.

And we have to be a careful here but not just for the technical or theoretic reasons many might like to throw in the way of this to increase the argument surface. We have to be careful precisely because so many people might like to prevent the emergence of a genuine revolution that advances the natural sciences – just as there was religious objection to early advances in the philosophy of natural science. And, like the old theocracies, these people are not only powerful they are increasingly aware that they are intellectually bankrupt abusers of their power. They’re so scared that they are doing everything they can to hold back the tsunami of data and computation from meeting up with Algorithmic Information Theory to produce an objective judging criterion that can create a genuine scientific market.

They are already restricting access to human genome data with contracts binding people to not study socially relevant differences between races despite the fact that Federal Law uses “race” as a legal category in such questionable social policies as “affirmative action”.

Let me give you some background as to why I think we might be able to blast a hole in the city gate of this theocratic stronghold:

In 2005, about the same time I suggested to Marcus Hutter the idea for The Hutter Prize for Lossless Compression of Human Hnowledge, I wrote a little essay titled “Removing Lewontin’s Fallacy From Hamilton’s Rule”. The idea is pretty simple:

W. D. Hamilton came up with the basis of “kin selection” theory: “Hamilton’s inequality” which basically just says that if a gene produces behaviors that help one’s relatives have enough children then that gene is likely to spread even if one has no children*. Lewontin then took that word “gene” and used it to convince nubile Boomer chicks with fat trust funds attending Harvard that “race is a social construct” because “there is more genetic variation within than between races”. We’ve all heard that one because he then teamed up with his red diaper baby colleague, Jay Gould, to popularize their bullshit. They then went on the offensive to attack E. O. Wilson’s sociobiology as though it were a political rather than scientific controversy. I’m sure they got lots of Boomer coed nookie out of this at the height of the sexual revolution. But, that’s no big deal. The big deal is they held back the social sciences thence social policy by many decades. Moreover, this may ultimately kill tens of not hundreds of millions as the proximate cause of a rhyme with The Thirty Years War for freedom from their theocratic bullshit.

Decades later A. W. F. Edwards published “Human genetic diversity: Lewontin’s Fallacy” wherein he pointed out that the word gene is inadequate to talk about race as a biological construct. One must talk about “genetic correlation structures”. If we then turn around and talk about replacing the word gene with genetic correlation structure in Hamilton’s theory of kin selection, we have disambiguated Hamilton’s theory of kin selection and thereby pried some of Lewontin’s and Gould’s cold cadaverous fingers from the throat of Western Civilization – hopefully before their theocracy kills it entirely.

The only way one can minimize the size of an algorithm that outputs a human genome dataset is by identifying the genetic correlation structures that most parsimoniously fit the historic processes that created (and destroyed) them.

But what about access to that genomic data locked up behind the city-gate of the theocracy’s stronghold?

One of the beautiful things about the Algorithmic Information Criterion for model selection is that one need not have direct access to the data in order for one’s model to win! All one needs is a model that can take whatever is in the hidden dataset and turn it into a program that is smaller than the program others turn it into. One might call this “lossless compression” but really that is misleading because it is domain specific lossless compression – not zip or bzip, etc. In other words, you have a better way of simulating the history that generated the set of human genomes. You have a better model of historic processes that can, without knowing the details of the dataset, better compress those genomes sight-unseen.

Submit the compression program and judge solely on the size of the executable archive output by the compressor – all judging done automatically!

Now, I’m not necessarily saying that Harvard or any theocratic stronghold is going to permit a fair scientific competition to occur pertaining to human society. Well, they will over their dead bodies, with us trying to pry their cold dead fingers from their data, endowment and coeds. But if any substantial set of human genome data is made available to this objective market dynamic, even if data is held behind a veil of secrecy, it could blow a hole in the theocracy’s city-gate.

*I won’t go into the controversy involving the late E.O. Wilson here regarding the evolution of sterile workers in eusocial species except to say that everyone is talking past each other because they can’t face the awful truth of the human condition presenting itself in the present descent into a rhyme with The Thirty Years War.