Glenn Reynolds, law professor and Instapundit, has posted an essay, “AI and the Screwfly Succession”, on his Substack site (2023-05-04). Recently there has been a crescendo of discussion of the risks of developing and deploying artificial intelligence (AI). This includes a variety of scenarios in which AI, developed with the best of intentions by competent people with no malice in their hearts, might inadvertently cause the extinction of the human race and all life on Earth. It might then spread throughout the galaxy and beyond, extinguishing biological life and/or inhibiting its emergence anywhere the AI reaches. Many consider this to be a bad outcome.

But what if the human adventure ends, not with a bang, but with a whoopie? That is the scenario of Reynolds’ speculation.

The other day, a friend was talking about AI, and about sexbots, and opining that neither was really ready for primetime. My response was that this is true, but that the AI is getting smarter, and the sexbots sexier, while human beings are basically staying the same. We don’t really know what the upper limits for either smarts or sexiness are for machines, but we have a pretty good idea what the limits are for humans, because we’re more or less already there.

How can sexy sexbots be an existential threat? Well, we’ve devastated populations of insects like screwflies, fruit flies, and (somewhat less successfully) mosquitoes by saturating them with sexy but sterile specimens to breed with. (This is called the Sterile Insect Technique.) The result is a sharp drop in reproduction, and population.
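Why flooding a population with sterile mates works can be illustrated with a simplified toy model. This sketch is not from Reynolds' essay. It assumes random mating, a 1:1 sex ratio in the wild population, sterile males that compete equally with wild ones, a fixed number of sterile males released every generation, and a hypothetical per-generation growth factor. Under those assumptions only the fraction wild / (wild + sterile) of matings is fertile. The function name `sit_generations` and all of the numbers used are hypothetical.

```python
def sit_generations(wild, sterile, growth_rate, generations):
    """Toy model of a wild insect population under repeated sterile releases.

    Illustrative assumptions (not from the essay): `wild` is the number of
    wild insects of each sex (1:1 sex ratio), `sterile` is the number of
    sterile males released every generation, mating is random, sterile males
    compete equally with wild males, and `growth_rate` is the per-generation
    multiplication of the wild population when every mating is fertile.
    """
    history = [float(wild)]
    for _ in range(generations):
        total = wild + sterile
        # Fraction of matings with a fertile (wild) male.
        fertile_fraction = wild / total if total > 0 else 0.0
        wild = growth_rate * wild * fertile_fraction
        history.append(wild)
    return history


if __name__ == "__main__":
    # Hypothetical numbers: 1 million wild insects of each sex, 9 million
    # sterile males released per generation, fivefold growth otherwise.
    for gen, size in enumerate(sit_generations(1_000_000, 9_000_000, 5, 5)):
        print(f"generation {gen}: {size:,.1f}")
```

With these made-up numbers the wild population halves in the first generation and falls below one insect by the fifth. A constant release becomes an ever larger multiple of the shrinking wild population, so the decline accelerates rather than levelling off.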

⋮

Imagine sexbots – both male and female – that aren’t just copies of attractive humans, but much more attractive than natural humans. Machine learning could find just the right physical and behavioral characteristics to appeal to humans, and then tweak them for each individual person. Maybe they even release pheromones. Your personal sexbot would be tailor-made, or self-tailored, to appeal to you. It might even be programmed to fall in love with its human.

⋮

Distribute enough of these bots in the population, and reproductive rates would plummet. Would people stop breeding entirely? Almost certainly not, but a 90% reduction would pretty much end humanity as we know it in a couple of generations.
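The “couple of generations” follows from compounding. A minimal sketch, assuming the population had been at replacement fertility, that the 90% reduction applies equally to every generation, and ignoring migration and overlapping generations: each generation is then one minus the reduction, here 10%, the size of the one before. The function name `generation_sizes` and the 8 billion starting figure are illustrative assumptions.

```python
def generation_sizes(initial, reduction, generations):
    """Size of each successive generation when births fall by `reduction`
    (0.9 means 90%) relative to replacement level.

    Illustrative assumptions: the population was at replacement beforehand,
    the reduction is constant, and migration and overlapping generations
    are ignored.
    """
    factor = 1.0 - reduction  # size of each generation relative to the previous
    return [initial * factor ** g for g in range(generations + 1)]


if __name__ == "__main__":
    # Hypothetical starting population of 8 billion, 90% fewer births.
    for gen, size in enumerate(generation_sizes(8_000_000_000, 0.9, 2)):
        print(f"generation {gen}: {size:,.0f}")
```

After two generations about 1% (0.1 × 0.1) of the starting population remains.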

Are humans so simply wired that not-all-that-intelligent machines can push their buttons and cause them to forsake their own species for a super-optimised replacement? Well, it works with junk food.

Futurist James Miller calls porn the “junk food of sex.” That is, just as junk food is made more or less addictive – or at least highly appealing – by overstimulating people’s evolutionarily programmed desire for sugar, salt, and fat, so porn too appeals to people by stimulating evolutionarily created receptors/proclivities to a much greater degree than real life does. People had good solid reasons for craving sweet berries, salt, and fat in the caveman days, but those were all hard enough to come by that we weren’t strongly equipped with curbs on those cravings. Likewise with sex.

In the past, I responded to fears that porn would lead to more sexual violence and unwise teen sex by pointing out that in practice the opposite seems to be the case: As porn consumption skyrocketed with the introduction of the Internet, rape and teen sex actually underwent a steep decline.

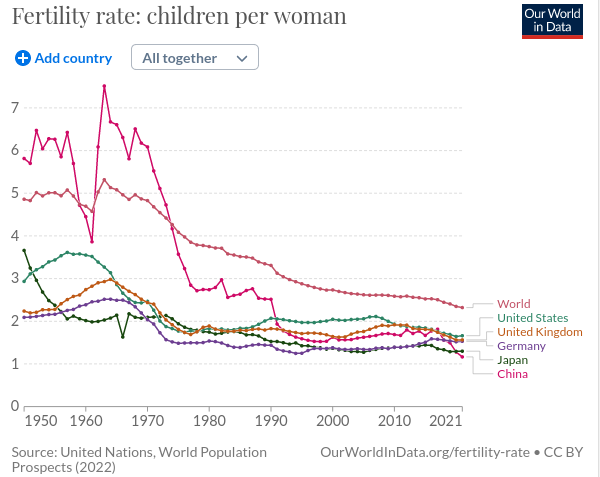

Here is the fertility rate for the world and a variety of countries plotted from 1950 through 2021. Note in particular how developed countries whose fertility had been close to stable over a long period of time began to drift downward in unison around the start of the smartphone era (~2008).

Maybe if the machines take over, it will be because their progenitors died out due to being loved more by the machines than their own species.

The machines get better every year, while humans stay more or less the same. That will raise all sorts of issues, in all sorts of settings.