Most physicists are taught that all of the phenomena of electromagnetism were explained by the theory of quantum electrodynamics, developed in the late 1940s, with some of its creators awarded the Nobel Prize in Physics for 1965. But even the creators of the theory had doubts about its foundations and consistency: Richard Feynman called the technique of renormalisation, used to eliminate infinities that pop up in its calculations, a “shell game” and “hocus pocus”.

Indeed, even some topics in classical electrodynamics, grounded in Maxwell’s equations, which appeared in the books from which the founders of quantum electrodynamics learned their physics, have dropped out of modern texts. Electrical engineers, however, still have to know about the strange behaviour of electromagnetic fields in the “near field” domain between an oscillating charge and free-space wave propagation.

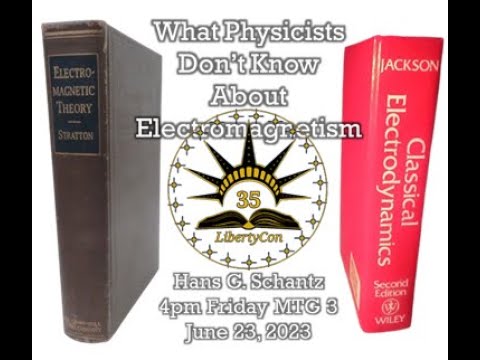

Hans Schantz, a physicist whose career has been in designing practical electronic devices, many of which operate in the near field regime, and author of The Art and Science of Ultrawideband Antennas, pulls back the curtain on the development of modern electromagnetic theory and reveals some of the dark corners of which most physicists are unaware.

Dr Schantz is also a masterful science fiction author, having written The Hidden Truth trilogy, and a shrewd observer of the madness of the contemporary scene, exemplified by his delightful present-day re-telling of the Scopes Monkey Trial in The Wise of Heart.

An introduction to the underside of quantum electrodynamics recommended by Dr Schantz is Oliver Consa’s 2021 paper, “Something is wrong in the state of QED” (full text at link). Here is the abstract:

Quantum electrodynamics (QED) is considered the most accurate theory in the history of science. However, this precision is based on a single experimental value: the anomalous magnetic moment of the electron (g-factor). An examination of the history of QED reveals that this value was obtained in a very suspicious way. These suspicions include the case of Karplus & Kroll, who admitted to having lied in their presentation of the most relevant calculation in the history of QED. As we will demonstrate in this paper, the Karplus & Kroll affair was not an isolated case, but one in a long series of errors, suspicious coincidences, mathematical inconsistencies and renormalized infinities swept under the rug.

The ongoing saga (some would say soap opera) of the conflict between the theoretical prediction of the anomalous magnetic moment of the muon and the experimental measurement of this quantity illustrates that this foundation of physics may be a tad rickety. An amazing-to-behold “front of book” article published in Nature on 2023-08-10, “Dreams of new physics fade with latest muon magnetism result”, contains the following remarkable passage:

Judging from the standard, data-driven predictions alone, the latest measurement of g – 2 would seem to deviate from theory (as updated most recently in 2020) by around two parts in a million. And the shrunken uncertainty would for the first time clear the ‘five sigma’ bar that particle physicists usually require to claim a discovery.

But starting with results by Fodor and his colleagues in 2021 an alternative technique for calculating g – 2 has emerged, which does not require collider data and instead uses computer simulations. When the Muon g – 2 measurement is compared against this new prediction, the discrepancy essentially disappears. Several other teams have followed up with their own computer simulations, which have tentatively converged with those by Fodor’s group. Fermilab scientist Ruth Van De Water, a leading member of one of these groups, says she expects any lingering disagreements to be sorted out “in the next year or two”.

Let that sink in. In essence, they’re saying, “If we throw out the results from experiments conducted over decades on two continents at a cost of tens of millions of dollars and replace them with computer simulations instead, the conflict between theory and experiment ‘essentially disappears’.” Move along, people, nothing to see here.
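For readers who want to see where “two parts in a million” and “five sigma” in the Nature passage come from, here is a minimal sketch of the arithmetic. The numerical inputs are not given in the article; they are assumed here to be the 2023 experimental world average and the 2020 data-driven Standard Model prediction for the muon anomaly, in units of 10⁻¹¹, and the two uncertainties are assumed to be independent and combined in quadrature. The function name `discrepancy` is purely illustrative.

```python
import math

# Assumed inputs, in units of 1e-11 (these figures are NOT quoted in the article):
A_MU_EXP, SIGMA_EXP = 116_592_059, 22  # 2023 experimental world average
A_MU_SM, SIGMA_SM = 116_591_810, 43    # 2020 data-driven theory prediction


def discrepancy(exp, sigma_exp, theory, sigma_theory):
    """Return (difference, combined uncertainty, significance in sigma).

    Assumes the experimental and theoretical errors are independent,
    so they add in quadrature.
    """
    diff = exp - theory
    sigma = math.hypot(sigma_exp, sigma_theory)
    return diff, sigma, diff / sigma


diff, sigma, n_sigma = discrepancy(A_MU_EXP, SIGMA_EXP, A_MU_SM, SIGMA_SM)
relative = diff / A_MU_EXP  # fractional deviation of experiment from theory

print(f"difference   : {diff} x 1e-11")
print(f"uncertainty  : {sigma:.1f} x 1e-11")
print(f"significance : {n_sigma:.1f} sigma")
print(f"relative     : {relative * 1e6:.1f} parts per million")
```

Under these assumptions the deviation comes out at roughly two parts in a million and a little over five sigma, consistent with the quoted passage. Substituting a lattice-based prediction for the data-driven one changes only the `theory` and `sigma_theory` arguments, which is the entire substance of the dispute.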

Scientific American has a less breezy take in “Muon Mystery Deepens with Latest Measurements”, published the same day as the Nature screed:

But if it has solved one discrepancy—between theory and experiment—[the Budapest–Marseille–Wuppertal calculation] may have created another. There is now a sizable difference between lattice QCD predictions and the data-driven ones derived from empirical experiments.

“A lot of people would look at that and say, ‘Okay, that weakens the new physics case.’ I don’t see that at all,” [physicist Alex] Keshavarzi says. He believes the discrepancy within the theory result—between the lattice and data-driven methods—could be linked to new physics, such as an as-yet-undetected low-mass particle. Other researchers are less gung ho about such heady prospects. Christoph Lehner, a theorist at the University of Regensburg in Germany and a co-chair of the Muon g−2 Theory Initiative, says it is much more likely that the theoretical discrepancy is caused by problems in the data-driven method.