Tom’s Hardware reports:

Tesla is set to launch its highly-anticipated supercomputer on Monday, according to @SawyerMerritt. The machine will be used for various artificial intelligence (AI) applications, but the cluster is so powerful that it could also be used for demanding high-performance computing (HPC) workloads. In fact, the Nvidia H100-based supercomputer will be one of the most powerful machines in the world.

Tesla’s new cluster will employ 10,000 Nvidia H100 compute GPUs, which will offer a peak performance of 340 FP64 PFLOPS for technical computing and 39.58 INT8 ExaFLOPS for AI applications. In fact, Tesla’s 340 FP64 PFLOPS is higher than 304 FP64 PFLOPS offered by Leonardo, the world’s fourth highest-performing supercomputer.

⋮

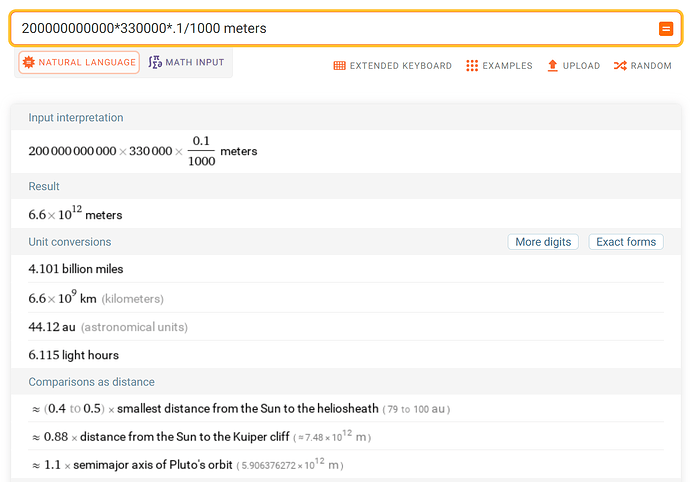

“Due to real-world video training, we may have the largest training datasets in the world, hot tier cache capacity beyond 200PB — orders of magnitudes more than LLMs,” explained Tim Zaman, AI Infra & AI Platform Engineering Manager at Tesla.

While the new H100-based cluster is set to dramatically improve Tesla’s training speed, Nvidia is struggling to meet demand for these GPUs. As a result, Tesla is investing over $1 billion to develop its own supercomputer, Dojo, which is built on custom-designed, highly optimized system-on-chips.

Elon Musk recently revealed that Tesla plans to spend over $2 billion on AI training in 2023 and another $2 billion in 2024 specifically on computing for FSD training. This underscores Tesla’s commitment to overcoming computational bottlenecks and should provide substantial advantages over its rivals.

Here is information from the manufacturer on the Nvidia H100 Tensor Core Graphics Processing Unit (GPU).

Here is the detailed Nvidia “H100 White Paper” [PDF, 71 pages] on the GPU architecture. There are 10,000 of these chips in Tesla’s new cluster.
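The cluster-wide figures quoted above can be reproduced by multiplying per-GPU peak numbers by the GPU count. The sketch below is only a sanity check. The per-GPU values (34 TFLOPS for FP64, and 3,958 tera-operations per second for INT8 on the Tensor Cores with sparsity) are assumptions taken from Nvidia's published H100 SXM specifications, not from the article, and the names `cluster_peak`, `H100_FP64_TFLOPS`, and `H100_INT8_TOPS` are made up for illustration.

```python
# Per-GPU peak figures are ASSUMPTIONS based on Nvidia's published H100 SXM
# specifications; they do not appear in the article quoted above.
H100_FP64_TFLOPS = 34      # FP64 peak, in tera-FLOPS per GPU
H100_INT8_TOPS = 3958      # INT8 Tensor Core peak with sparsity, tera-ops per GPU
GPU_COUNT = 10_000         # GPU count stated in the article


def cluster_peak(per_gpu_tera, gpus=GPU_COUNT):
    """Return the aggregate theoretical peak, in units of 10^12 ops per second."""
    return per_gpu_tera * gpus


# Scale from tera (10^12) to peta (10^15) and exa (10^18).
fp64_pflops = cluster_peak(H100_FP64_TFLOPS) / 1_000
int8_exaops = cluster_peak(H100_INT8_TOPS) / 1_000_000

print(f"FP64 peak: {fp64_pflops:g} PFLOPS")
print(f"INT8 peak: {int8_exaops:g} exa-operations per second")
```

Under these assumptions the results match the 340 PFLOPS and 39.58 "ExaFLOPS" figures in the article. Both are theoretical peaks, so sustained performance on real workloads would be lower.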

Note that this Nvidia cluster is completely independent of Tesla’s Dojo supercomputer, which was developed entirely in-house and is expected to come online incrementally in the second half of 2023 as additional cabinets are completed and added to the system. (See “Inside Tesla’s Dojo AI Training Supercomputer”, posted here on 2022-09-21.) Elon Musk has remarked that had Nvidia been able to deliver as many GPUs as Tesla requires, development of Dojo might not have been necessary.

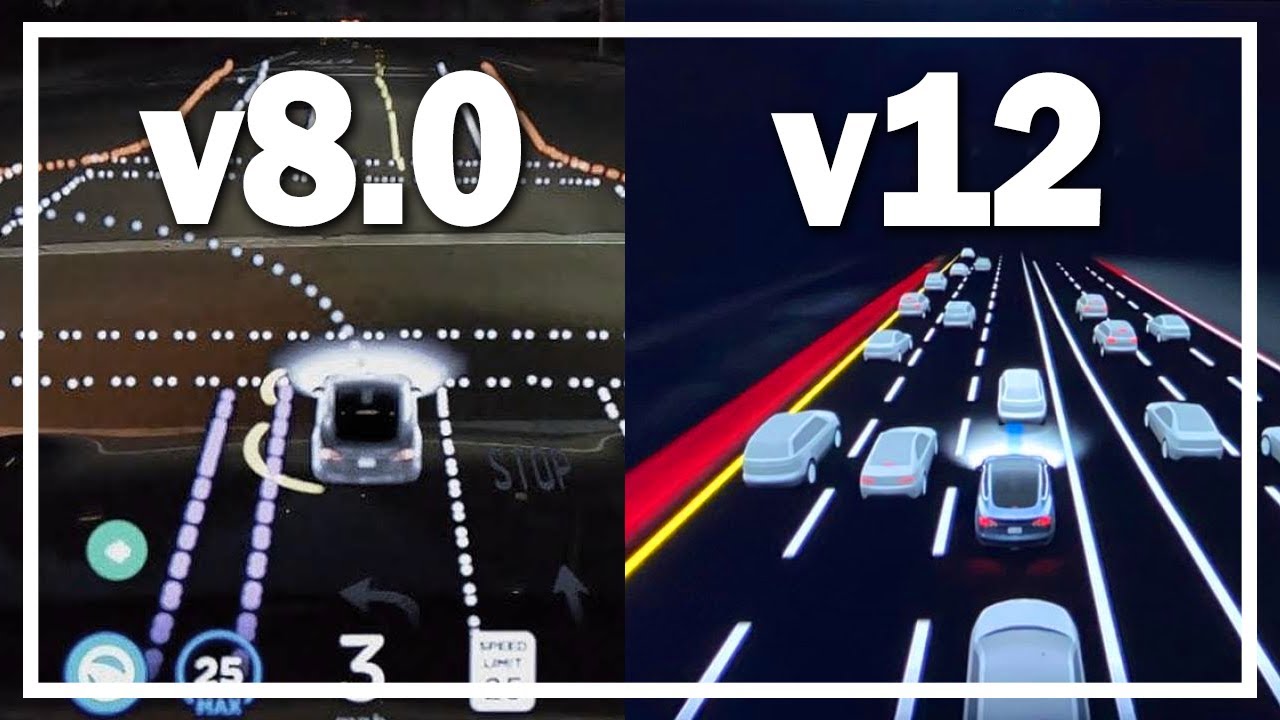

All of this computing and artificial intelligence training power appears to be dedicated to Tesla’s next generation of autonomous vehicle development, as previewed in “Elon Musk’s Sneak Peek at Tesla Full Self-Driving V12, ‘Nothing But Nets’ ”, posted here on 2023-08-28. Tesla appears to be going “all in” on the vision-only, trained-by-video approach to self-driving. In addition to massive computational resources for AI training, the Nvidia H100 and Dojo both have integrated video decoders, which help keep the training pipeline filled with video frames.

Here’s a key to the jargon used in the articles quoted above.

- FLOPS — Floating-point operations per second

- PFLOPS — Quadrillion ($10^{15}$) FLOPS

- ExaFLOPS — Quintillion ($10^{18}$) FLOPS (1000 PFLOPS)

- FP64 — IEEE 754 64-bit “double precision” floating point

- INT8 — 8-bit integer (signed or unsigned) used for quantised weights and activations in neural networks. INT8 throughput counts integer operations, so “INT8 ExaFLOPS” above means $10^{18}$ operations per second rather than floating-point operations

- PB — Petabyte: Quadrillion ($10^{15}$) bytes
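To make the prefixes in this key concrete, here is a small Python sketch that converts a quantity from one decimal prefix to another. The `SI_EXPONENT` table and the `convert` helper are hypothetical names chosen for illustration.

```python
# Decimal (SI) prefixes from the key above, as powers of ten.
SI_EXPONENT = {"tera": 12, "peta": 15, "exa": 18}


def convert(value, from_prefix, to_prefix):
    """Convert a quantity between SI prefixes, for example exa to peta."""
    shift = SI_EXPONENT[from_prefix] - SI_EXPONENT[to_prefix]
    # A positive shift moves to a smaller unit, so the number grows.
    return value * 10 ** shift


print(convert(39.58, "exa", "peta"))   # the INT8 figure expressed in peta-units
print(convert(200, "peta", "tera"))    # the 200 PB cache expressed in terabytes
```

For example, `convert(200, "peta", "tera")` expresses the 200 PB of hot tier cache quoted above as 200,000 TB.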