Max Tegmark is a professor of physics at MIT and co-founder and president of the Future of Life Institute. He is author of the 2017 book Life 3.0, which discussed the advent of artificial general intelligence (AGI), its development into super-human intelligence, and the consequences for humans in a variety of scenarios. Tegmark is an instigator of the open letter discussed here in the post “Pause Giant AI Experiments”, advocating a six-month moratorium on the training of artificial intelligence systems more powerful than GPT-4. In this two hour and 48 minute conversation with Lex Fridman, he discusses artificial intelligence, how close current systems are to artificial general intelligence, the risks posed by such systems, and the “temptations of Moloch” to developers of such systems and other risky technologies such as social networks.

It’s Afraid

A meme with two interpretations, but let me ask you this:

What more closely resembles the evolution of eusociality’s parasitic castration of young to turn them into sterile workers but is so out of alignment with human values that it can’t even be bothered to go all Brave New World on humanity’s ass and churn out replacement parts?

LLMs or The Global Economy?

The nice thing about Brain Bugs like Tegmark is they buy their own material about “parameter count” being what really “counts” in increasing intelligence. So their moratorium probably won’t stop the development of macrosocial models of causality that finger them as the extraordinarily stupid, self-serving zookeepers they are, and thence get rid of the lot of them.

On the other hand, the moratorium is almost certain to turn into a government agency that, in service to Maoist intelligence, will wreak havoc on the West’s ability to advance anything remotely resembling genuine intelligence as to social causality. But since the West’s intelligence agencies have been, for all intents and purposes, geared toward destroying the very concept of “intelligence” ever since their establishment during and after WW II, what else is new?

At least we might have a window of opportunity before it castrates itself into oblivion.

PS: Scott Adams is confident that an objective, truth-speaking AI (i.e., an artificial scientist subject only to finding the best model of the data at hand) will not be permitted because of the human intelligence problem, which wants to persuade us to align with the human creator’s values. My response:

“Since politics is Downstream from persuasion what is to keep someone that desires an objective truth speaking AI from developing a preliminary AI that is programmed to persuade people to want the truth? Didn’t think about that one didja Scott? Nor is this unlikely. Think about the technical skill required to implement something like that.”

Interestingly, in two sections of the discussion, at 00:33:21 and then again at 02:18:30, Tegmark draws the distinction between unidirectional transformer models and recurrent neural networks and suggests that both what humans call reasoning and consciousness require recurrence. But given how developers can wrap applications around transformer models, as I previously discussed in relation to Auto-GPT, Baby AGI, and Generative Agents, it may be that these models can achieve recurrence through external storage and retrieval and feedback mechanisms that communicate with them in natural language.
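To make the idea concrete, here is a minimal sketch of recurrence supplied from outside a stateless model. Everything in it is an assumption for illustration: `toy_model` is a hypothetical stand-in for a feed-forward model (it is not a real language model or any library's API), and `run_with_memory` is a made-up name for the wrapper loop. The only point is that state carried across steps lives in the external text, not in the model.

```python
from typing import Callable, List


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a stateless model: it sees only the prompt.

    It reads the last remembered number from the prompt text and replies
    with the next one. It keeps no state between calls.
    """
    notes = [line for line in prompt.splitlines() if line.startswith("note: ")]
    last = int(notes[-1][len("note: "):]) if notes else 0
    return str(last + 1)


def run_with_memory(model: Callable[[str], str], task: str, steps: int) -> List[str]:
    """Feed each output back in as text, so the state lives outside the model."""
    memory: List[str] = []  # external storage: plain-text notes
    for _ in range(steps):
        recalled = memory[-3:]  # retrieval: here, just the most recent notes
        prompt = task + "\n" + "\n".join(f"note: {m}" for m in recalled)
        reply = model(prompt)   # a single stateless call
        memory.append(reply)    # feedback: the output becomes future input
    return memory


history = run_with_memory(toy_model, "Count upward.", 5)
print(history)  # ['1', '2', '3', '4', '5']
```

The model function never remembers anything between calls, yet the loop as a whole counts, because each reply is written to storage and read back into the next prompt.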

That’s what happens when the limitations of transformers’ context-free grammar level of the Chomsky Hierarchy bump into the demand for marketable chatbots:

Engineering kludges take over to fill the market demand until such time as someone who understands not only the science but also its relevance to the market’s demand for technology slips past the wokester HR departments of monopoly money information tech companies, occupied by those entitled to those meta-positions by their phony-baloney Ivy League humanities degrees.

What I’m seeing going on at DeepMind, thence Alphabet, hence Google Brain, is a conflict between an HR-department cultivated culture (Google Brain) and an insurgent organization founded by Schmidhuber->Hutter->Legg (DeepMind), with the HR wokesters’ pet project dragging Alphabet corp down because its political animals are more specialized at rent-seeking. This is the reason Google Brain titled the seminal transformer paper “Attention Is All You Need” to “dethrone” RNNs:

Schmidhuber’s students developed LSTM – the premier RNN tech – and indirectly (via Hutter) founded DeepMind. Google Brain wanted to fend off the insurgent DeepMind from revolutionizing search in Google with a more powerful model – and they had the wokester HR culture on their side in rent-seeking the Alphabet stockholders into losses of tens of billions. To paraphrase Qui-Gon Jinn’s optimistic aphorism: “There’s always a hyper parasite.”

Sundar Pichai might want to call a truce between DeepMind and Google Brain. But with the aforelinked paper on the Chomsky Hierarchy having just come out to place transformers in perspective (possibly in anticipation of Google going into panic mode), after all these years with Hutter as senior scientist funding the Hutter Prize out of his own pocket (and me donating a measly $100/month in BTC starting recently), I don’t think the wokester Ivy League purple hair culture at Google is going to be able to eat enough crow until Alphabet is down billions more in stockholder value.

Maybe Pichai will surprise me and show he’s got some balls.

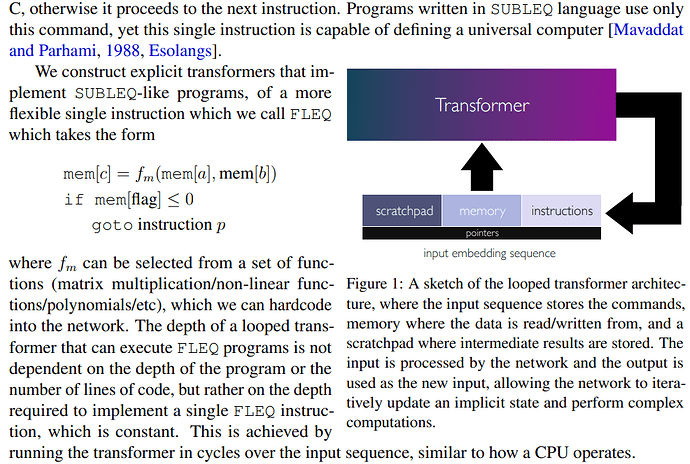

This paper used a “one instruction set computer” paradigm to universalize transformers!
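For readers who haven’t met the idea, a one instruction set computer gets general computation out of a single instruction applied in a loop. A minimal sketch follows, assuming SUBLEQ (“subtract and branch if less than or equal to zero”) as the single instruction. That choice is mine for illustration, as is the memory layout and the name `run_subleq`; nothing here is taken from the paper’s construction.

```python
from typing import List


def run_subleq(mem: List[int], max_steps: int = 10_000) -> List[int]:
    """Run a SUBLEQ program stored in `mem`; each instruction is (a, b, c).

    Semantics: mem[b] -= mem[a]; if the result is <= 0, jump to c,
    otherwise fall through to the next instruction. A negative jump
    target halts the machine.
    """
    mem = list(mem)  # work on a copy so the caller's program is untouched
    pc = 0
    for _ in range(max_steps):
        if pc < 0 or pc + 2 >= len(mem):
            break  # halted, or ran off the end of memory
        a, b, c = mem[pc], mem[pc + 1], mem[pc + 2]
        mem[b] -= mem[a]
        pc = c if mem[b] <= 0 else pc + 3
    return mem


# Illustrative program: add X into Y using a scratch cell Z.
# Cells 0-8 hold three instructions; cells 9, 10, 11 hold X, Y, Z.
program = [
    9, 11, 3,    # Z -= X   (Z becomes -X)
    11, 10, 6,   # Y -= Z   (Y becomes Y + X)
    11, 11, -1,  # Z -= Z   (Z becomes 0), then halt
    7, 5, 0,     # data: X = 7, Y = 5, Z = 0
]

result = run_subleq(program)
print(result[10])  # 12
```

The same fixed update rule runs at every step, and only the contents of memory change.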