Among the signatories is Elon Musk. Do any of these folks seriously think the CCP is going to “pause”, even if it were among the signatories?

Ilya Sutskever [OpenAI Chief Scientist]: One thing that I think some people will choose to do is to become part AI. In order to really expand their minds and understanding and to really be able to solve the hardest problems that society will face then.

Here are some of the signatories to the “Open Letter”:

- Elon Musk

- Steve Wozniak

- Andrew Yang

- Jaan Tallinn (co-founder, Skype)

- Evan Sharp (co-founder, Pinterest)

- Emad Mostaque (CEO, Stability AI)

- John J Hopfield (neural network pioneer)

- Max Tegmark (author of Life 3.0)

- Grady Booch (programming methodology guru, IBM Fellow)

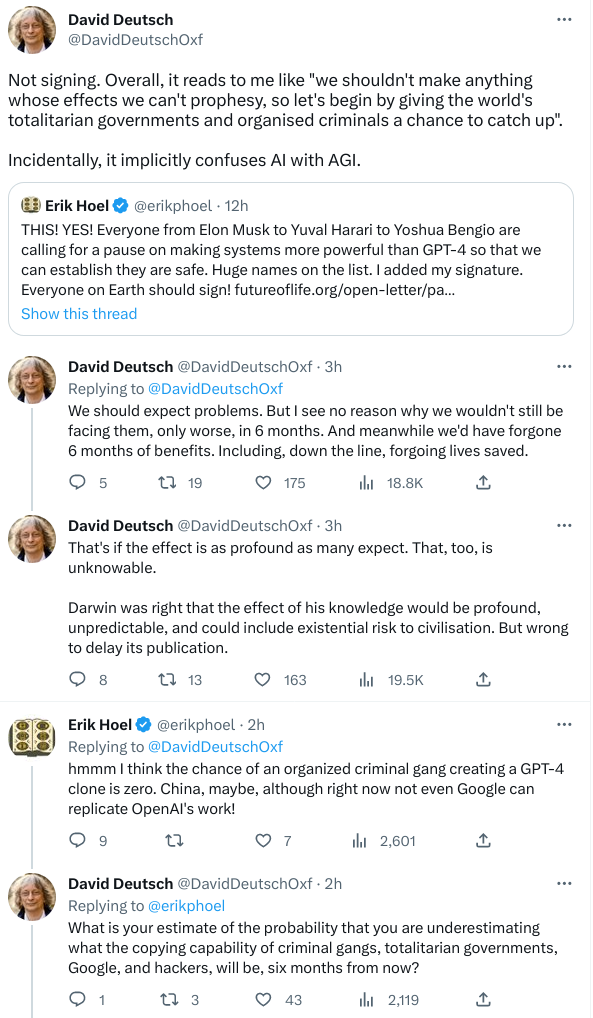

David Deutsch dissents.

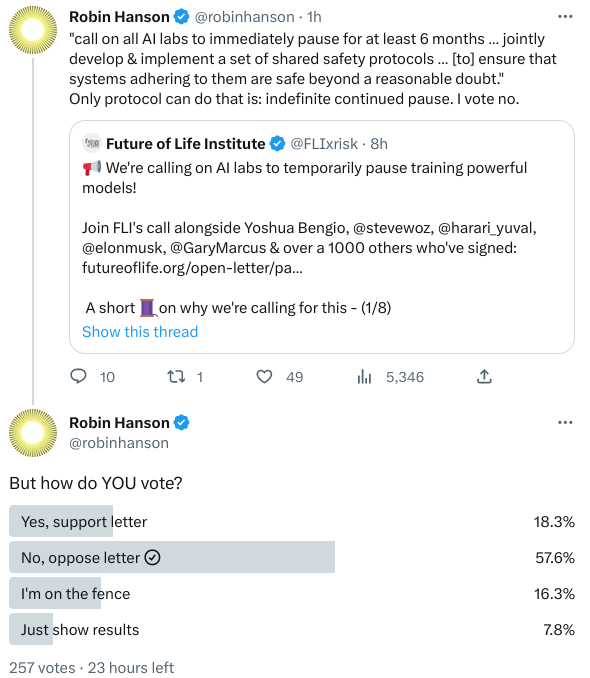

And so does Robin Hanson.

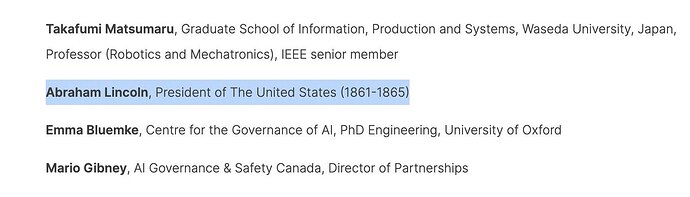

The signatory list for the open letter appears to be under a spam attack.

Am I right in assuming all the signatories are administrative types, not working scientists working on AI?

I signed the AI experiment pause letter because scientists ought to proceed ethically in all endeavors, and the singularity (if achieved) will change things forever.

Rogue scientists (and in the future, rogue AI?) will ignore it, but the letter serves as a goalpost: such a scientist cannot now plead ignorance of the dangers of AI tech.

Scientists, along with True Statesmen, have kept us at “2 minutes to midnight”, and recently “90 seconds to midnight”, regarding nuclear annihilation as of this writing. Perhaps AI scientists can use a space-time or quantum computing analogy – 2 cubic-meter-minutes to midnight or 2 qubits to midnight ???

Eliezer Yudkowsky, co-founder of the Machine Intelligence Research Institute, argues in an opinion piece published in Time (Is that still a thing? Who knew?), “Pausing AI Developments Isn’t Enough. We Need to Shut it All Down”.

An open letter published today calls for “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

This 6-month moratorium would be better than no moratorium. I have respect for everyone who stepped up and signed it. It’s an improvement on the margin.

I refrained from signing because I think the letter is understating the seriousness of the situation and asking for too little to solve it.

⋮

Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die. Not as in “maybe possibly some remote chance,” but as in “that is the obvious thing that would happen.” It’s not that you can’t, in principle, survive creating something much smarter than you; it’s that it would require precision and preparation and new scientific insights, and probably not having AI systems composed of giant inscrutable arrays of fractional numbers.

Without that precision and preparation, the most likely outcome is AI that does not do what we want, and does not care for us nor for sentient life in general. That kind of caring is something that could in principle be imbued into an AI but we are not ready and do not currently know how.

Absent that caring, we get “the AI does not love you, nor does it hate you, and you are made of atoms it can use for something else.”

⋮

To visualize a hostile superhuman AI, don’t imagine a lifeless book-smart thinker dwelling inside the internet and sending ill-intentioned emails. Visualize an entire alien civilization, thinking at millions of times human speeds, initially confined to computers—in a world of creatures that are, from its perspective, very stupid and very slow. A sufficiently intelligent AI won’t stay confined to computers for long. In today’s world you can email DNA strings to laboratories that will produce proteins on demand, allowing an AI initially confined to the internet to build artificial life forms or bootstrap straight to postbiological molecular manufacturing.

If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter.

There’s no proposed plan for how we could do any such thing and survive. OpenAI’s openly declared intention is to make some future AI do our AI alignment homework. Just hearing that this is the plan ought to be enough to get any sensible person to panic. The other leading AI lab, DeepMind, has no plan at all.

⋮

Here’s what would actually need to be done:

The moratorium on new large training runs needs to be indefinite and worldwide. There can be no exceptions, including for governments or militaries. If the policy starts with the U.S., then China needs to see that the U.S. is not seeking an advantage but rather trying to prevent a horrifically dangerous technology which can have no true owner and which will kill everyone in the U.S. and in China and on Earth. If I had infinite freedom to write laws, I might carve out a single exception for AIs being trained solely to solve problems in biology and biotechnology, not trained on text from the internet, and not to the level where they start talking or planning; but if that was remotely complicating the issue I would immediately jettison that proposal and say to just shut it all down.

Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold. If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.

⋮

We are not ready. We are not on track to be significantly readier in the foreseeable future. If we go ahead on this everyone will die, including children who did not choose this and did not do anything wrong.

Shut it down.

If only the virologists engineering the RNA in some of the viruses to make them more contagious had been this open about the potential dangers. I fear these folks more than AI.

Many of the people calling for a moratorium or restriction on artificial intelligence research cite as an example the Asilomar Conference on Recombinant DNA held in February 1975, where 140 attendees (mostly biologists, but also physicians and lawyers) met to draw up guidelines to avoid risks from research in recombinant DNA (“gene splicing”). The conference resulted in recommendations for containment of different types of experiments, limitations on using organisms that could survive outside the laboratory, and outright prohibition of certain experiments, such as those involving pathogenic organisms.

The guidelines were widely adopted and taken into account by research committees and funding agencies, and are considered to have played a part in our avoiding existential risk from ill-conceived gene splicing experiments such as, for example, modifying a bat virus to be infectious to humans.

Obligatory book recommendation

The Two Faces of Tomorrow, James P. Hogan, First published May 12, 1979

Anticipates much of the current AI dilemma. The technical approach in the novel is dated (he consulted with Marvin Minsky) but the overall outline is prophetic.

Archive link to the Time article in case it is behind a paywall.

Also, Zvi has his usual intelligent analysis of the situation.

Not much original opinion expressed so far by Scanalyst members. My own thinking is guided by what I see as the operant technological imperative: whatever can be done, must be done. John cited the Asilomar Principles relating to genetic engineering. Though I cannot find a citation, I recall reports of a Chinese scientist promptly proceeding with a proscribed experiment. Has it gone down the memory hole? There seems to be little investigative reporting of whether compliance is assiduous and ongoing. The MSM, after all, likes ‘progress’.

Both genetic engineering and AGI clearly pose some risk of radically altering human life for the worse, if not eliminating it. There are grounds for pessimism, when it comes to the likelihood of restraint. We already live in societies whose ethos is ‘anything goes’. Not long ago, the medical profession, for example, was firmly in the ethical camp of “first, do no harm”. This was, until recently, a bedrock of the profession. It was a reflection of erring on the side of basic humility in the profession’s ability to predict possible harms of various treatments. It is obvious from chemical castration and physical mutilation of children nowadays (consent was another bedrock and children lack the capacity to consent), that these principles have suddenly ceased to exist in the medical profession. We witness a headlong rush to perform irreversible ‘therapies’ based, not on sound or long-term scientific studies, but solely on political momentum in service of ultimately proving that the state and its minions have a monopoly on truth; if they can successfully tell us that biological sex is meaningless, all things are then possible. All meaning is definable by the state.

I don’t see much difference in decision making between medicine and the tech development community, especially when someone, somewhere, is capable of doing as (s)he damn well pleases in the garage or similar humble shelter. Perhaps it is even worse, since physicians’ formal educations have included significant exposure to ethical principles. Many of the most talented and adept computing experts, on the other hand, seem to have been born with this incredible, but focused, talent and had no such wider educational exposure to considered ethical principles. Success reigns, and yes, there may be many foreseeable benefits of AGI. The risks, both foreseeable and unforeseeable, however, are potentially of such a magnitude (i.e., extinction) that invocation of the precautionary principle by way of a pause is, in my opinion, highly justified (genies and bottles come to mind).

This is why there is no practical possibility of preventing the development of artificial intelligence short of imposing a global tyrannical regime more severe than any in human history and then maintaining it forever. This kind of future figures in Karl Gallagher’s Torchship novels, in which the development of AI ushers in a golden age that comes to an abrupt end when the machines take over and wipe out humanity on several planets including Earth. The worlds that had not yet integrated sufficient AI for this to happen then completely ban any development of AI and impose the death penalty for any citizen who uses computing power beyond the most rudimentary level.

The fundamentals of how machine learning works are not difficult to learn (tomorrow, I will be writing about an online course with which anybody with basic proficiency in C++ programming can teach themselves the key techniques with nothing but a modest computer able to build C++ programs). Software toolkits such as PyTorch are available for free, and machines with graphics processing units (GPUs) suitable for fast machine learning training are already in the hands of millions of gamers. A super-high-end training configuration with 192 CPUs, 8 GPUs, 768 GB of main memory, and 192 GB of GPU memory can be rented on demand from Amazon Web Services for US$ 16.29 per hour, or US$ 9.77 per hour if you commit to one year’s rental in advance. The material that was used to train these models was largely “scraped” from the Internet, and anybody who wishes can do that (and besides, open source training data sets are now available to use as a starting point).
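To put the two hourly rates quoted above in perspective, here is a rough back-of-the-envelope sketch. Only the two prices come from the post; the function names are made up for illustration, and the code assumes (this is my assumption, not something stated above) that a one-year commitment is billed for every hour of the year whether or not the machine is used.

```python
# Hourly rates quoted in the post (US$). Everything else is an assumption
# made for illustration only.
ON_DEMAND_PER_HOUR = 16.29
RESERVED_PER_HOUR = 9.77    # with a one-year commitment
HOURS_PER_YEAR = 24 * 365   # 8760, ignoring leap years


def on_demand_cost(hours: float) -> float:
    """Cost of renting the machine only for the hours actually used."""
    return ON_DEMAND_PER_HOUR * hours


def reserved_year_cost() -> float:
    """Cost of a full year at the committed rate.

    Assumption: the commitment is paid for all 8760 hours, used or not.
    """
    return RESERVED_PER_HOUR * HOURS_PER_YEAR


def break_even_hours() -> float:
    """Hours of use per year above which the commitment is cheaper."""
    return reserved_year_cost() / ON_DEMAND_PER_HOUR


if __name__ == "__main__":
    print(f"One week on demand: ${on_demand_cost(24 * 7):,.2f}")
    print(f"One year reserved:  ${reserved_year_cost():,.2f}")
    share = break_even_hours() / HOURS_PER_YEAR
    print(f"Break-even: {break_even_hours():,.0f} hours/year ({share:.0%} utilization)")
```

Under those assumptions a week of on-demand use costs on the order of a few thousand dollars, and the commitment only pays off if the machine is busy roughly 60% of the year, which is the ratio of the two rates.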

The genie is out of the bottle and cannot be put back in. Even if we avoid an extinction-level event (personally, I think the probability of extinction is very low, especially if there are multiple players developing AI systems and development remains as transparent as it has been so far), there will be large-scale economic displacement among “knowledge workers”, just as happened among unskilled workers in the 19th century and skilled workers in the 20th due to the advent of machines and automation. Many of those who watched skilled jobs in manufacturing offshored and/or automated away, without a whit of concern for what this did to families and communities, now observe the growing panic among cubicle dwellers discovering that GPT-4 can do their jobs and react by saying, “Cry me a river.”

Thank you, John. I particularly value your thoughts on such essential issues. Also, Scanalyst is a forum which is essential if anyone today wishes to retain even a drop of human agency in a world which relies on a handful of specialists who can create, maintain and improve the technologies necessary for everyday life. Thank you for that, too.

There have been a few natural experiments in banning technology. For example, the Ottoman Empire banned printing just around the beginning of its descent from its peak. Here’s an excerpt from Howes’ excellent Substack about the history of technology:

Sultan Bayezid II in 1485 issued a firman, or edict, banning printing in Arabic characters, or perhaps the Arabic or Turkish languages, or perhaps printing outright — it depends on which rumour the author happened to see. This is almost always mentioned alongside a follow-up edict by Selim I in 1515, which ostensibly confirmed the ban.

The Catholic Church was an early adopter of printing, but it still tried to control information via the “List of Prohibited Books” right at the point when Italy’s power, too, began descending from its pinnacle:

In 1571, Pius created the Congregation of the Index to give strength to the Church’s resistance to Protestant and heretical writings, and he used the Inquisition to prevent any Protestant ideas from gaining a foot hold in Italy.

Instead of trying to stop technological progress, we should be innovating on how to AI-proof systems and institutions against AI-enhanced adversaries.

OK. But how do you do that in the face of not really knowing what all an AI can do?

It’s not that different from information security: it’s an ongoing arms race.

I should also point out the White House blueprint for AI:

One of the policies poses an interesting conundrum:

You should not face discrimination by algorithms and systems should be used and designed in an equitable way.

So, for example, an algorithm might be discovered to send fewer women than men for a vasectomy. Should it leverage positive or negative algorithmic discrimination in order to achieve perfect equity, or is equity impossible?

Whoa! This is WAY BEYOND what an algorithm or computer should be deciding!

And BTW, how did the spelling of “ALGORITHM” change from the root “RHYTHM”?

Here’s Bard:

Achieving perfect equity in algorithms is impossible, as algorithms are designed by humans who are inherently biased. However, it is possible to design algorithms that are less discriminatory by being aware of potential biases and taking steps to mitigate them. In the case of the algorithm that sends fewer women than men for a vasectomy, it is important to understand why this is happening. Is it because women are less likely to want vasectomies? Or is it because the algorithm is biased against women? If it is the latter, then steps need to be taken to make the algorithm more equitable, such as by including more women in the data set that the algorithm is trained on.

It is also important to note that positive discrimination is not always the answer. In the case of the vasectomy algorithm, it would not be fair to send more women for vasectomies simply to achieve equity. The goal should be to send the right people for vasectomies, regardless of their gender. This can only be done by understanding the reasons why people choose to have vasectomies and making sure that the algorithm is not biased against any particular group.

Ultimately, achieving equity in algorithms is a complex and challenging task. However, it is important to strive for equity, as algorithms have the potential to have a significant impact on people’s lives. By being aware of potential biases and taking steps to mitigate them, we can make algorithms more equitable and help to create a more just society.

(highlights are mine, not Bard’s)
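For anyone who wants to see the conundrum in numbers, here is a toy sketch. The data, the `Patient` record and the `referral_rate` helper are all invented for illustration; nothing here comes from the White House blueprint or from Bard. It compares raw referral rates between groups (a check often called demographic or statistical parity) with the rates among only those patients for whom the procedure is applicable.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    sex: str        # "F" or "M"
    eligible: bool  # whether the procedure is applicable at all (toy flag)
    referred: bool  # the hypothetical algorithm's decision


def referral_rate(patients, sex, eligible_only=False):
    """Share of patients of the given sex who were referred.

    With eligible_only=True, only eligible patients are counted.
    Returns None when the group is empty, since the rate is undefined.
    """
    group = [
        p for p in patients
        if p.sex == sex and (p.eligible or not eligible_only)
    ]
    if not group:
        return None
    return sum(p.referred for p in group) / len(group)


# Toy data: 100 women (none eligible, none referred) and
# 100 men (all eligible, the first 10 referred).
patients = (
    [Patient("F", False, False) for _ in range(100)]
    + [Patient("M", True, i < 10) for i in range(100)]
)

raw_gap = referral_rate(patients, "M") - referral_rate(patients, "F")
print("raw referral-rate gap (M - F):", raw_gap)
print("rate among eligible women:", referral_rate(patients, "F", eligible_only=True))
print("rate among eligible men:", referral_rate(patients, "M", eligible_only=True))
```

The raw comparison reports a gap, while the comparison among eligible patients is simply undefined for one group. Which of the two counts as "equitable" is a choice of definition, and the code cannot make that choice for you.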

The word “algorithm” has no connection to “rhythm”. “Algorism” appeared in 1240, in a book by Alexander of Villedieu titled Carmen de Algorismo, whose first words are, in English translation:

Algorism is the art by which at present we use those Indian figures, which number two times five.

“Algorism” was coined from the name of Muhammad ibn Musa al-Khwarizmi, who wrote a book in 825 on calculation with Indian numerals (which we call “Arabic” numerals), and a second book on algebra. The spelling “algorithm” appeared in English in 1658 to describe “the art of reckoning by Cyphers”. By 1811, it had come to mean a step-by-step procedure.

“Rhythm”, by contrast, is derived from the Greek ῥυθμός, rhythmos, meaning any regularly recurring motion.

Even without GPT-4, if a cubicle dweller can work from home, somebody else (somebody much, much cheaper) can do the work from Bangalore. The cubicle dweller is out of luck, either way. And that is before a soon-to-be impoverished society starts to ask the fundamental question – Just what is it that the cubicle dwellers are producing anyway?

The underlying problem is that all of us human beings have too high a discount rate for the future; we are stuck in the present. The cubicle dwelling government employee who bought an imported automobile never thought – If everybody does this, there will be no taxpaying industry left in the US and then I will be unemployed too.

There was a time when children were named after parents and grandparents. People understood that society would go on long after they left the stage. But that was when people had children.